Revues.org and the Public Knowledge Project: Propositions to Collaborate (remote session): The Session Blog

Presenter: Marin Dacos

July 9, 2009 at 10:00 a.m. SFU Harbour Centre. Rm. 7000*

*Important Note: As this was a remote session, the presenter’s voice was inaudible most of the time due to technical difficulties and constant breaks in the live audio streaming. Therefore, it was difficult to capture parts of the presentation.

Marin Dacos (Source)

Background

Session Overview

Mr. Marin Dacos opened the session with a background summary of Revues.org. He indicated that it currently has 187 members and hosts 42,000 full-text, open-access documents in the humanities and social sciences (Session Abstract). He mentioned that approximately ten years ago, publishing systems were centralized and focused on the sciences. In the early days of Revues.org, only PDF documents were processed for publishing, by converting them to Extensible Markup Language (XML). Later, Lodel (electronic publishing software) was developed as a content management system (CMS) in which a web service could convert Word documents to XML. This was around the time when the Public Knowledge Project (PKP) started up; its focus was to decentralize publishing and provide a more international access point for publishing journals and managing conferences through Open Journal Systems (OJS). During this time, the two projects, Lodel and PKP, started to converge, with two distinct parts and four kinds of services.

(1) The Project Details

The first part of the project, as Mr. Dacos described it, consists of using PKP to develop a manuscript management tool that monitors the workflow through OJS. There is a need to create a new, more human-friendly user interface in order to support the dissemination of documents. This portion of the project also investigates the possibility of connecting Lodel and OJS so that the two systems can be used jointly. Mr. Dacos then explained the second part of the project, which deals with a document conversion tool called OTX that will convert, for example, RTF to XML. This parallels PKP's own development, and there is the possibility of sharing information on this work.

(2) Services

Revues.org offers various kinds of services for disseminating and communicating scholarly material and other information, such as upcoming events. One of the services presented is Calenda, which is claimed to be the largest French calendar system for the social sciences and humanities. This calendar service is important because it disseminates information such as upcoming scientific events to its audience. This communication tool is crucial in bringing members of various online communities together to participate in ‘study days,’ lectures, workshops, seminars, and symposiums, and to share their papers. Another valuable service offered by Revues.org is Hypotheses, a platform for research documents. This free service allows researchers, scientists, engineers and other professionals to post their experiences on a particular topic or phenomenon and share them with a wider audience. One can upload a blog, field notes, newsletters, diary entries, reviews on certain topics, or even a book for publishing. A third service offered by Revues.org is a monthly newsletter called La Lettre de Revues.org. This newsletter connects the Revues.org community by showcasing various pieces of information. For instance, new members who have recently joined are profiled and new online documents are highlighted for its subscribers to read.

The remainder of Mr. Dacos’ talk focused on Lemon8 and OTX, but this part was difficult to follow due to technical issues.

Questions from the audience asked at Mr. Dacos’ session:

It was difficult to get an audio connection with Mr. Dacos due to technical difficulties, therefore questions were not asked.

Related Links

- Lodel – electronic publishing software system

- The Centre of Open Electronic Publishing

- The National Centre for Scientific Research

- “The CNRS reinforces its policy of access to digital documents in the human and social sciences” (Article)

- Lift France 09 – Conference attended in France

References

Dacos, M. (2009). Revues.org and the public knowledge project: propositions to collaborate. PKP Scholarly Publishing Conference 2009. Retrieved 2009-07-09, from http://pkp.sfu.ca/ocs/pkp/index.php/pkp2009/pkp2009/paper/view/208

July 10, 2009

The new Érudit publishing platform: The Session Blog

Presenter: Martin Boucher

July 9, 2009 at 9:30 a.m. SFU Harbour Centre. Rm 7000

Martin Boucher (picture taken by Pam Gill)

Background

Mr. Martin Boucher is the Assistant Director of the Centre d’édition numérique / Digital Publishing Centre at the University of Montreal in Montreal, Quebec. This centre and the library at the University of Laval are the two Erudit publishing sites, which serve as a bridge to Open Journal Systems (OJS). Erudit focuses on the promotion and dissemination of research, similar to the Open Access Press (part of the PKP 2009 conference).

Session Overview

Mr. Martin Boucher highlighted the features and implications of a new publishing platform called Erudit (Session Abstract). He focused on sharing the capabilities of the Erudit publishing platform by first providing a brief historical overview of the organization, describing the publishing process, then introducing Erudit, and concluding with the benefits of such a platform.

(1) Historical Overview

Mr. Boucher started the session by pointing out that Erudit is a non-profit, multi-institutional publishing platform founded in 1998. This platform, based in Quebec, provides an independent research publication service that gives universities access to various types of documents in the humanities and social sciences. Erudit also encouraged the development of Synergies, a similar platform that targets a more mainstream audience since it is published in English. Some facts about Erudit:

- International standards are followed

- Publishes over 50,000 current and back-dated articles

- Offers management services, publishing, and subscriptions

- 90% of the downloads are free

- Receives over 1 million visits per month

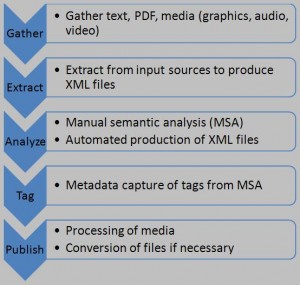

(2) Publishing Process

The description of the publishing process took up at least a third of the presentation time. Mr. Boucher felt it necessary to describe the details to the audience so that it would be easier to compare the old and new versions of the system. To begin, he indicated that the publishing process accepts only journal content based on Extensible Markup Language (XML), produced from various input sources (InDesign, QuarkXPress, OpenOffice, Word, RTF). He also pointed out that there is no peer review within the platform: only final documents are considered part of the collection. These documents nevertheless meet high quality standards, as they are expected to have been peer-reviewed before submission to the publishing platform. As yet, Erudit does not have software to assist with a peer-review process. The aim of the Erudit community is to provide quick digital dissemination of articles.

This complicated, lengthy process is made possible by a team of three to four qualified technicians, one coordinator, and one analyst, all of whom ensure a smooth transition of the documents into the virtual domain. The publishing process consists of five key steps, as outlined in Figure 1. Mr. Boucher elaborated on the importance of the analysis step and outlined the three steps of the manual semantic analysis. The first consists of manual and automated tagging, where detailed XML tagging is done only for XHTML and less tagging is done on PDF files. The second consists of the automated production of XML files for dissemination. The last is a rigorous quality assurance check by the technicians prior to dissemination.

Figure 1: The publishing process (image created by Pam Gill)

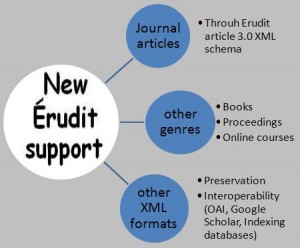

(3) New Erudit Platform

Once the publishing process had been described, the new Erudit platform was presented. Mr. Boucher indicated that there is now increased support for journal articles through the Erudit Article 3.0 XML schema (see Figure 2). Further, there is support for additional scholarly genres such as books, proceedings, and even online courses (a recent request). Some of these other document types, such as digitized books, are still at the experimental stage. In addition, there is continued support for other XML input/output formats to ensure preservation and interoperability, for example with the Open Archives Initiative (OAI), Google Scholar, and indexing databases.

Figure 2: The new Erudit support system (image created by Pam Gill)

(4) Benefits

To conclude his presentation on Erudit, Mr. Boucher explained the advantages of incorporating such a system by mentioning particular benefits of interest:

- Purely Java-based

- User-friendly, because a universal set of tools inside the applications makes it easier for the support technicians to troubleshoot and work with

- Supports plug-ins and extensions

- All scholarly genres are supported

- The process is simpler to follow

- An increase in the quality of data is noted

- The decrease in production time is evident

- There is less software involved

Mr. Boucher hinted that the beta version of the new Erudit platform was to be released in Fall 2009.

Questions from the audience asked at Mr. Boucher’s session:

- Question: For the open access subscription of readership which consists of a vast collection, are statistics being collected? Answer: Not sure.

- Question: Will the beta version of the publishing platform be released to everyone for bug reporting, testing, or move internally? Answer: Not sure if there will be public access. But it is a good idea to try the beta platform.

- Question: Are you considering using the manuscript coverage for the Synergies launch? Answer: The new platform is creatively tied to what we are doing, and it is really close, with Synergies in mind.

- Question: In a production crisis, are journal editors with you until the end of the process? Answer: They are there at the beginning of the process. They give us the material, but we do our own quality assurance and then we release to the journal; however, that is under our own control. Also, the editors cannot see the work in process, such as the metadata, though we do exchange information by email.

- Question: Has the provincial government been generous in funding? Answer: The journals had to publish in other platforms. There is a special grant for that. It is easier for us with that granting repository for pre-prints, documents or data section of the platforms which serve as an agent for them. Yet, Erudit is not considered by the government, although we are trying to get grants from the government. Currently, to maintain the platform, we only have money for basic management. It is difficult to get support from the government to continue developing platforms (such as Synergies).

- Question: How do the sales work for the two platforms? Answer: If you buy it, you will have all the content and access increases.

- Question: How is it passed to the publisher? Answer: The money goes to the journals; we keep only a small amount for internal management since we are a non-profit society.

- Question: Could you describe the current workflow and time required to publish one article? Answer: It depends on the article. If we are publishing an article that has no fine-grained XML tagging, or it is text from a PDF, then it requires less time for us to get it out. It depends on the quality of the article and the associated graphics, tables, size, etc. We publish an issue at a time. It takes, say, two days to get an article published.

Related Links

University of Montreal receives $14M for innovation (news article)

Contact the University of Montreal or the University of Laval libraries for more information on Erudit.

References

Boucher, M. (2009). The new erudit publishing platform. PKP Scholarly Publishing Conference 2009. Retrieved 2009-07-09, from http://pkp.sfu.ca/ocs/pkp/index.php/pkp2009/pkp2009/paper/view/182

July 10, 2009

PKP Open Archives Harvester for the Veterinarian Academic Community: The Session Blog

Date: July 9, 2009

Presenters: Astrid van Wesenbeeck and Martin van Luijt – Utrecht University

Photo taken at PKP 2009, with permission

Astrid van Wesenbeeck is Publishing Advisor for Igitur, Utrecht University Library

Martin van Luijt is the Head of Innovation and Development, Utrecht University Library

Abstract

Presentation:

Powerpoint presentation used with permission of Martin van Luijt

Quote: “We always want to work with our clients. The contributions from our users are very important to us.”

Session Overview

The University Library is 425 years old this year. While its staff are not scientists or students, they have a mission to provide services that meet the needs of their clients. Omega, the library's integrated search, brings in metadata from publishers and open access sources and indexes it.

Features discussed included the institutional repository, digitization and journals [mostly open and digital, total about 10 000 digitized archives].

Virtual Knowledge Centers [see related link below]

– this is the area of their most recent work

– shifts knowledge sharing from library to centers

– see slides of this presentation for more detail

The Problem They Saw:

We all have open access repositories now. How do you find what you need? There are too many repositories for a researcher to find information.

The Scenario

They chose to address this problem by targeting the needs of a specific group of users. The motivation – a one-stop shop for users and increased visibility for scientists.

The Solution:

Build an open-access subject repository, targeted at veterinarians, containing the content of at least 5 high-profile veterinarian institutions and meeting other selected standards.

The project was organized cooperatively, with a project board and a project team consisting of knowledge specialists and other essential people. The user interface was shaped by the users.

Their Findings:

Searching alone was not sufficient. The repository content was, to use the presenter's word, “Ouch!”: metadata quality varied wildly, relevant material was not discernible, some content was not accessible, and the repositories held low quantities of material.

Ingredients Needed:

A harvester to fetch content from open archives.

Ingredients Needed 2:

Fetch more content from many more archives, filter it and put it into records and entries through a harvester, then normalize each archive, and put it through a 2000+ keyword filter. This resulted in 700,000+ objects.
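The normalize-then-filter step described above can be sketched roughly as follows. This is a minimal illustration, not the presenters' tool: the field names (`title`, `description`, `source`), the tiny keyword set, and the function names are all assumptions made for the example, and the real filter reportedly used more than 2000 keywords.

```python
import re

# Hypothetical keyword list for illustration only; the filter described
# in the session reportedly contained 2000+ terms.
KEYWORDS = {"veterinary", "equine", "bovine", "canine"}


def normalize(record):
    """Map one archive's raw record onto a common shape (assumed fields)."""
    return {
        "title": (record.get("title") or "").strip(),
        "description": (record.get("description") or "").strip(),
        "source": record.get("source", "unknown"),
    }


def matches_keywords(record, keywords=KEYWORDS):
    """Return True if any keyword appears as a whole word in the record text."""
    text = f"{record['title']} {record['description']}".lower()
    words = set(re.findall(r"[a-z]+", text))
    return not keywords.isdisjoint(words)


def filter_records(raw_records):
    """Normalize every harvested record, then keep only keyword matches."""
    normalized = [normalize(r) for r in raw_records]
    return [r for r in normalized if matches_keywords(r)]
```

Normalizing before filtering means the keyword check only has to understand one record shape, whatever each archive originally delivered.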

Ingredients 3:

Use the harvester, filter the harvested content, develop a search engine and, finally, build a user interface.

Problem: The users wanted a search history, which pushed the designers into dreaming up a way of providing one without a login. As designers, they did not want or need a login, but at first saw no way around one in order to connect the history to the user. Further discussion revealed that the users did not have a problem with a system where the history did not follow them from computer to computer. This was a surprise to the designers, but it allowed for a login-free system.

Results: Much better research. Connected Repositories: Cornell, DOAJ, Glasgow, Igitur, etc.

Workshop Discussion and Questions:

1. How do you design an intelligent filter for searches? [gentleman also working to design a similar search engine] Re-harvesting occurs every night, with the PKP harvester rerunning objects through the filter. Incremental harvests are quick. Full harvests take a long time, a couple of weeks, so they try not to do them.

2. Do you use the PKP harvester and normalization tools in PKP? We started, but found that we needed to do more and produced a tool outside the harvester.

3. <Question not heard> It was the goal to find more partners to build the tool and its features. We failed. In the evaluation phase, we will decide if this is the right moment to roll out this tool. From a technical viewpoint, it is too early. We may need 1 to 2 years to fill the repositories. If you are interested in starting your own, we would be delighted to talk to you.

4. I’m interested in developing a journal. Of all your repositories, do you use persistent identifiers? How do I know that years down the road I will still find these things? Is anyone interested in developing image repositories? There is a Netherlands initiative to build a repository with persistent identifiers. What about image repositories? No. There are image platforms.

5. Attendee comment: I’m from the UK. If valuable, we’ll have to fight to protect these systems because of budget cuts and the publishers fighting. So, to keep value, we’ll have to convince government about it.
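The difference between incremental and full harvests mentioned in the first answer can be illustrated with a small sketch. It assumes the archives expose the standard OAI-PMH interface, where a `ListRecords` request may carry an optional `from` date; the base URL below is a placeholder and the helper name is hypothetical, not part of the PKP harvester.

```python
from datetime import date
from urllib.parse import urlencode


def list_records_url(base_url, since=None, metadata_prefix="oai_dc"):
    """Build an OAI-PMH ListRecords request URL.

    With `since` set, only records changed on or after that date are
    requested (an incremental harvest); without it, the whole archive
    is requested (a full harvest).
    """
    params = {"verb": "ListRecords", "metadataPrefix": metadata_prefix}
    if since is not None:
        params["from"] = since.isoformat()
    return f"{base_url}?{urlencode(params)}"


# Placeholder endpoint, for illustration only.
full = list_records_url("https://repo.example.org/oai")
nightly = list_records_url("https://repo.example.org/oai", since=date(2009, 7, 8))
```

A nightly run that passes the previous day's date only has to transfer and re-filter what changed, which is consistent with incremental harvests being quick while full harvests are avoided.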

Related Links:

OAI6 talk on Virtual Knowledge Centers

NARCIS, a Dutch repository of theses

First Monday article

Posted by Jim Batchelor

July 9, 2009

UK Institutional Repository Search: a collaborative project to showcase UK research output through advanced discovery and retrieval facilities: The Session Blog

July 9th 2009 at 10am

Presenters: Sophia Jones and Vic Lyte (apologies for absence – please email any comments and queries)

Background

(Source)

Sophia Jones, SHERPA, European Development Officer, University of Nottingham.

sophia.jones@nottingham.ac.uk 44 (0)115 84 67235

Sophia Jones joined the SHERPA team as European Development Officer for the DRIVER project at the end of November 2006. Since December 2007, she has also been working on the JISC funded Intute Repository Search project.

Jones has a BA in Public Administration and Management (Kent), an MA in Organisation Studies (Warwick) a Certificate in Humanities (Open University) and is currently studying part time for a BA in History (Open University). She is also fluent in Greek. Prior to joining the University of Nottingham, Jones worked as International Student Advisor at the University of Warwick, Nottingham Trent University and the University of Leicester.

Sophia’s interests include international travel, music and cinema, and she enjoys spending time reading the news of the day.

(Source)

Vic Lyte MIMAS, Director of INTUTE, University of Manchester

Mimas, University of Manchester, +44 (0)161 275 8330 vic.lyte@manchester.ac.uk

Vic Lyte is the Director of the Intute Repository Search project. Lyte is also Development Manager at Mimas and Technical Services Manager at the Mimas National Data Centre. Lyte’s specialty areas are the design and development of Autonomy IDOL technology within the academic and research vertical, and advanced search and discovery systems, architectures and interfaces in a research and teaching context. Lyte’s work is led by repository and search technologies.

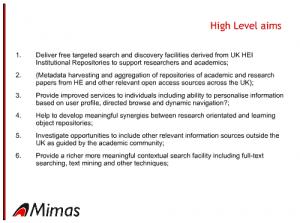

Session Overview

The UK Institutional Repository Search (IRS) was initiated after a gap was noticed in the knowledge access search process, where there appeared to be unconnected islands of knowledge materials. The IRS followed on from the Intute research project. The high-end aims included making it easier for researchers to move from discovery to innovation by linking repositories to exchange knowledge materials. The IRS uses two main search methods: a conceptual search and a text-mining search. A member of the audience asked how the two searches differ. Jones responded that the conceptual search searches documents, whilst the text-mining search searches within documents.

Jones then demonstrated the two types of searches using the example query "ethical research". Searches can be expanded with suggested related terms, or narrowed down by filtering by repository or document type. The conceptual search results can also be viewed as a 3D interactive visualisation. The text-mining search results can also be viewed as an interactive cluster map.

In summary, Jones stated that IRS had met all its high-end aims. A focus group with the research community showed encouraging support. The only suggested improvement was a personalisation aspect, which the IRS has the potential to add, with a projected roll-out in the next phase of IRS.

(Source)

Questions and comments

1. How is the indexing managed? Answer: Please email Vic Lyte.

2. Does IRS have data-mining tools in the set? Answer: Yes, this was developed by NaCTeM.

3. How much does IRS cost? Please email Vic Lyte.

4. Is IRS available now? Yes, there is free access. However at this stage IRS is more of a tool than a service.

5. Comment from Fred Friend (JISC): I’m from the UK. If this is valuable, we’ll have to fight to protect these systems because of budget cuts and the publishers fighting. So, to keep its value, we’ll have to convince government about it.

July 9, 2009

Legal Deposit at Library and Archives Canada and development of a Trusted Digital Repository: The Session Blog

Thursday, July 9, 2009 @9:30

SFU Harbour Centre (Sauder Industries Rm 2270)

Presenters:

Pam Armstrong (Manager of the Digital Preservation Office at Library and Archives Canada and Business Lead of the LAC Trusted Digital Repository Project)

Susan Haigh (Manager of the Digital Office of Published Heritage at Library and Archives Canada)

Session Overview

Pam Armstrong and Susan Haigh succinctly presented the aims and current progress of Library and Archives Canada’s Trusted Digital Repository and Legal Deposit.

Commentary

Pam Armstrong reviewed the Library and Archives Canada (“LAC”) Trusted Digital Repository (“TDR”) project currently underway. Two key aspects of the project were identified: the building component (the necessary infrastructure) and the business component (the workflow and efficiencies associated with it). Current work within the TDR includes creating a digital format registry, establishing threat risk assessment, establishing effective communications strategies and establishing a storage policy for data. Armstrong pointed to collaboration as the key to facing the challenges of preserving the digital heritage of Canadians; given the number of collaborators involved, it is crucial in ensuring the integrity of preservation. Additionally, Armstrong suggested that the TDR brings a corporate approach to digital preservation due to the requirement that content providers register online. The logistics of the TDR were displayed in a framework illustrating the structured flow of data. Data goes through a quarantine zone, then to a virtual loading dock (where it is, among other things, scanned for viruses, decrypted and validated), then to staff processing (where metadata is enhanced), then into a metadata management system and finally to an access zone. Ultimately, it appears that the TDR will not only organize and preserve data but also make it more accessible to Canadians through the World Wide Web.
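The structured flow of data described above can be pictured as a fixed sequence of stages with a gate at the loading dock. The sketch below is purely illustrative and is not LAC's software: the stage identifiers, the `Deposit` class, its two check flags and the `advance` function are all assumptions introduced for the example.

```python
from dataclasses import dataclass, field

# Stage names follow the order given in the session; the identifiers
# themselves are hypothetical.
STAGES = [
    "quarantine",
    "virtual_loading_dock",
    "staff_processing",
    "metadata_management",
    "access",
]


@dataclass
class Deposit:
    """A deposited item moving through the repository (illustrative only)."""
    name: str
    virus_free: bool = True
    valid_format: bool = True
    stage: str = STAGES[0]
    history: list = field(default_factory=lambda: [STAGES[0]])


def advance(deposit: Deposit) -> Deposit:
    """Move a deposit to the next stage.

    A deposit that fails the loading-dock checks is not allowed to
    continue to staff processing. A deposit already in the access
    zone is returned unchanged.
    """
    if deposit.stage == STAGES[-1]:
        return deposit
    if deposit.stage == "virtual_loading_dock" and not (
        deposit.virus_free and deposit.valid_format
    ):
        raise ValueError(f"{deposit.name} failed loading-dock checks")
    deposit.stage = STAGES[STAGES.index(deposit.stage) + 1]
    deposit.history.append(deposit.stage)
    return deposit
```

Keeping the stage order in one list makes the flow easy to audit: each deposit's `history` records exactly which zones it has passed through.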

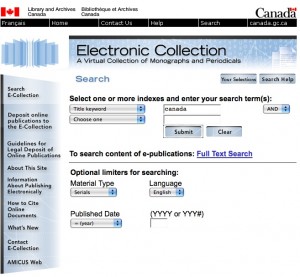

Susan Haigh reviewed the mandates of the Library and Archives Canada Act and broke down the logistics of the Virtual Loading Dock (“VLD”) that exists within the Legal Deposit side of the electronic collections. Haigh highlighted the LAC mandate as the preservation of the documentary heritage of Canadians through acquisition and preservation of publications at any cost. She identified the VLD as an effective tool for doing so: publishers are required by law to deposit two copies of a publication and its contents in cryptic form, along with any metadata, in the VLD. This puts the onus of responsibility on the publisher, though Haigh prefaced this with “theoretically,” indicating that the process is not yet as streamlined as it could be. Simply put, the VLD is part of a drive to build an accessible and comprehensible electronic collection. As a result of this collaborative effort since 1994, there are over 60,000 titles (monographs, serials and websites) and over 150,000 journal issues housed in the Legal Deposit. Open source software is used in LAC projects, including Heritrix, an open source web archiving crawler, which is used to acquire domains associated with the federal, provincial and territorial governments as well as Olympic Games domains.

- Front page of LAC’s Electronic Collection

The VLD was conceived as a pilot project in an effort to make the electronic collections at LAC more efficient. This software-based approach had several aims, including testing methodologies and learning technical and operational details about the workflow related to the intake of electronic materials of various types. As is the nature of a pilot project, LAC learned many lessons about the VLD that will lead to adjustments making the archiving of materials even more efficient. Lessons learned include the need for distinct ISBNs for digital editions, the need to have the bugs worked out of JHOVE with respect to large PDF files, and a need to tweak the functionality for publisher registration. Haigh indicated that this first release of the VLD was very useful in raising understanding of the importance of metadata capture functionality that is efficient and clear to publishers.

Moving to the future, LAC will begin to test serials as well as all transfer methods associated with the Legal Deposit. Additionally, LAC will explore two important questions related to preservation:

1. How do we capture Canadian OJS journals for legal deposit and preservation purposes?

2. How can LAC and the Can OJS community collaborate?

As for access, it is clear that the goal of the TDR is to provide access, but I would be interested to see statistics regarding who is actually accessing the materials from the LAC (i.e., mostly the academic community or individuals for personal purposes). In keeping within the confines of copyright, Armstrong noted that rights associated with content, as dictated by the publisher, will be reflected in terms of access.

Related Links

Library and Archives Canada – Official Site

Library and Archives Canada Act

Library and Archives Canada – Trusted Digital Repository Project

Library and Archives Canada – Legal Deposit

Library and Archives Canada – Electronic Collection

Article – “Attributes of a Trusted Digital Repository: Meeting the Needs of Research Resources”

July 9, 2009