The Latest Developments in XML Content Workflows: The Session Blog

Presenter: Adrian Stanley, Session Abstract

July 10, 2009 at 11:00 a.m.

Background

Adrian Stanley is the Chief Executive Officer of The Charlesworth Group (USA). Before this, Adrian worked for four years as Production Director for Charlesworth China, setting up its Beijing office. The Charlesworth Group offers cutting-edge automated typesetting services, as well as rights and licensing opportunities for publishers in the China market. Adrian is an active committee member for the Society for Scholarly Publishing (SSP), the Association of Learned and Professional Society Publishers (ALPSP North American Chapter), and the Council of Science Editors (CSE), and is working on a key project with the Canadian Association of Learned Journals. He has 20 years' experience in the publishing and printing sector.

Session Overview

This was a technical session, but one that kept the technology basic and focused on what the technology, particularly XML, does for the publishing process. Charlesworth works with XML and XML workflows, with the aim of supporting publishers. This session showcased their tools.

The benefits of using XML are automation and time savings in the publisher’s workflows. But more fundamentally, XML adds energy to data. The presenter took a short poem and showed it as text, then as HTML, then as XML. The change was less in how the poem looked than in what it now was: in XML, it had substantial additional information encoded with it.

The benefits of XML are numerous. It adds more information and data about an article, and that data is both machine and human readable. For further information on XML, the presenter referred to the excellent pre-conference workshop at the PKP 2009 conference by Juan Pablo Alperin.
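The text, HTML and XML progression can be illustrated with a small sketch. The poem chosen here and the element names (`poem`, `title`, `author`, `stanza`, `line`) are assumptions for illustration, not the presenter's actual example. The point is that the XML version can answer questions the other two cannot.

```python
import xml.etree.ElementTree as ET

# The same poem at three levels of markup. The poem and the element names
# are illustrative assumptions, not the presenter's own sample.
PLAIN = """The Road Not Taken
Robert Frost
Two roads diverged in a yellow wood,
And sorry I could not travel both"""

HTML = """<h1>The Road Not Taken</h1>
<p><i>Robert Frost</i></p>
<p>Two roads diverged in a yellow wood,<br/>
And sorry I could not travel both</p>"""

XML = """<poem xml:lang="en">
  <title>The Road Not Taken</title>
  <author>Robert Frost</author>
  <stanza n="1">
    <line>Two roads diverged in a yellow wood,</line>
    <line>And sorry I could not travel both</line>
  </stanza>
</poem>"""


def describe(poem_xml: str) -> dict:
    """Pull out facts that are explicit in the XML but only implied elsewhere."""
    root = ET.fromstring(poem_xml)
    return {
        "title": root.findtext("title"),
        "author": root.findtext("author"),
        "stanzas": len(root.findall("stanza")),
        "lines": len(root.findall("stanza/line")),
    }


if __name__ == "__main__":
    print(describe(XML))
```

In the plain text, a program can only guess which line is the title and which is the author. In the HTML it knows how they should look. In the XML it knows what they are.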

Image taken from presenter's presentation at PKP 2009, with permission

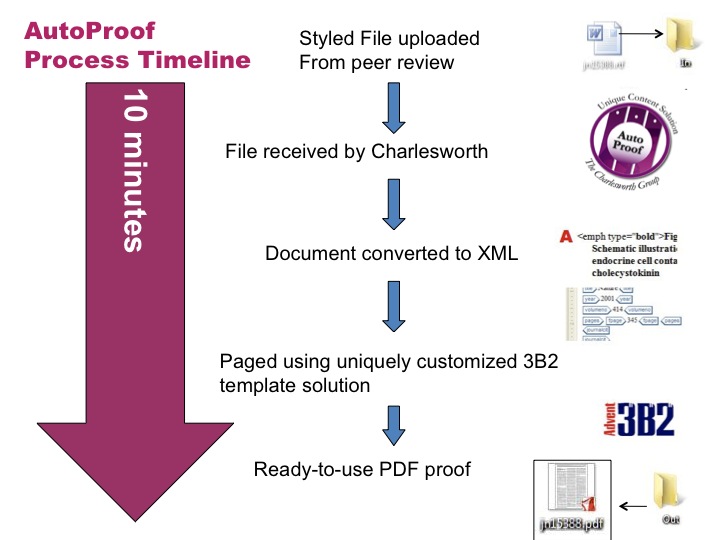

The AutoProof Process Timeline diagram highlights the 10-minute process that Charlesworth’s AutoProof performs. It takes a styled file, converts it to XML and then outputs it as a production-ready PDF. Charlesworth provides some Microsoft Word macros that help apply tagging and styling, to prepare Word documents for import into this process and ultimately for conversion to XML. The presenter took us through this process, showing each stage.
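To make the "styled file to XML" step concrete, here is a minimal sketch of the general idea, not of AutoProof itself, whose internals were not shown. It assumes the styled document has already been reduced to (style name, text) pairs. The style names and the target element names in `STYLE_TO_TAG` are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical mapping from word-processor paragraph styles to XML elements.
STYLE_TO_TAG = {
    "Title": "article-title",
    "Author": "contrib",
    "Abstract": "abstract",
    "Body": "p",
}


def styled_to_xml(paragraphs):
    """Convert (style, text) pairs into an XML tree.

    Unmapped styles raise an error, which reflects why consistent styling
    of the input matters: the converter has nothing else to go on.
    """
    article = ET.Element("article")
    for style, text in paragraphs:
        tag = STYLE_TO_TAG.get(style)
        if tag is None:
            raise ValueError(f"unmapped style: {style!r}")
        ET.SubElement(article, tag).text = text
    return article


if __name__ == "__main__":
    doc = [("Title", "A Sample Article"), ("Body", "First paragraph.")]
    print(ET.tostring(styled_to_xml(doc), encoding="unicode"))
```

A real pipeline would read the styles from the word-processor file and would go on to typeset the XML as PDF. Both steps are omitted here.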

The presenter then showed the Online Tracking System that allows a view into each stage in the AutoProof process. From one screen, the publisher and editor can run the entire publishing process.

Next, the presenter showed examples of the many other types of publications that AutoProof can produce. It is not just journals: meeting programs and abstracts, ebooks, dictionaries and so on can also be handled. As long as the files coming to AutoProof are structured, many types of documents can be used and created. What AutoProof does, then, is simplify the workflow and reduce the time it takes, with XML at its core.

In addition, AutoProof supports embedding XML metadata in the PDF, to make PDFs more readable and searchable. Storing the metadata in the PDF file using XMP (Extensible Metadata Platform) could make it much easier to import a large collection of PDF files into a reference manager. The presenter showed a sample XMP packet.
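For readers who have not seen one, the sketch below shows roughly what an XMP packet looks like and how a tool such as a reference manager might read it. The title and author values are invented placeholders, and the packet is held in a string rather than extracted from a PDF, which would need a PDF library and is out of scope here.

```python
import xml.etree.ElementTree as ET

# Namespaces used by XMP with Dublin Core properties.
NS = {
    "x": "adobe:ns:meta/",
    "rdf": "http://www.w3.org/1999/02/22-rdf-syntax-ns#",
    "dc": "http://purl.org/dc/elements/1.1/",
}

# A small sample packet. The metadata values are placeholders.
SAMPLE_PACKET = """<?xpacket begin="\ufeff" id="W5M0MpCehiHzreSzNTczkc9d"?>
<x:xmpmeta xmlns:x="adobe:ns:meta/">
  <rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#">
    <rdf:Description rdf:about=""
        xmlns:dc="http://purl.org/dc/elements/1.1/">
      <dc:title>
        <rdf:Alt><rdf:li xml:lang="x-default">A Sample Article</rdf:li></rdf:Alt>
      </dc:title>
      <dc:creator>
        <rdf:Seq><rdf:li>A. Author</rdf:li><rdf:li>B. Author</rdf:li></rdf:Seq>
      </dc:creator>
    </rdf:Description>
  </rdf:RDF>
</x:xmpmeta>
<?xpacket end="w"?>"""


def read_xmp(packet: str) -> dict:
    """Extract the Dublin Core title and creators from an XMP packet."""
    root = ET.fromstring(packet)
    desc = root.find("rdf:RDF/rdf:Description", NS)
    title = desc.findtext("dc:title/rdf:Alt/rdf:li", namespaces=NS)
    creators = [li.text for li in desc.findall("dc:creator/rdf:Seq/rdf:li", NS)]
    return {"title": title, "creators": creators}


if __name__ == "__main__":
    print(read_xmp(SAMPLE_PACKET))
```

Because the packet is ordinary XML, any XML parser can read it once it has been located in the file. That is what makes bulk import of PDFs into a reference manager plausible.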

Other applications and developments with XML are also possible, including automatic generation of tables of contents and indexes, author proofing via a link to a PDF generated from the XML, creating XML in multiple DTDs (document type definitions), and so on.

In summary, the goals of AutoProof are to enhance publications, to let publishers choose how much or how little they want to do with XML, to provide integration with other systems, and to provide the basis for further developments such as authoring templates. Lastly, the presenter showed an example of an OJS journal using AutoProof. Every reference automatically picked up its DOI (Digital Object Identifier), and web links to the references were created automatically.
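The linking half of that last step is simple to sketch. The example below assumes the DOI is already present in the reference text. How AutoProof looks up DOIs for references that lack them was not described, so that part is not attempted. The regular expression is a pragmatic approximation, not a full DOI grammar, and the sample reference is invented.

```python
import re

# Approximate DOI pattern: "10.", a registrant code, "/", then a suffix.
DOI_PATTERN = re.compile(r'\b10\.\d{4,9}/[^\s"<>]+')


def link_dois(reference: str) -> str:
    """Wrap each DOI in a reference string in a link to the DOI resolver."""

    def to_link(match: re.Match) -> str:
        raw = match.group(0)
        doi = raw.rstrip(".,;")        # sentence punctuation is not part of the DOI
        trailing = raw[len(doi):]
        return f'<a href="https://doi.org/{doi}">{doi}</a>{trailing}'

    return DOI_PATTERN.sub(to_link, reference)


if __name__ == "__main__":
    print(link_dois("Author A. A sample title. doi:10.1234/abcd.5678."))
```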

Session Questions

Q: How much of publishing workflow did the James Journal use?

A: The James Journal allowed forward and backward linking, see the references in this James Journal article. They used most of our services; most fundamentally we created the XML. Because they used a lot of our services they were able to publish this magazine with a part-time editor.

Q: What about integration with OJS?

A: We are working on creating integration with OJS.

Q: What are the costs?

A: There is a broad range, depending on the services that you need. The important point, however, is to start working out how much the alternative, using your own staff time, is really costing you. That is where we can help you make savings, by significantly reducing the amount of staff time you need to dedicate to the publication process.

References and Related Links

Charlesworth Group launches new open access OJS journal

DTDs (document type definition)

DOI (Digital Object Identifier)

July 10, 2009

XML and Structured Data in the PKP Framework: The Session Blog

Presenter: MJ Suhonos, Session Abstract

July 10, 2009 at 11:00 a.m.

Background

MJ Suhonos is a system developer and librarian with the Public Knowledge Project at Simon Fraser University. He has served as technical editor for a number of Open Access journals, helping them to improve their efficiency and sustainability. More recently, he has led development of PKP’s Lemon8-XML software, as part of PKP’s efforts to decrease the cost and effort of electronic publishing while improving the quality and reach of scholarly communication.

Session Overview

“Lemon8-XML is a web-based application designed to make it easier for non-technical editors and authors to convert scholarly papers from typical word-processor editing formats such as MS-Word .DOC and OpenOffice .ODT, into XML-based publishing layout formats.” (Lemon8-XML).

This was a packed session, with 50+ attendees. It attempted to give a fairly non-technical overview of the Lemon8-XML (L8X) software and its relationship to the PKP software suite and, equally importantly, to highlight the rich benefits of an XML workflow and the foundation it provides for the future.

The big question is why use an XML workflow. An XML workflow makes numerous things possible:

- Interaction with other web services: direct interaction with indexes and better interaction with online reading tools.

- Automatic layout: HTML and/or PDF can be generated on the fly.

- Complex citation interaction: forward and reverse linking, which allows the discovery of everyone who cited you anywhere on the web.

- Advanced bibliometrics, not just impact measures.

- Resource discovery: universal metadata can find related works, and rich document data allows search engines to be much more effective.

- The document becomes the metadata: the separation between the article and its metadata is removed, so all information is in one place.

This is the goal of L8X: to convert articles into structured XML and thus enable these benefits. It is also future proofing, as XML makes documents fundamentally open, convertible and preservable. Archiving XML (which is text) is much more flexible than archiving PDF files.

Using XML allows connection and communication with all these systems and means of display. It is also future proofing: because XML is just text, it can be converted into future formats.
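As a rough illustration of generating layout on the fly, the sketch below renders a tiny article to HTML from its XML source. The article schema (`article`, `title`, `author`, `section`, `heading`, `p`) is an invented stand-in, not the format L8X produces.

```python
import xml.etree.ElementTree as ET
from html import escape

# A made-up, minimal article structure used only for this sketch.
ARTICLE = """<article>
  <title>Sample Article</title>
  <author>A. Author</author>
  <section>
    <heading>Introduction</heading>
    <p>First paragraph.</p>
  </section>
</article>"""


def to_html(article_xml: str) -> str:
    """Render the structured article as HTML, one line per block element."""
    root = ET.fromstring(article_xml)
    out = [f"<h1>{escape(root.findtext('title', ''))}</h1>"]
    out.append(f'<p class="author">{escape(root.findtext("author", ""))}</p>')
    for section in root.findall("section"):
        out.append(f"<h2>{escape(section.findtext('heading', ''))}</h2>")
        for para in section.findall("p"):
            out.append(f"<p>{escape(para.text or '')}</p>")
    return "\n".join(out)


if __name__ == "__main__":
    print(to_html(ARTICLE))
```

The same source could feed a second function that emits a different format. That is the sense in which a single XML file supports several means of display.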

Where does this fit within the PKP framework? XML is already being used in OJS, for import and export and for exposing metadata to OAI harvesters. The next goal is to apply these benefits to all kinds of scholarly work, such as journal articles, proceedings, theses, and books or monographs. Moving L8X into the PKP web application library (WAL) will make all these features available to the whole PKP framework. That is the near-term plan for L8X. In the long term, beyond the next few years, the goal is to work on the concept that the document is the metadata, by building support for multiple XML formats in the WAL and by merging annotation, reading tools and comments directly into the article.

Some find the distributed resource-linking diagram at the end of the presentation complex. Essentially, structured metadata is what is needed to let applications in the publication sphere all talk to each other.
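For context on what exposing metadata to OAI harvesters involves, a harvester sends plain HTTP requests using the OAI-PMH protocol. The sketch below builds a `ListRecords` request for Dublin Core metadata. The base URL is a placeholder, and the helper name `list_records_url` is made up for this example.

```python
from urllib.parse import urlencode


def list_records_url(base_url, metadata_prefix="oai_dc", from_date=None, set_spec=None):
    """Build an OAI-PMH ListRecords request URL.

    base_url is the repository's OAI endpoint (a placeholder in this sketch).
    from_date and set_spec are optional selective-harvesting arguments.
    """
    params = {"verb": "ListRecords", "metadataPrefix": metadata_prefix}
    if from_date:
        params["from"] = from_date
    if set_spec:
        params["set"] = set_spec
    return f"{base_url}?{urlencode(params)}"


if __name__ == "__main__":
    print(list_records_url("https://journal.example.org/oai", from_date="2009-07-01"))
```

The response to such a request is itself XML, which is why a journal that already holds its metadata in structured form can answer harvesters with little extra work.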

Session Questions

Question: How automatic is automatic into XML for non-technical people? When can I just upload my doc and have it magically turn into XML?

Answer: Probably not ever, but it is semi automatic already. Some tools, like L8X, automate part of this process. Some things can be automated, but some will always require human effort.

Question: Will I be able to use L8X in my applications after this is integrated into the PKP framework?

Answer: We would like to make L8X available for use on its own after it becomes part of the framework, without requiring the framework. We are considering this for the future.

References and Related Links

Lemon8-XML demo server (login: lemon8 password: xmldoc)

July 10, 2009

Revues.org and the Public Knowledge Project: Propositions to Collaborate (remote session): The Session Blog

Presenter: Marin Dacos

July 9, 2009 at 10:00 a.m. SFU Harbour Centre. Rm. 7000*

*Important Note: As this was a remote session, the presenter’s voice was inaudible most of the time due to technical difficulties and constant breaks in the live audio streaming. Therefore, it was difficult to capture parts of the presentation.

Marin Dacos (Source)

Background

Session Overview

Mr. Marin Dacos opened the session with a background summary of Revues.org. He indicated that there are currently 187 members, with 42,000 full-text, open-access online documents in the humanities and social sciences (Session Abstract). He mentioned that approximately ten years ago, systems were centralized and focused on the sciences. In the early days of Revues.org, only PDF documents were processed for publishing, by converting them to extensible markup language (XML). Later, Lodel (electronic publishing software) was developed as a content management system (CMS) in which a web service could convert Word documents to XML. This was around the time the Public Knowledge Project (PKP) started up, with a focus on decentralizing and on providing a more international access point for publishing journals and managing conferences through Open Journal Systems (OJS). During this time, the two projects, Lodel and PKP, started to converge, with two distinct parts and four kinds of services.

(1) The Project Details

The first part of the project, as Mr. Dacos described it, consists of using PKP to develop a manuscript management tool that monitors the workflow through OJS. There is a need to create a new user interface and make it friendlier to work with, in order to support the dissemination of documents. This portion of the project also investigates the possibility of connecting Lodel and OJS so that the two systems can be used jointly. Next, Mr. Dacos explained the second part of the project, which deals with a document conversion tool called OTX that will convert, for example, RTF to XML. This parallels PKP’s own development, and there is the possibility of sharing information on this work.

(2) Services

Revues.org offers various kinds of services for the dissemination and communication of scholarly material and other information, such as upcoming events. One of the services presented is Calenda, which is claimed to be the largest French calendar system for the social sciences and humanities. This calendar service is important because it disseminates information such as upcoming scientific events to its audience. It is a crucial communication tool for bringing members of various online communities together to participate in ‘study days,’ lectures, workshops, seminars and symposiums, and to share their papers. Another valuable service offered by Revues.org is Hypotheses, a platform for research documents. This free service allows researchers, scientists, engineers and other professionals to post their experiences of a particular topic or phenomenon and share them with a wider audience. One can upload a blog, field notes, newsletters, diary entries, reviews on certain topics, or even a book for publishing. A third service offered by Revues.org is a monthly newsletter called La Lettre de Revues.org. This newsletter connects the Revues.org community by showcasing various pieces of information. For instance, new members who have recently joined are profiled, and new online documents are highlighted for its subscribers to read.

The remainder of Mr. Marin Dacos’ talk focused on Lemon8 and OTX, and was difficult to follow due to technical issues.

Questions from the audience asked at Mr. Dacos’ session:

Because technical difficulties made it hard to get an audio connection with Mr. Dacos, no questions were asked.

Related Links

- Lodel – electronic publishing software system

- The Centre of Open Electronic Publishing

- The National Centre for Scientific Research

- “The CNRS reinforces its policy of access to digital documents in the human and social sciences” (Article)

- Lift France 09 – Conference attended in France

References

Dacos, M. (2009). Revues.org and the public knowledge project: propositions to collaborate. PKP Scholarly Publishing Conference 2009. Retrieved 2009-07-09, from http://pkp.sfu.ca/ocs/pkp/index.php/pkp2009/pkp2009/paper/view/208

July 10, 2009

The new Érudit publishing platform: The Session Blog

Presenter: Martin Boucher

July 9, 2009 at 9:30 a.m. SFU Harbour Centre. Rm 7000

Martin Boucher (picture taken by Pam Gill)

Background

Mr. Martin Boucher is the Assistant Director of the Centre d’édition numérique / Digital Publishing Centre at the University of Montreal in Montreal, Quebec. This centre and the library at the University of Laval are the sites of the Erudit publishing operations, which serve as a bridge to Open Journal Systems (OJS). Erudit focuses on the promotion and dissemination of research, similar to the Open Access Press (part of the PKP 2009 conference).

Session Overview

Mr. Martin Boucher highlighted the features and implications of a new publishing platform called Erudit (Session Abstract). He focused on sharing the capabilities of the Erudit publishing platform by first providing a brief historical overview of the organization, describing the publishing process, then introducing Erudit, and concluding with the benefits of such a platform.

(1) Historical Overview

Mr. Boucher started the session by pointing out that Erudit is a non-profit, multi-institutional publishing platform founded in 1998. This platform, based in Quebec, provides an independent research publication service that gives universities access to various types of documents in the humanities and social sciences. Erudit also encouraged the development of Synergies, which is a similar platform but targets a more mainstream audience since it is published in English. Some facts about Erudit:

- International standards are followed

- Publishes over 50,000 current and back-dated articles

- Offers management services, publishing, and subscriptions

- 90% of the downloads are free

- Has over 1 million visits per month

(2) Publishing Process

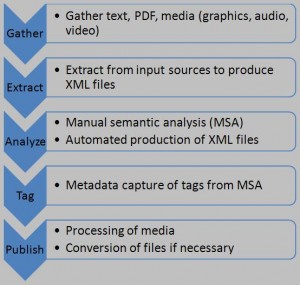

The description of the publishing process took up at least a third of the presentation time. Mr. Boucher felt it necessary to describe the details so that the audience could more easily compare the new and old versions of the system. To begin, he indicated that the publishing process accepts only journals based on extensible markup language (XML), along with various input sources (InDesign, QuarkXPress, OpenOffice, Word, RTF). He also pointed out that Erudit does not conduct peer review; only the final documents are considered part of the collection. These documents nevertheless meet high quality standards, as they are expected to be peer-reviewed before they are submitted to the publishing platform. As yet, Erudit does not have software to assist in a peer-review process. The aim of the Erudit community is to provide quick digital dissemination of the articles. This complicated, lengthy process is made possible by a team of three to four qualified technicians, one coordinator, and one analyst, all of whom ensure a smooth transition of the documents into the virtual domain. The publishing process consists of five key steps, as outlined in Figure 1. Mr. Boucher elaborated on the importance of the analysis step and outlined the three steps of the manual semantic analysis. The first consists of manual and automated tagging, where detailed XML tagging is done only for XHTML and less tagging is done on PDF files. The second consists of the automated production of XML files for dissemination. Lastly, rigorous quality assurance by the technicians prior to dissemination completes the analysis step.

Figure 1: The publishing process (image created by Pam Gill)

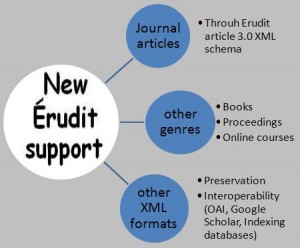

(3) New Erudit Platform

Once the publishing process had been described, Mr. Boucher presented an illustration of the new Erudit platform. He indicated that there is now increased support for journal articles through the Erudit Article 3.0 XML schema (see Figure 2). Further, there is support for additional scholarly genres such as books, proceedings, and even online courses (a recent request). Support for some of these other document types, such as digitized books, is still at the experimental stage. In addition, there is continued support for other XML input/output formats to ensure preservation and interoperability, for example with the Open Archives Initiative (OAI), Google Scholar, and indexing databases.

Figure 2: The new Erudit support system (image created by Pam Gill)

(4) Benefits

To conclude his presentation on Erudit, Mr. Boucher explained the advantages of incorporating such a system by mentioning particular benefits of interest:

- Purely Java-based

- User-friendly, because it has a universal set of tools inside the applications, which makes it easier for the support technicians to troubleshoot and work with

- Supports plug-ins and extensions

- All scholarly genres are supported

- The process is simpler to follow

- An increase in the quality of data is noted

- The decrease in production time is evident

- There is less software involved

Mr. Martin Boucher hinted that the beta version of Erudit was to be released in fall 2009.

Questions from the audience asked at Mr. Boucher’s session:

- Question: For the open access subscription of readership which consists of a vast collection, are statistics being collected? Answer: Not sure.

- Question: Will the beta version of the publishing platform be released to everyone for bug reporting, testing, or move internally? Answer: Not sure if there will be public access. But it is a good idea to try the beta platform.

- Question: Are you considering using the manuscript coverage for the Synergies launch? Answer: The new platform is creatively tight to what we are doing, and it is really close, with Synergies in mind.

- Question: In a production crisis, are journal editors with you until the end of the process? Answer: They are there at the beginning of the process. They give us the material, but we do our own quality assurance and then we release to the journal; it is under our own control. Also, the editors cannot see the work in progress, such as the metadata, though we do exchange information by email.

- Question: Has the provincial government been generous in funding? Answer: The journals had to publish in other platforms. There is a special grant for that. It is easier for us with that granting repository for pre-prints, documents or data section of the platforms which serve as an agent for them. Yet Erudit is not considered by the government, although we are trying to get grants from the government. Currently we only have money for the basic management needed to maintain the platform. Getting government support to continue developing platforms (such as Synergies) is difficult.

- Question: How do the sales work for the two platforms? Answer: If you buy it, you will have all the content and access increases.

- Question: How is it passed to the publisher? Answer: The money goes to the journals. We keep only a small amount for internal management, since we are a non-profit society.

- Question: Could you describe the current workflow and time required to publish one article? Answer: It depends on the article. If we are publishing an article that has no fine grain XML tagging or it is text from a PDF, then it requires less time for us to get it out. It depends on the quality of the article and the associated graphics, tables, size etc. We publish an issue at a time. It takes say two days to get an article published.

Related Links

University of Montreal receives $14M for innovation (news article)

Contact the University of Montreal or the University of Laval libraries for more information on Erudit.

References

Boucher, M. (2009). The new erudit publishing platform. PKP Scholarly Publishing Conference 2009. Retrieved 2009-07-09, from http://pkp.sfu.ca/ocs/pkp/index.php/pkp2009/pkp2009/paper/view/182

July 10, 2009

10 Years Experience with Open Access Publishing and the Development of Open Access Software Tools: The Session Blog

Presenter: Gunther Eysenbach

July 9, 2009 at 3:00 p.m.

Gunther Eysenbach

Background

Gunther Eysenbach is editor/publisher of the Journal of Medical Internet Research (JMIR), which has established itself as the top peer-reviewed journal in the field of eHealth.

Eysenbach is also an associate professor with the Department of Health Policy, Management and Evaluation (HPME) at the University of Toronto. In addition, he is a senior scientist at the Centre for Global eHealth Innovation. JMIR boasts an impact factor of 3.0, which ranks highly among journals in the health sciences (Eysenbach, 2009).

Session Overview

Eysenbach gave a condensed version of his talk due to time constraints, but he managed to give the audience the key elements of JMIR’s path from its beginnings to its present developments. He described the main concepts of JMIR as triple ‘o’ (open access, open source, open peer-reviewed). The basis of his talk was to explain how JMIR has evolved and the modifications it uses to continue developing and publishing online medical journals.

JMIR has developed a system that allows many facets of journal publishing to interface with online, web-based technologies. JMIR’s structure builds on OJS, but the business model has also been adapted to keep up with the ever-changing structures of the Web. For example, JMIR has developed a system that re-bundles journal content by topic into what it calls eCollections.

There are three levels of membership/subscription: individual membership, institutional membership and institutional membership B (Gold). The online business model supports complex innovations such as generating electronic invoices for members, automatically checking the wording of author submissions for plagiarism, and fast-track editing options.

JMIR also experimented with open peer review and found that approximately 20% of authors want it. JMIR continues to look at this issue and currently has a section on the submission form where authors choose between self-assigned and editor-assigned reviewers. Editors can use what is called the Submissions Dashboard to get a visual chart of the various submissions and to track the status of each, including whether or not it is a fast-track edit.

JMIR’s system also integrates XML into OJS with conversion scripts, and XML files can be edited online. In addition, WebCite, an on-demand archiving system, is used so that readers and authors see the same version of a file.

Audience Input

Questions arose around copyright issues. Eysenbach responded that JMIR relies on “fair use” policies and that submission forms invite authors to inform the journal whether or not they want their work archived.

The cost of membership for developing countries was also raised from the floor. Eysenbach responded that various models are currently being considered, possibly including higher membership fees to subsidize authors from developing countries, but the criteria for subsidies have yet to be worked out. This was recognized as a dilemma.

References

Eysenbach, G. (2009). Open Access journal JMIR rises to top of its discipline. Retrieved July 7, 2009, from http://gunther-eysenbach.blogspot.com/2009/06/open-access-journal-jmir-rises-to-top.html

Related Links

Research at University Health Network

July 9, 2009