What would happen if we programmed a computer to design a faster, more efficient computer? Well, if all went according to plan, we’d get a faster, more efficient computer. Now suppose we assign this newly designed computer the same task: improve on your own design. It does so, faster (and more efficiently) than its predecessor, and we repeat the process, each iteration accelerating the next. Towards what? Merely a better computer? Would this iterative design process ever slow down, or hit a wall? After enough iterations, would we even recognize the hardware and software devised by these increasingly capable systems? These may turn out to be some of the most important questions our species will ever ask.

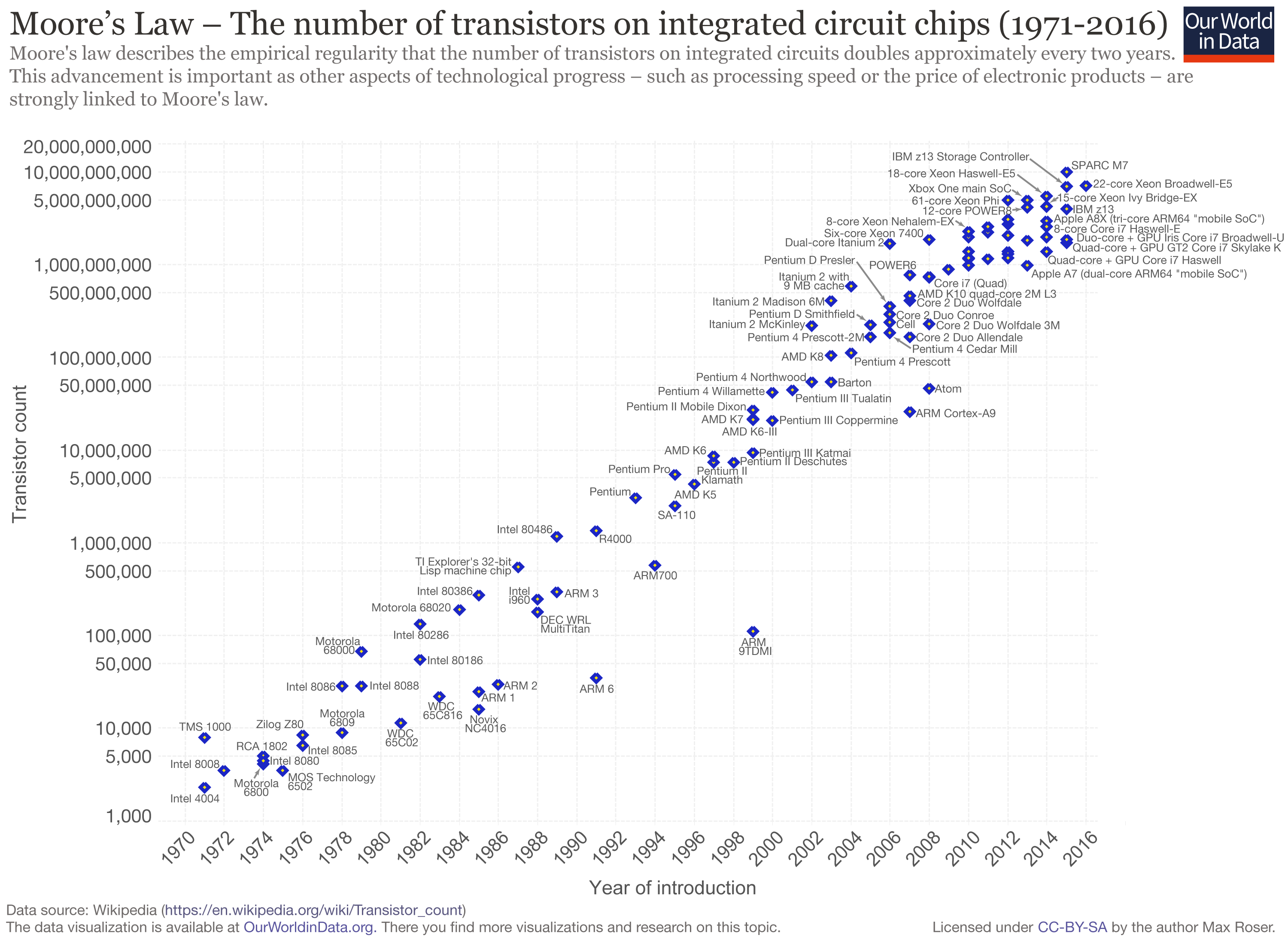

In 1965, Gordon Moore, then director of research and development at Fairchild Semiconductor (he would co-found Intel three years later and eventually serve as its CEO), wrote a paper describing a simple observation: every year, the number of components in an integrated circuit (computer chip) seemed to double. This roughly corresponds to a doubling of performance, as manufacturers can fit twice the “computing power” on the same-sized chip. In 1975, Moore revised his estimate to a doubling roughly every two years, and around this same time Caltech professor Carver Mead popularized the principle under the name “Moore’s law”. Although current technology is brushing up against fundamental physical limits on size (quantum mechanics implies a practical “minimum size” for a transistor), Moore’s law has more or less held throughout the last four and a half decades.
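To make the compounding concrete, here is a minimal Python sketch of the arithmetic behind a fixed doubling period. The function name `moore_growth` and its parameters are hypothetical illustrations, not anything from Moore’s paper, and the sketch assumes perfectly steady doubling with no slowdown.

```python
def moore_growth(initial: float, years: float, doubling_period: float = 1.0) -> float:
    """Return the component count after `years`, assuming (hypothetically)
    a perfectly steady doubling every `doubling_period` years."""
    if doubling_period <= 0:
        raise ValueError("doubling_period must be positive")
    # Number of doublings that fit into the elapsed time.
    doublings = years / doubling_period
    return initial * 2 ** doublings


# One decade at a one-year doubling period vs. a two-year doubling period.
print(moore_growth(1, 10))       # 1024.0
print(moore_growth(1, 10, 2.0))  # 32.0
```

Ten years of yearly doubling multiplies the count by 1024, while the same decade at a two-year pace multiplies it by only 32: the length of the doubling period dominates the outcome.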

This performance trend represents exponential growth over time. Exponential change underpins Ray Kurzweil’s “law of accelerating returns”: in the context of technology, accelerating returns mean that a technology improves at a rate proportional to its current quality, so the better it gets, the faster it gets better. Does this sound familiar? It is exactly the kind of acceleration we anticipated from computers designing computers. This is what is meant by a technological singularity: once the conditions for accelerating returns are met, advances begin to spiral beyond our understanding, if not our control.
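The phrase “a rate proportional to its quality” can be written as a simple differential equation. As a rough sketch, treat quality as a single number $Q(t)$ and assume a constant of proportionality $k > 0$ (both are simplifying assumptions for illustration, not part of Kurzweil’s own formulation):

$$
\frac{dQ}{dt} = kQ \quad\Longrightarrow\quad Q(t) = Q_0\, e^{kt}, \qquad t_2 = \frac{\ln 2}{k}
$$

Here $Q_0$ is the starting quality and $t_2$ is the doubling time, found by solving $e^{k t_2} = 2$. A doubling every year, as in Moore’s original observation, corresponds to $k = \ln 2 \approx 0.69$ per year.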

This concept is perhaps most easily applied to artificial intelligence (AI):

> Let us suppose that the technological trends most relevant to AI and neurotechnology maintain their accelerating momentum, precipitating the ability to engineer the stuff of mind, to synthesize and manipulate the very machinery of intelligence. At this point, intelligence itself, whether artificial or human, would become subject to the law of accelerating returns, and from here to a technological singularity is but a small leap of faith.
>
> (Murray Shanahan, *The Technological Singularity*, MIT Press)

Clearly, there is reason to wade cautiously into these teeming depths. In his excellent TED Talk, the world-renowned AI philosopher Nick Bostrom suggests that, though the advent of machine superintelligence remains decades away, it would be prudent to address its lurking dangers as far in advance as possible.


— Ricky C.