What defines immortality? If immortality is defined by “living” beyond the grave as a physical body with a personality and ability to interact with the world, then computer science is on the edge of this scary yet fascinating phenomenon.

https://www.sciencealert.com/images/articles/processed/shutterstock_225928441_web_1024.jpg

What is it:

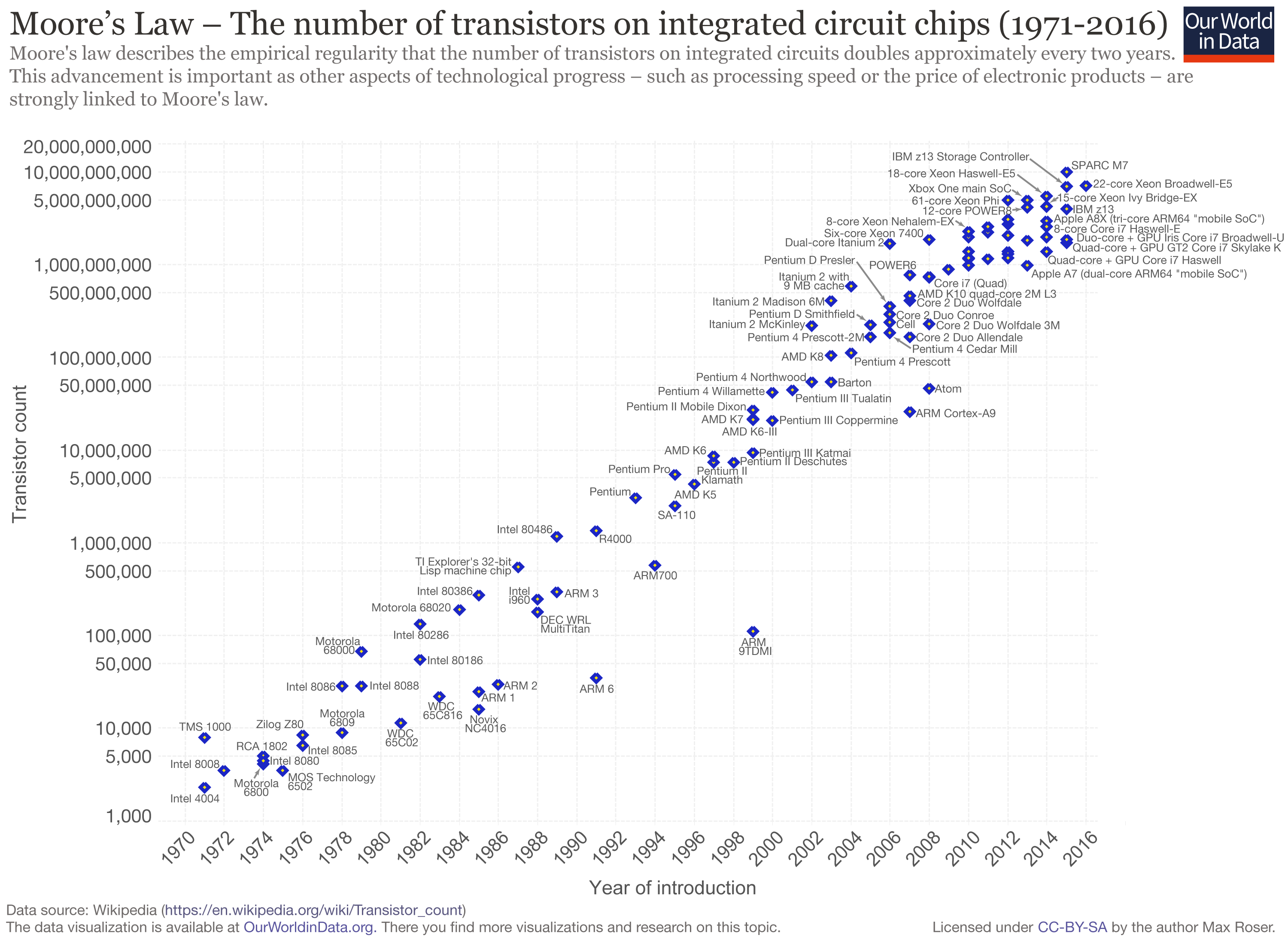

In the past few years, researchers have developed many different types of AI technology to capture and store human data, with the potential of building virtual reality replicas of the deceased. This technology is based on the idea of “augmented eternity,” where an AI programme uses the technological imprint left behind by someone, such as their past social media activity, to build a digital replica of them. Lifenaut, a branch of the Terasem Movement, for example, gathers human personality data for free with the hope of creating a foundational database to one day transfer into a robot or hologram. While this technology is still in its experimental stages, at least 56,000 people have already stored mind-files online, each containing the person’s unique characteristics, including their mannerisms, beliefs, and memories. According to researchers, in about fifty years, millennials will have reached a point in their lives where they will have generated zettabytes of data (one zettabyte is 1 trillion gigabytes), which is enough to create a digital version of themselves.

How:

The prospective application of this technology is that loved ones may use robot reincarnation as a way to grieve or commemorate someone who passed away. VR replicas will be able to speak with the same voice as the dead person, ask questions, and even perform simple tasks. They may be programmed to contain memories and personality, so family members could dynamically converse and interact with them.

https://www.youtube.com/watch?time_continue=89&v=KYshJRYCArE

Concerns:

Of course, digital-afterlife technology is a revolutionary concept that carries major ethical and practical implications. Some believe that VR replicas of loved ones are simply a new way to mourn the deceased, similar to the ways people already use technology to remember their loved ones, such as watching videos or listening to voice recordings. The problem with this application is that it does not seem like a healthy way to grieve. Allowing people to cling to digital personas of deceased individuals out of fear and delusion could keep them from moving on with their lives. Another concern is that this AI technology could lead to robots achieving high intelligence, becoming so advanced they could replicate the human race. Some futurists thus believe that it is essential to build preventative technology into robots' chips to guard against this apocalyptic risk. There are also significant social implications to consider with VR replicas. Should the right to create these replicas be based solely on wealth? The prospect of people being able to buy immortality, even in digital form, is certainly problematic, as it perpetuates troubling societal disparity. Ultimately, VR human replication technology has far too many harmful individual and societal consequences for it to be a worthwhile or necessary AI innovation.

“Do you believe in immortality?”

“No, and one life is enough for me.” – Albert Einstein

~ Angela Wei
