Summary

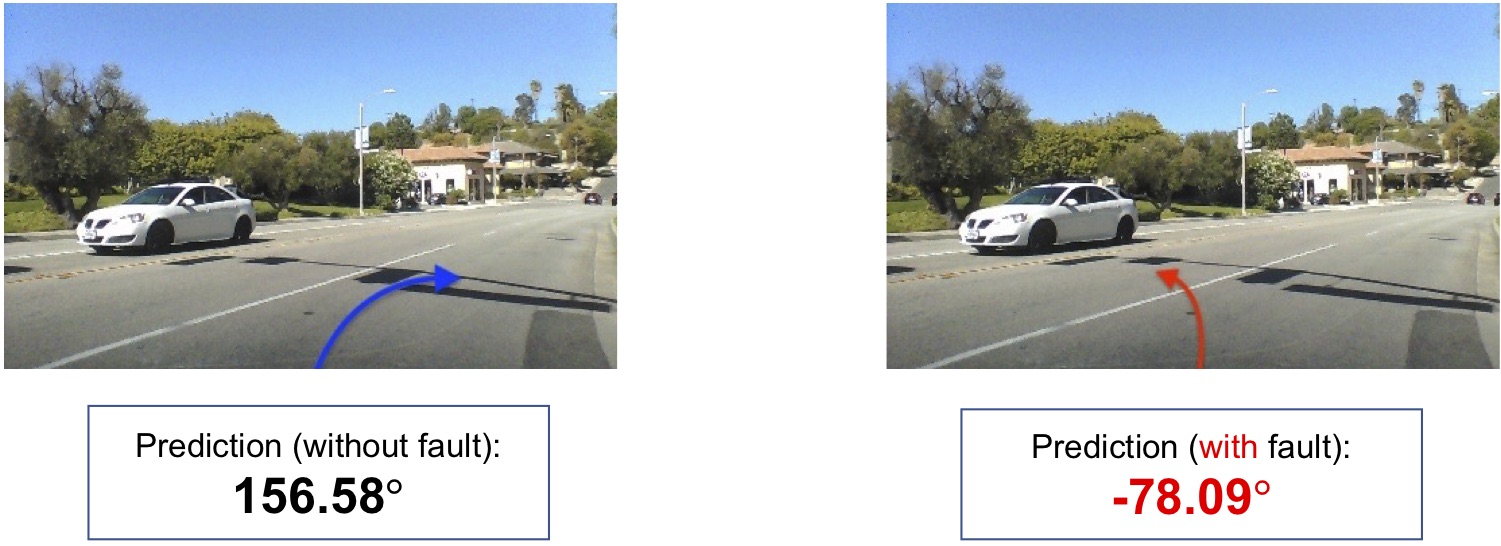

Machine learning (ML) systems are increasingly deployed in safety-critical applications such as autonomous driving and medical diagnosis. Unfortunately, the computing hardware that executes these ML models is prone to transient hardware faults (i.e., soft errors), which manifest as single bit-flips and can eventually cause silent data corruptions (SDCs), i.e., incorrect outputs produced without any indication of failure. The accompanying figure illustrates how a soft error can cause an ML model to predict the wrong steering angle for a self-driving car (from our experiments). The goal of this project is to understand how faults arising in different components (e.g., datapath, memory) affect these ML systems, and to develop novel solutions that enhance their error resilience.

Efficient fault injection for ML systems

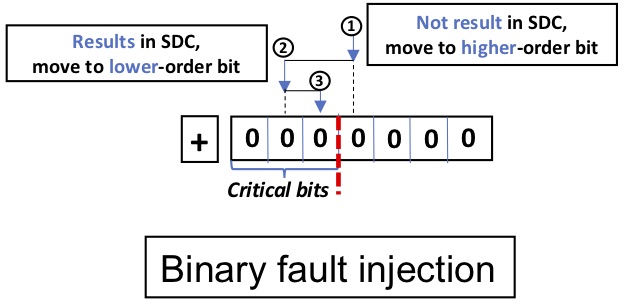

This project first aims to understand the vulnerability of ML systems to computational faults by identifying the critical bits, i.e., the bits in which a soft error will almost always result in an SDC. Existing solutions perform random fault injection and can hence find only a small portion of the critical faults. To overcome this limitation, we propose an efficient fault injector whose main idea is to characterize the fault patterns unique to ML computations based on the mathematical properties of the ML functions. We implement our technique in a tool called BinFI [1], which identifies most of the critical faults in ML systems with low overhead. The accompanying figure demonstrates the fault injection strategy used by BinFI.
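The idea of exploiting a mathematical property to avoid exhaustive injection can be sketched as follows. The text above does not say which property is used; this sketch assumes monotonicity, so that if flipping one bit causes an SDC, flipping any higher-order bit (a larger deviation in the same direction) does too. Under that assumption, a binary search over bit positions finds the boundary between benign and critical bits. The toy model `classify`, the 16-bit integer encoding, and all function names are hypothetical and are not taken from BinFI itself.

```python
WIDTH = 16        # assumed fixed-point width (hypothetical)
VALUE = 37        # fault-free value fed to the toy model


def classify(x: int) -> int:
    """Toy monotonic 'model': the predicted class changes once x reaches 1000."""
    return 1 if x >= 1000 else 0


def is_sdc(value: int, bit: int) -> bool:
    """Inject a single bit-flip and report whether the prediction changes."""
    return classify(value ^ (1 << bit)) != classify(value)


def exhaustive_critical_bits(value, bits):
    """Baseline: one fault injection per candidate bit."""
    return [b for b in bits if is_sdc(value, b)]


def binary_search_critical_bits(value, bits):
    """Find the lowest critical bit among `bits` (sorted ascending).

    Relies on the monotonicity assumption: every bit above the lowest
    critical bit is also critical, so no further injections are needed.
    Returns the critical bits and the number of injections performed.
    """
    lo, hi, injections = 0, len(bits), 0
    while lo < hi:
        mid = (lo + hi) // 2
        injections += 1
        if is_sdc(value, bits[mid]):
            hi = mid          # boundary is at mid or below
        else:
            lo = mid + 1      # boundary is above mid
    return bits[lo:], injections


# Only 0 -> 1 flips are considered here, so the deviation is positive and
# grows with the bit position.
ZERO_BITS = [b for b in range(WIDTH) if not (VALUE >> b) & 1]

critical, injections = binary_search_critical_bits(VALUE, ZERO_BITS)
print("critical bits:", critical)
print("injections:", injections, "of", len(ZERO_BITS), "candidates")
```

The search needs a logarithmic number of injections per value instead of one per bit, which is where the low overhead would come from in this simplified setting. Flips from 1 to 0 produce deviations in the opposite direction and would need a separate search.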

Enhancing the error resilience of ML systems

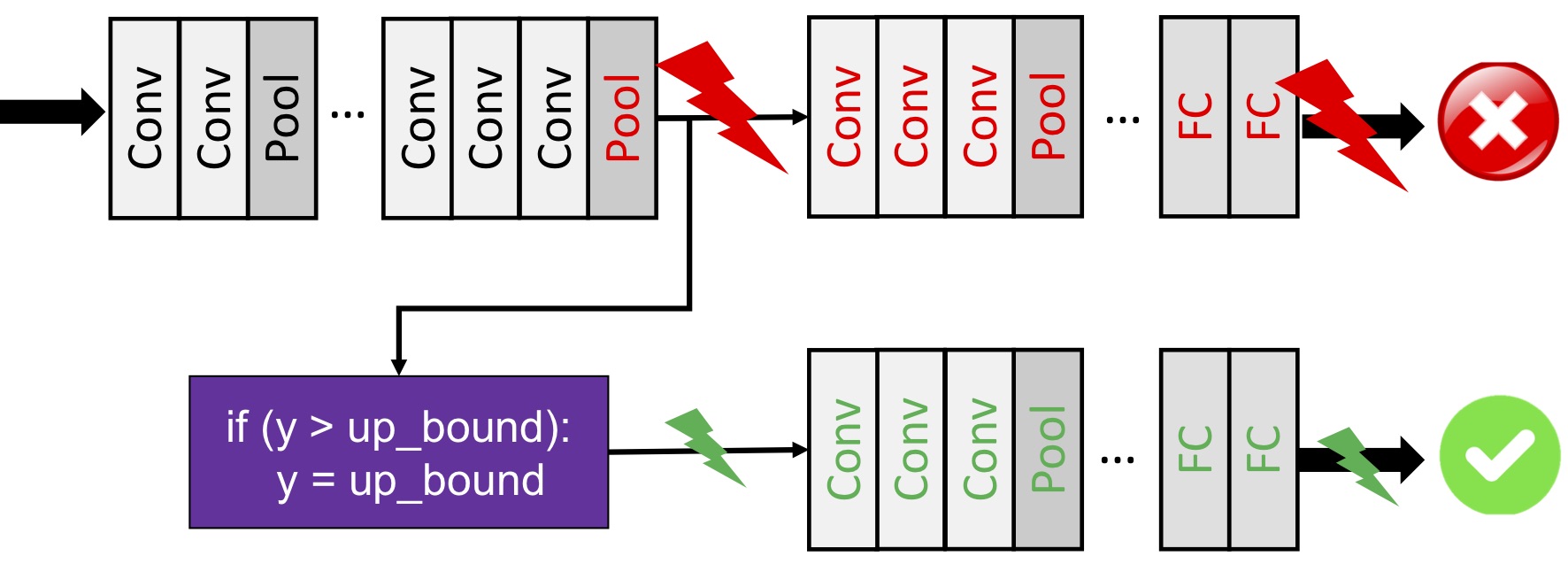

BinFI efficiently pinpoints the critical faults in ML systems, and our follow-up work focuses on how to protect ML systems from these critical faults. Specifically, we introduce a low-cost fault correction technique called Ranger [2], which allows the ML program to make correct predictions even in the presence of critical faults. The main idea is to selectively restrict the value ranges in different layers of the model, as the accompanying figure illustrates. The intuition behind range restriction is that it dampens the large deviations caused by critical faults, and the reduced deviations can be inherently tolerated by the ML model. Ranger is implemented as an automated transformation that converts unreliable ML models into error-resilient ones, which significantly improves the models’ reliability under soft errors with negligible overhead.
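A minimal sketch of range restriction on a toy two-layer network is shown below. The weights, the injected fault value, and the bound of 2.0 are all hypothetical; the sketch assumes that a suitable bound for each layer is known in advance (for example, from observing fault-free activations), and it is not the Ranger implementation.

```python
def dense(xs, weights, biases):
    """Fully connected layer: one weighted sum per output neuron."""
    return [sum(w * x for w, x in zip(row, xs)) + b
            for row, b in zip(weights, biases)]


def relu(xs):
    return [max(0.0, x) for x in xs]


def restrict(xs, low, high):
    """Range restriction: clamp every activation into [low, high]."""
    return [min(max(x, low), high) for x in xs]


# Hypothetical weights for a 2-2-2 network.
W1, B1 = [[0.5, -0.2], [0.1, 0.8]], [0.0, 0.1]
W2, B2 = [[0.4, -0.5], [-0.3, 0.9]], [0.0, 0.0]


def predict(x, fault=None, bound=None):
    """Return the predicted class.

    fault: optional (index, value) pair that overwrites one hidden
           activation, simulating a bit-flip in a high-order bit.
    bound: optional upper bound applied to the hidden activations.
    """
    hidden = relu(dense(x, W1, B1))
    if fault is not None:
        index, value = fault
        hidden[index] = value
    if bound is not None:
        hidden = restrict(hidden, 0.0, bound)
    scores = dense(hidden, W2, B2)
    return scores.index(max(scores))


x = [1.0, 2.0]
print("fault-free:          ", predict(x))
print("faulty, unprotected: ", predict(x, fault=(0, 1e30)))
print("faulty, restricted:  ", predict(x, fault=(0, 1e30), bound=2.0))
```

The corrupted activation is not restored to its exact fault-free value. It is only pulled back into a plausible range, and the remaining small deviation is absorbed by the rest of the network, which matches the intuition described above.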

Memory faults in ML systems

In a similar line of work, we look into how memory faults affect ML models by corrupting the model parameters stored in memory. Memory faults affect not only the reliability of these systems but also their security, because they can be deliberately induced by attackers (e.g., through Rowhammer attacks) to degrade the models’ performance. We are actively exploring solutions to make ML models more resilient to such memory faults [3].
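To make the effect of a memory fault on a stored parameter concrete, the sketch below flips individual bits of a weight, assuming the weight is stored as an IEEE 754 single-precision value. The helper name `flip_float32_bit` and the example weight of 0.5 are illustrative only.

```python
import struct


def flip_float32_bit(value: float, bit: int) -> float:
    """Flip one bit (0 = mantissa LSB, 31 = sign) of a float32 value."""
    (bits,) = struct.unpack("<I", struct.pack("<f", value))
    (flipped,) = struct.unpack("<f", struct.pack("<I", bits ^ (1 << bit)))
    return flipped


weight = 0.5
for bit in (0, 22, 30, 31):
    print(f"bit {bit:2d}: {weight} -> {flip_float32_bit(weight, bit)!r}")
```

A flip in a low mantissa bit barely changes the weight, whereas a flip in the most significant exponent bit (bit 30) changes its magnitude by many orders of magnitude. This asymmetry is why a small number of well-placed bit-flips can be enough to degrade a model.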

Team

Zitao Chen (zitaoc@ece.ubc.ca)

Ali Asgari (aliasgarikh97@gmail.com)

Papers

[1] Zitao Chen, Guanpeng Li, Karthik Pattabiraman, and Nathan DeBardeleben. BinFI: An Efficient Fault Injector for Safety-Critical Machine Learning Systems. In The International Conference for High Performance Computing, Networking, Storage, and Analysis (SC ’19), 2019. Acceptance rate: 20.9%. Finalist for reproducibility award.

[2] Zitao Chen, Guanpeng Li, and Karthik Pattabiraman. A Low-cost Fault Corrector for Deep Neural Networks through Range Restriction. In The 51st Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN), 2021. Acceptance rate: 16.3%. Best paper award runner-up. Implemented as part of Intel’s OpenVINO Toolkit.

[3] Florian Geissler, Syed Qutub, Sayanta Roychowdhury, Ali Asgari, Yang Peng, Akash Dhamasia, Ralf Graefe, Karthik Pattabiraman, and Michael Paulitsch. Towards a Safety Case for Hardware Fault Tolerance in Convolutional Neural Networks Using Activation Range Supervision, 2021. Best Paper Award Nominee.