What would happen if we programmed a computer to design a faster, more efficient computer? Well, if all went according to plan, we’d get a faster, more efficient computer. Now, we assign this newly designed computer the same task: improve on your own design. It does so, faster (and more efficiently), and we repeat the process, accelerating onwards. Towards what? Merely a better computer? Would this iterative design process ever slow down, ever hit a wall? After enough iterations, would we even recognize the hardware and software devised by these ever more capable systems? As it turns out, these questions have extremely important ramifications for artificial intelligence (AI) and for humanity’s continued survival.

Conceptual underpinnings

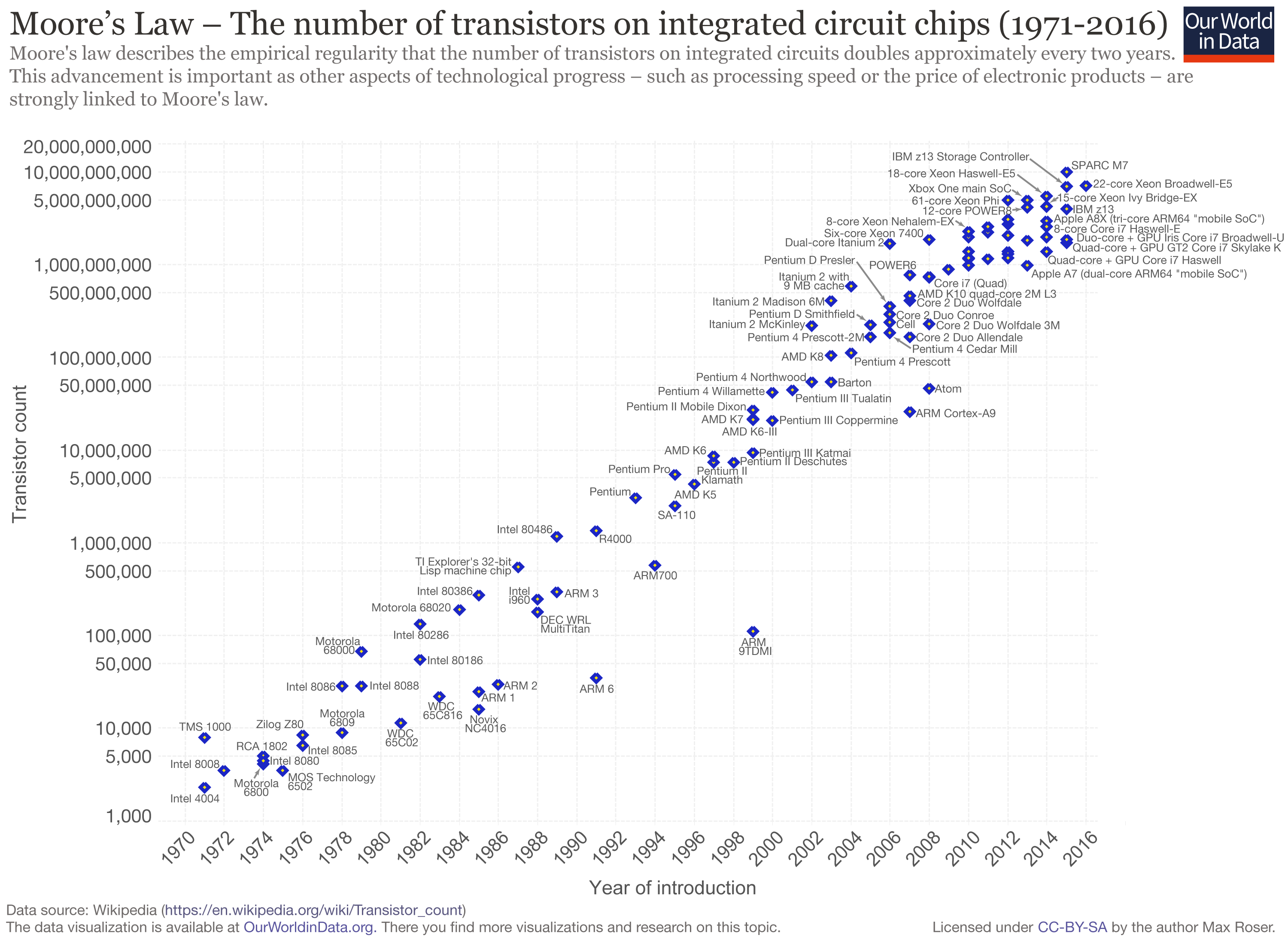

In 1965, Gordon Moore, then director of research and development at Fairchild Semiconductor (he would co-found Intel three years later), published a paper describing a simple observation: every year, the number of components on an integrated circuit (computer chip) seemed to double. This roughly corresponds to a doubling of performance, as manufacturers can fit twice the “computing power” onto a chip of the same size. Ten years later, Moore revised his estimate to a doubling roughly every two years, and around the same time Caltech professor Carver Mead popularized the principle under the name “Moore’s law”. Although current technology is approaching the physical limits of miniaturization (quantum effects set a practical minimum size for a transistor), Moore’s law has more or less held throughout the last four and a half decades.
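To get a feel for how quickly a steady doubling compounds, here is a minimal Python sketch. It assumes an idealized model in which component count doubles over a fixed period; the function name `growth_factor` is purely illustrative and not from any library.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Return the multiplicative growth after `years`, assuming a constant
    doubling period (an idealized, hypothetical Moore's-law model)."""
    return 2 ** (years / doubling_period_years)


# A one-year doubling period, as in the 1965 observation, sustained for ten years:
print(growth_factor(10, 1))  # 1024.0

# A two-year doubling period, the figure most often quoted today,
# sustained for 45 years:
print(round(growth_factor(45, 2)))  # roughly 5.9 million
```

Even the slower two-year rate multiplies chip complexity by a factor of millions over four and a half decades, which is why a fixed doubling period feels so dramatic in practice.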

Accelerating returns

This performance trend represents exponential growth over time. Exponential change underpins Ray Kurzweil’s “law of accelerating returns”: in the context of technology, accelerating returns mean that a technology improves at a rate proportional to its current quality, so the better it gets, the faster it improves. Does this sound familiar? It is exactly the kind of acceleration we anticipated in our opening scenario. This is the idea behind a technological singularity: once the conditions for accelerating returns are met, the advances they bring begin to spiral beyond our understanding and, quite likely, beyond our control.
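Taking “a rate proportional to its quality” literally gives the simplest possible model. Here $Q(t)$ is a hypothetical measure of a technology’s quality at time $t$, $Q_0$ its starting value, and $k > 0$ an assumed constant; none of these symbols come from Kurzweil’s own formulation. Separating variables and integrating shows that the result is exponential growth with a fixed doubling time, the same pattern Moore’s law describes:

```latex
\frac{dQ}{dt} = kQ
\;\Longrightarrow\;
\int \frac{dQ}{Q} = \int k \, dt
\;\Longrightarrow\;
\ln Q = kt + C
\;\Longrightarrow\;
Q(t) = Q_0 \, e^{kt},
\qquad
T_{\text{double}} = \frac{\ln 2}{k}
```

Because $T_{\text{double}}$ does not depend on $Q$, each doubling takes the same amount of time no matter how advanced the technology already is; in absolute terms, though, every successive doubling adds more than all previous progress combined.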

Losing control

Because AI will almost certainly run on some digital computer substrate, the concept of accelerating returns applies readily to AI. Losing control of an exponentially accelerating machine intelligence, however, could have catastrophic consequences. In his excellent TED Talk, the world-renowned philosopher Nick Bostrom discusses the “control problem” of general AI and suggests that, though machine superintelligence may still be decades away, it would be prudent to address its lurking dangers as far in advance as possible.

Nick Bostrom delves into the existential implications of machine superintelligence for humanity. Source: TED

In his talk, Bostrom draws a striking analogy: “The fate of [chimpanzees as a species] depends a lot more on what we humans do than on what the chimpanzees do themselves. Once there is superintelligence, the fate of humanity may depend on what the superintelligence does.”

— Ricky C.