I’m using this post to note a few resources on ethical principles for educational technology that I haven’t yet discussed in my series on ethics & ed tech. At some point I will write about these, or at least synthesize them with the others I’ve reviewed so far.

This post will be updated over time. It’s a way for me to keep track of things I want to look into more carefully and/or collate with other principles. Eventually I’d like to map out the principles that are common across these sets, and also pay attention to those that rarely appear in existing sets.

I also maintain a Zotero library on the ethics of educational technology and artificial intelligence.

Ethics in Ed Tech

Ethical Ed Tech Workshop at CUNY

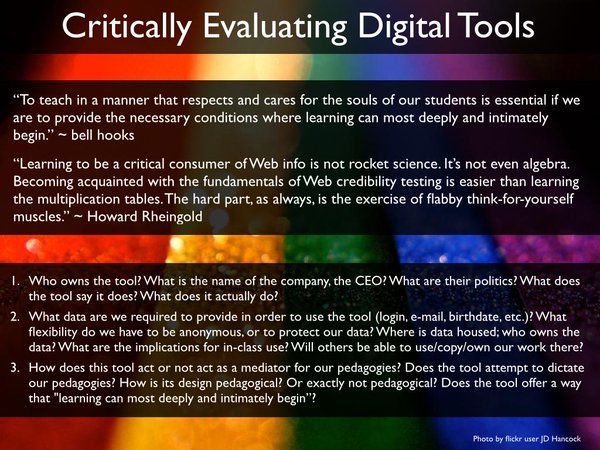

This web page has information and resources for a workshop on Ethical Approaches to Ed Tech, by Laurie Hurson and Talisa Feliciano, held as part of a Teach@CUNY 2020 Summer Institute. It includes a handout for workshop participants that lists the following categories of questions to ask about ethics & ed tech:

- Access

- Control

- Data

- Inclusion

- Intellectual Property & Copyright

- Privacy

- Source

See the handout for more details!

UTS Ed Tech Ethics Report

The University of Technology Sydney (UTS) went through a deliberative democracy process in 2021 to address the following question:

What principles should govern UTS use of analytics and artificial intelligence to improve teaching and learning for all, while minimising the possibility of harmful outcomes?

A report on the process and the draft principles was published in 2022. The categories of principles in that report are:

- Accountability/Transparency

- Bias/Fairness

- Equity and Access

- Safety and Security

- Human Authority

- Justifications/Evidence

- Consent

Again, see the report for more details; the principles are in the Appendix.

Ethics in Artificial Intelligence

EU Ethical Guidelines on AI

In October 2022 the European Commission published a set of Ethical guidelines on the use of artificial intelligence and data in teaching and learning for educators.

The categories of these principles are:

- Human agency and oversight

- Transparency

- Diversity, non-discrimination, and fairness

- Societal and environmental wellbeing

- Privacy and data governance

- Technical robustness and safety

- Accountability

See the PDF version of the report for more detail.

UNESCO Recommendations on the Ethics of AI

In 2022, UNESCO published a report about ethics and AI as well. The main categories of their ethical principles are:

- Proportionality and do no harm

- Safety and security

- Fairness and non-discrimination

- Sustainability

- Right to privacy, and data protection

- Human oversight and determination

- Transparency and explainability

- Responsibility and accountability

- Awareness and literacy

- Multi-stakeholder and adaptive governance and collaboration