As noted in the previous post on this blog, I’m reviewing some resources on ethics of educational technology (aka learning technology). In that post I did a short summary and some reflections on the UK’s Association for Learning Technology’s Framework for Ethical Learning Technology. That framework is made up of fairly broad principles that can form a very useful foundation for self-reflection and discussion about ethical approaches to learning technology decisions and practices.

In this post, I’m going to consider a couple of sets of questions that can guide reviews of specific educational technology tools: (1) a rubric by Sean Michael Morris and Jesse Stommel that has been used and refined in several Digital Pedagogy Lab Institutes, and (2) a tool to help with analyzing the ethics of digital technology that Autumm Caines adapted from another source for an Ed Tech workshop at the 2020 Digital Pedagogy Lab Institute.

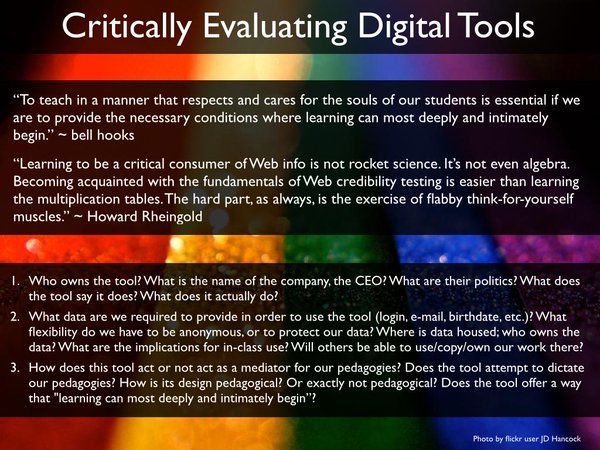

Morris & Stommel, Rubric for Critically Evaluating Digital Tools

This rubric comes from Morris & Stommel (2017), where they describe a “crap detection” exercise they have used in Digital Pedagogy Lab Institutes, asking participants to review and compare various learning technology tools on a particular set of questions.

Rubric for evaluating learning technology tools, by Morris and Stommel, licensed CC BY-NC 4.0

The slide above includes the following questions as ethical considerations one could use when reviewing one or a small number of specific learning technology tools:

- Who owns the tool? What is the name of the company, the CEO? What are their politics? What does the tool say it does? What does it actually do?

- What data are we required to provide in order to use the tool (login, e-mail, birthdate, etc.)? What flexibility do we have to be anonymous, or to protect our data? Where is data housed; who owns the data? What are the implications for in-class use? Will others be able to use/copy/own our work there?

- How does this tool act or not act as a mediator for our pedagogies? Does the tool attempt to dictate our pedagogies? How is its design pedagogical? Or exactly not pedagogical? Does the tool offer a way that “learning can most deeply and intimately begin”?

Morris and Stommel note in the article that they have since added another set of questions, around accessibility:

- How accessible is the tool? For a blind student? For a hearing-impaired student? For a student with a learning disability? For introverts? For extroverts? Etc. What statements does the company make about accessibility?

They also note that the point of using the rubric is not necessarily to do a takedown of specific tools but to encourage participants to think more deeply about the tools they use, or may consider using (and requiring students to use): it is “a critical thinking exercise aimed at asking critical questions, empowering critical relationships, encouraging new digital literacies” (Morris & Stommel 2017).

In a brief summary, this rubric focuses on:

- The companies behind the tools, including what they say juxtaposed to what is actually the case (regarding what the tool does, regarding accessibility)

- Usage and ownership of data

- Privacy and anonymity

- Relationship between the tool and pedagogical design and support of learning

- Accessibility of the tool

In the reflections I have done so far on learning technology ethics, I have often considered topics in the areas of usage and ownership of data, privacy, and accessibility (among other things), and I appreciate that this rubric also includes a clear focus on the pedagogical value of the technology. Clearly, learning technology should support student learning; that is fundamental to evaluating tools. But reading through this rubric is making me think: might more evaluation of pedagogical effectiveness be needed than is often done before adoption? I can say with certainty that I haven’t myself done a thorough review of all learning technology I’ve used as an educator. Is it enough that I have a sense that a certain tool, or kind of tool, could be useful, and that my students seem to like it?

I also appreciate the question about whether the design of the tool constrains or drives the pedagogical approach. In some ways, this is probably always going to be the case: when we are using any technology (even a chalkboard) this will affect our pedagogy. But it may be that some kinds of tools are more or less constraining of pedagogical approach. For example, on a chalkboard I can write words and equations, and draw diagrams, maps, or images. I can do the same when I create quiz questions on a digital or paper document. With a physical clicker, by contrast, I can only create questions that are multiple-choice.

Finally, the first couple of questions in the rubric are striking—asking about the company, the CEO, and their politics—just because I haven’t seen these elsewhere. But it makes sense to consider not just the tool, but the company that created it. It’s important to know who is behind the tools one is using (it’s not always obvious) and what their past and current practices are. Are those practices ethical? Do they support equity and inclusion? Do they respect autonomy and agency for users? Are they open to and responsive to criticism?

This rubric is not necessarily comprehensive (there are many more ethical questions one could ask about learning technology tools), but I don’t think that is the point. It is a set of questions to use to start reflections and discussions, not necessarily end them.

Caines, “Is this technology ethical?”

Autumm Caines put together another set of questions for an Ethical Ed Tech workshop at the 2020 Digital Pedagogy Lab, the Ethical Analysis of Educational Technology Tool. Like the rubric above, it’s designed for review of specific learning technology tools. It’s adapted from Krutka, Heath, and Willet (2019), which focused on “technoethical questions” that educators of teacher candidates should consider when teaching about use of educational technology.

The tool begins by asking not only for the name of the tool but also the company that created it, again emphasizing the importance of reviewing who is behind the technology we are using or considering. It then asks a series of critical questions with space left open for reflections, reasons, and evidence.

The questions in the rubric are:

- Was the tool designed ethically? Consider whether it was designed to be: transparent, equitable, safe, healthy, democratic.

- Are laws that apply to the use of the technology just?

- Does the technology afford or constrain democracy and justice for all people and groups? E.g., “Consider exploring how technology privileges or marginalizes students at the intersections of identity (e.g., race, language, culture, gender, sexual orientation, class, disability, religion, age).”

- Are the ways the developers profit from this technology ethical? “Ask yourself – what kind of company is this? How do they make money?”

- What are unintended and unobvious problems to which this technology might contribute? E.g., quoting Neil Postman, “Which people and what institutions will be most harmed by this new technology?”

- In what ways does this technology afford and constrain learning opportunities?

With the last item, this list too focuses on the pedagogical value of learning technology, specifically asking not only in what ways a tool may support learning, but also how it may constrain it. I think the latter is important to consider: a tool may help with some pedagogical goals and outcomes, making some easier or more effective, but at the same time it may foreclose others. I know that I tend to focus more on the former than the latter, so keeping both in mind while evaluating matters.

A simple example is classroom response tools. While physical clickers opened up useful learning possibilities such as being able to answer a question, discuss in pairs or small groups, learn from peers, then re-answer, they also constrained the type of questions that could be asked to multiple-choice or true/false. Later versions of classroom response systems allow more question types, including free text, word clouds, sliding scales, adding hot spots to images, and more; but one is still constrained to the question types that the tool already offers. Another example is using collaborative documents such as shared Word docs in Microsoft Teams, where students can work on a document in real time or asynchronously. These can allow for very powerful learning opportunities, but word processing documents tend to constrain us into linear organization, top to bottom. For more freedom in organization, one could use mind maps or a whiteboard app such as Miro.

Of course, every technology has constraints: there are things one can and can’t do with a pen and paper, a chalkboard, a document camera, slides, and so on. But it’s important to pay attention to what boundaries the tool is putting on the activities one is doing in one’s courses that are based on decisions made by the vendor, and then decide if it’s still worth using the tool.

This is related to point 5, which asks us to consider what “unobvious” problems the tool may contribute to: problems that may not have been intended and may otherwise stay hidden if we don’t specifically ask this question. Whereas point 6 asks about what learning opportunities may be constrained, this one is more directly about harm: who will be harmed, and most harmed, by the technology? To me, this immediately also connects to point 3, which asks us to consider equitable participation, marginalization, and injustice for some people or groups that can be exacerbated by the use of technology.

One concern is equitable access, which can be an issue for people whose access to the internet is low bandwidth (perhaps because of location, or ability to afford high speed internet connections)—some tools require high bandwidth to work well, including most anything having to do with video. One thing that the Learning Technology Hub at UBC Vancouver has started to do is to put bandwidth requirements on top of their tool guides for instructors. For example, the iClicker Cloud Tool Guide says there is low demand on internet connections, whereas the Kaltura Instructor Tool Guide and the Microsoft OneDrive Tool Guide say these have high demand.

Another way access may be inequitable is for users with disabilities. While many tools may be accessible in some respects (see, e.g., the Accessibility standards and features page for the Canvas LMS), there is also the question of whether content put into the tools by users is itself accessible. For example, the Canvas LMS may have many accessibility features in the platform itself, but are the documents, slides, videos, or other resources that faculty or students upload into the platform accessible? Have faculty used the built-in accessibility checker when creating pages on Canvas? My own view is that there is a good deal of work yet to be done in this area.

Biases in learning technology that uses AI algorithms have been widely discussed in recent years; e.g., Coghlan, Miller, and Paterson (2021) discuss ethical principles and concerns related to online proctoring, including bias that can result from machine learning algorithms used in software that requires facial detection. One major concern is that such tools can fail to detect the faces of people with darker skin, leading to increased stress for such students who have the burden of taking more time to try to get the system to “see” their faces, to adjust lighting, and to talk to support people to try to troubleshoot the problem. Another problem noted by Coghlan, Miller, and Paterson is that the tools can be biased towards neurotypical behaviour, by algorithmically “flagging” behaviour in student recordings such as “abnormal” head and eye movement. Such ethical concerns lay behind motions in 2021, first from the UBC Vancouver Senate Teaching and Learning Committee and then from the Vancouver Senate, to restrict the use of online proctoring tools that use algorithmic analysis of recordings to only those courses in programs that require such tools for accreditation.

Point number 1, above, asks some similar questions about product design: was it designed to be “transparent, safe, healthy, democratic”? It’s interesting to me that this list of points includes both the actual impact of the technology when in use (point 3) and what the tool was designed to do, whether or not it succeeds. I hadn’t been thinking as much about the latter as the former, when reflecting on ethics in learning technology, but of course both are important. One example of something designed not with safety or health in mind, as noted by Krutka et al. (2019), is the way that many social media platforms have videos autoplay by default: when you come across a video in your feed it will start playing whether it’s something you want to see or not. Another example is that many social media platforms do not have a useful way to provide content warnings that block people from seeing or hearing things they would rather not.

Mastodon is one very good exception: a content warning placed by the person posting a message on Mastodon blocks the content unless the recipient specifically clicks on it. Mastodon is also a decentralized social platform: there are many different Mastodon communities with their own guidelines and rules, and choices on which other communities they will connect with in the federation of communities. Many of these have signed onto the Mastodon Server Covenant which, among other things, requires the communities that sign on to commit to “Active moderation against racism, sexism, homophobia and transphobia.” There is also a fairly robust set of actions that users can take on their own to filter out problematic content. This is a design approach meant to help support safety from harm and abuse (imperfect, but better than, say, Twitter in my opinion).

Point 4, about whether the way the company generates profit is ethical, resonates with the questions asked in Morris & Stommel about the vendors behind the learning technology products. Point 2 brings in something different by asking about the laws governing use of the technology, and whether they are just. This is particularly intriguing to me; some discussions I’ve been having with folks at our institution revolve around treating legal requirements about privacy (for example) as a baseline, with the view that privacy considerations should go further than that (though what these will look like is yet to be discussed, and likely to be complicated). Asking whether laws related to the use of learning technology are just prompts us to consider, in part, whether they go far enough. But it also prompts us to consider whether the laws are problematic in other ways, and thus whether it behooves us to work towards changing them.

Conclusion

Well, I have continued my long tradition of writing very long blog posts with this one. I will end by summarizing overlaps between the sets of questions discussed in this post and the Association for Learning Technology’s Framework for Ethical Learning Technology (FELT) discussed in my previous post.

I think the questions here tie to at least the following points from ALT’s FELT:

- Respect the autonomy and interests of different stakeholders

- Promote fair and equitable treatment, enhancing access to learning

- Develop learning environments that are inclusive and supportive

- Design services, technologies to be widely accessible

- Minimise the risk of harms

The set of questions from Caines (based on Krutka et al. (2019)) also relates somewhat to the following principle, except that this set of questions goes further and asks whether relevant laws are themselves just:

- Ensure practice complies with relevant laws and institutional policies

The questions discussed in this post that aren’t represented in the FELT directly (though perhaps indirectly through some of the broader principles in the framework) include those that focus on companies behind the technology, privacy, and use and ownership of data.

Here is the next post in this series, Ed Tech Ethics part 3.