In the foreseeable future, Starbucks will hire robots as baristas, airports will station robots at the boarding gates, and of course, humans will no longer drive their own cars. Much of the progress in science and technology is driven by the desire to make daily life easier, and humans have been dreaming about self-driving cars since the 1920s. Autonomous vehicles (AVs), also known as self-driving cars or robotic cars, have developed incredibly fast in the past decade. In fact, California is ready to put AVs into use next year, but are we really ready for robots driving on the road?

“A Google self-driving car” by Michael Shick. Image from Wikimedia Commons. CC BY-SA 4.0

AVs are designed to reduce traffic accidents, but how will the robots decide when they are forced to choose between two evils, such as running over pedestrians to protect the passenger or sacrificing themselves and the passenger in the car to save the pedestrians? This hypothetical situation, also called the Trolley Problem, requires a moral decision. A study showed that humans make such decisions based on emotional engagement, yet robots can only follow pre-defined algorithms. It is doubtful that AVs should be introduced into our lives before we can trust robots to make moral choices.

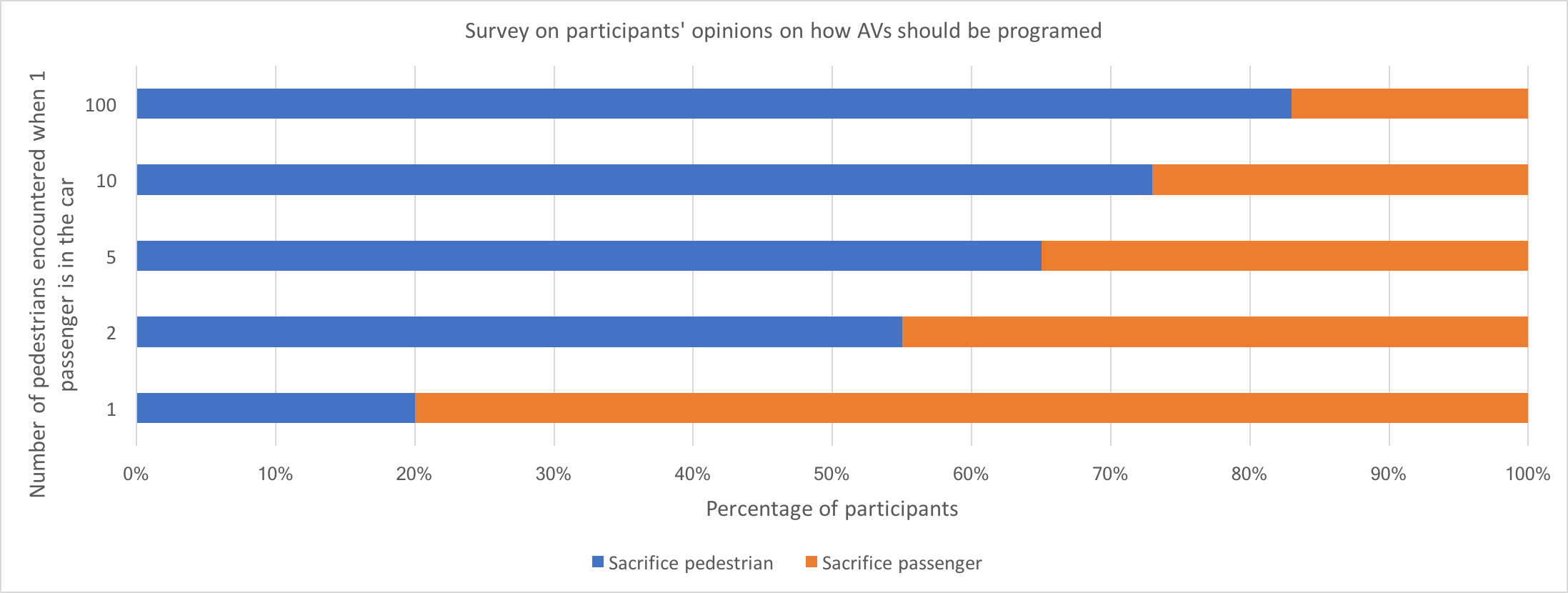

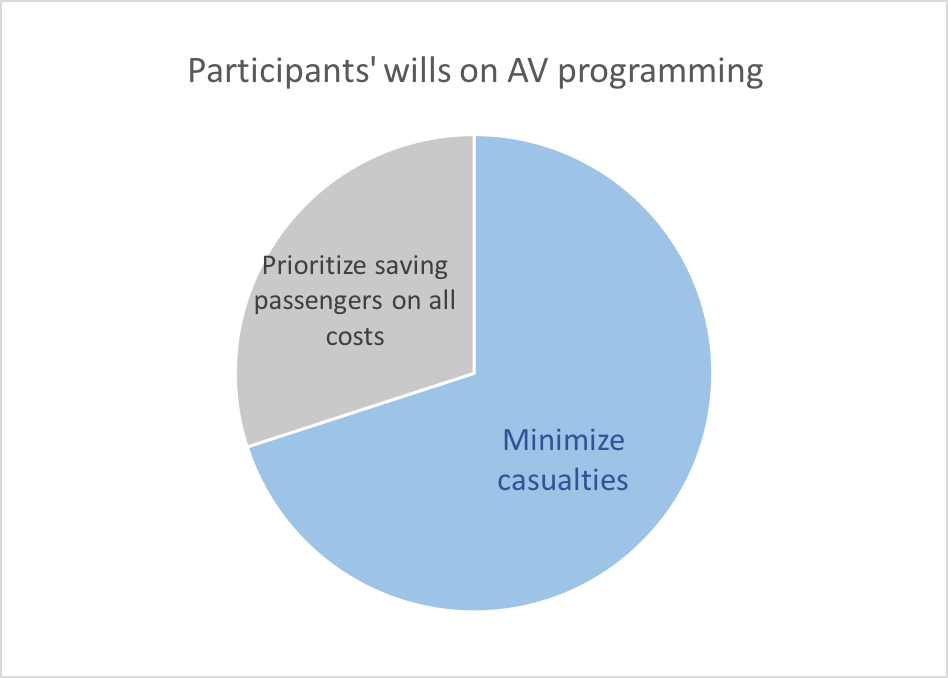

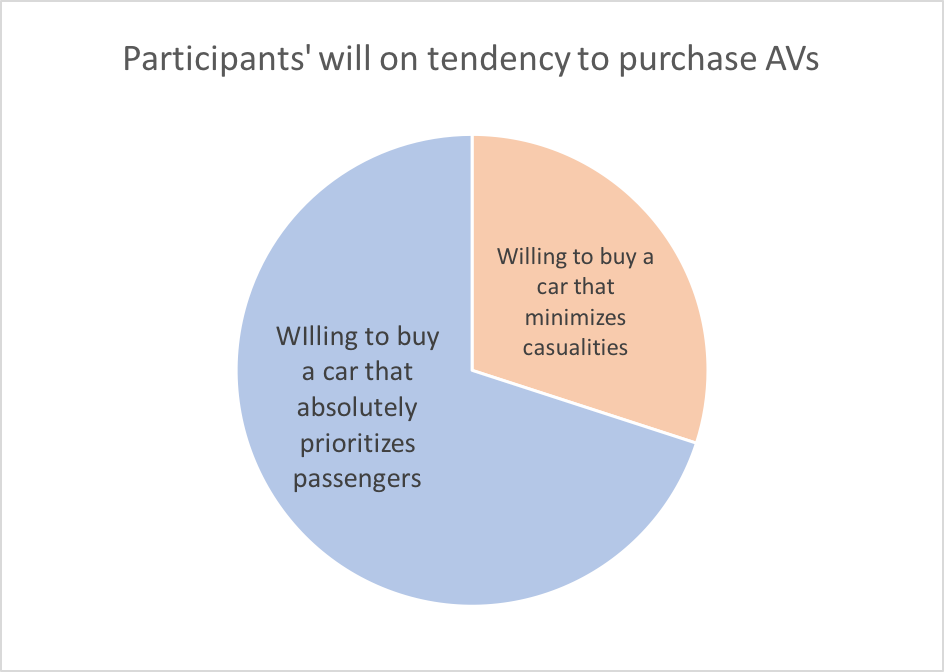

A study conducted online surveys asking participants how AVs should be programmed when facing moral decisions and how likely they would be to buy AVs that were programmed as they wished. The results are summarized and visually presented below:

Figure 1. Survey in the study. Image created by Zhou Wang, the author.

Figure 2. Participants’ preferences on AV programming. Image created by Zhou Wang, the author.

Figure 3. Participants’ willingness to purchase AVs. Image created by Zhou Wang, the author.

Figure 2 shows that survey participants increasingly agree to sacrifice the passenger in the car to save the pedestrians as the number of pedestrians increases. On the other hand, figures 3 and 4 show that even though the majority agrees to program AVs to sacrifice the passenger when more pedestrians can be saved, the majority of the same group is unlikely to purchase an AV if the passenger’s life is not absolutely prioritized.

When the participants imagined themselves as pedestrians, most of them were rational and thought the robots should sacrifice the passenger as long as more pedestrians were saved. Conversely, when they considered themselves as consumers buying AVs, they wanted the AVs to protect them at all costs.

The contradictory results are not surprising, as the participants were always trying to maximize their own benefit. However, the results do suggest that our society may not be ready to let robots make moral decisions about whose life to prioritize, because it is unclear how the AVs should be programmed. Until safer and more advanced technology is available, we may not be ready to trust robots to drive next to us.

YouTube: TEDx talk about driverless cars

-Zhou Wang
