You may have been told that you’re “not the center of the universe”, or perhaps have even used the phrase before. Well, a recently developed model of the universe indicates that this may not be entirely true. In fact, on a basic level, everyone may be able to consider themselves the center of their own universe. Not only that, but what we’re seeing in the night sky may not be what is actually happening up there. So what exactly is going on?

A Spitzer Space Telescope image of Messier 81, a grand design spiral. Source: Wikimedia Commons

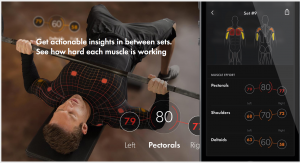

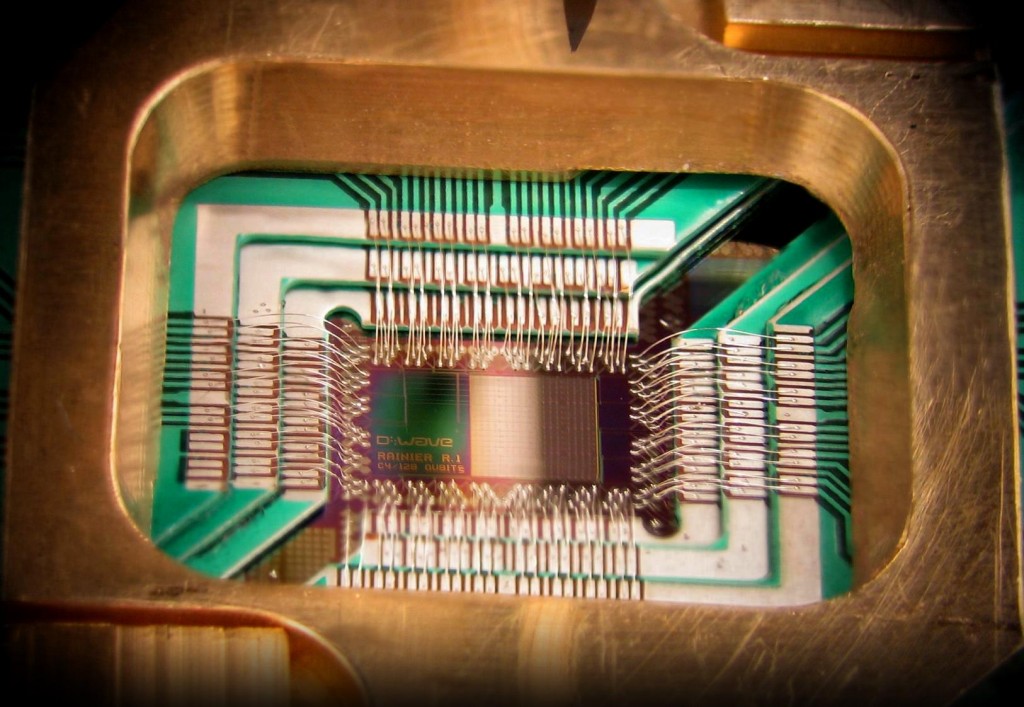

Dr. Daniel Carney, a theoretical physicist at the University of British Columbia, studies quantum gravity in a cosmological setting; he focuses on how the basic laws of physics can be applied to the whole universe at once. However, combining physics and cosmology (the study of the entire universe through all of time) is challenging, as the rules that apply in physics may not hold true for the infinite and changing universe. Therefore, models (such as the one Daniel Carney and co-author Willy Fischler developed) are our best hope at explaining what is happening in our observable space.

Observable space is what you can see from your own point of view, and it differs for each person depending on their location. Each observer also has an individual event horizon, which is the furthest point at which objects in space can still be identified; beyond this, nothing can be seen. When an object reaches the event horizon, Carney states that it will spread evenly across the entire horizon, instead of disappearing from the observer’s view. This spreading – called “scrambling” – makes it difficult for observers to tell where the actual object is located. However, the object’s information is scrambled, not lost, so it is still possible in principle to determine where the actual object is located.

But what happens over time? It turns out that a process called “de-scrambling” – a term coined by Carney and Fischler – occurs when the universe expands more slowly and an object’s information comes back together. Because the universe expands at different rates at different times, there are periods when an object’s information scrambles and periods when it de-scrambles. Carney adds, “The further claim is that if you could do this through all of cosmological history, you might see things pancaking, and then coming back together, and then pancaking… But critically, you never lose the information, it’s always there at the end of the day.”

For a visual representation of Daniel Carney’s notion of scrambling and de-scrambling, watch this video:

Although we can’t see it happening during our lifetimes, objects in space that we view as unchanging may actually be in the process of scrambling or de-scrambling. Furthermore, what we observe in space is different for all of us: each individual has their own personal “bubble” from which they observe the universe. So it really is as if we’re each the center of our own universe.

For more information on what we observe in the night sky, listen to our podcast below:

Posted by: Navjit Moore, Corey Wilson, Matthew Leupold, and Krystyna Pangilinan