“This is a revolution not unlike the early days of computing. It is a transformation in the way computers are thought about.”

– Ray Johnson, Lockheed Martin

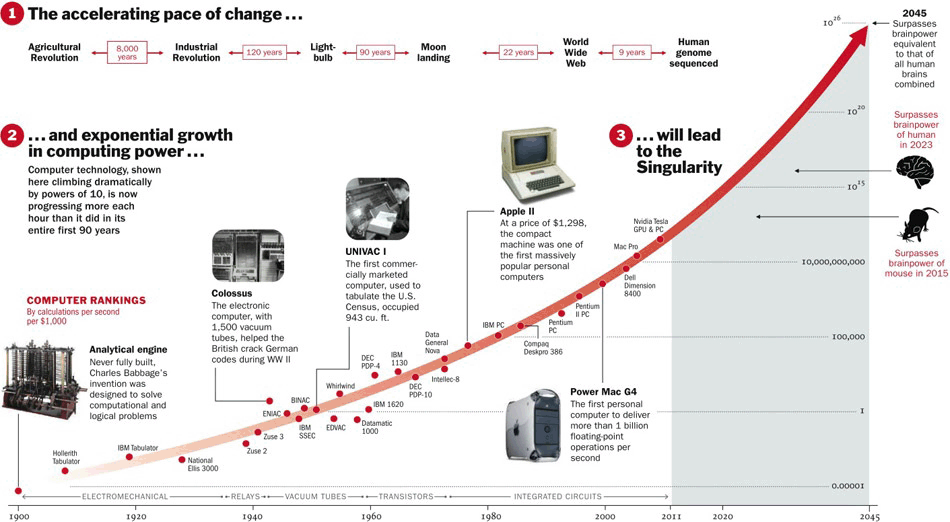

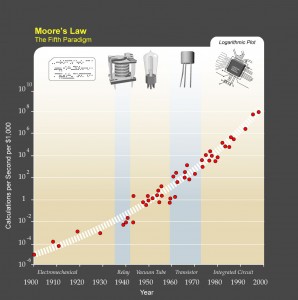

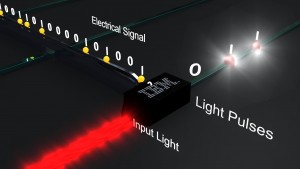

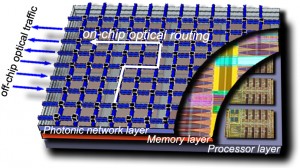

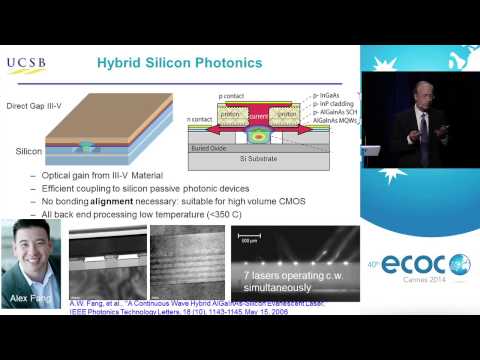

In Part One of this series, we discussed how Photonics could extend Moore’s Law by allowing conventional computers to send information at the speed of light. In Part Two, we discussed how Graphene could extend Moore’s Law by creating computers that could operate thousands of times faster, cheaper, cooler, and more environmentally friendly. But what if the solution to Moore’s Law isn’t harnessing a new technology or implementing some new material? What if the only way to make Moore’s Law obsolete is to go back to the drawing board and rethink how information is computed? Welcome to the world of Quantum Computing.

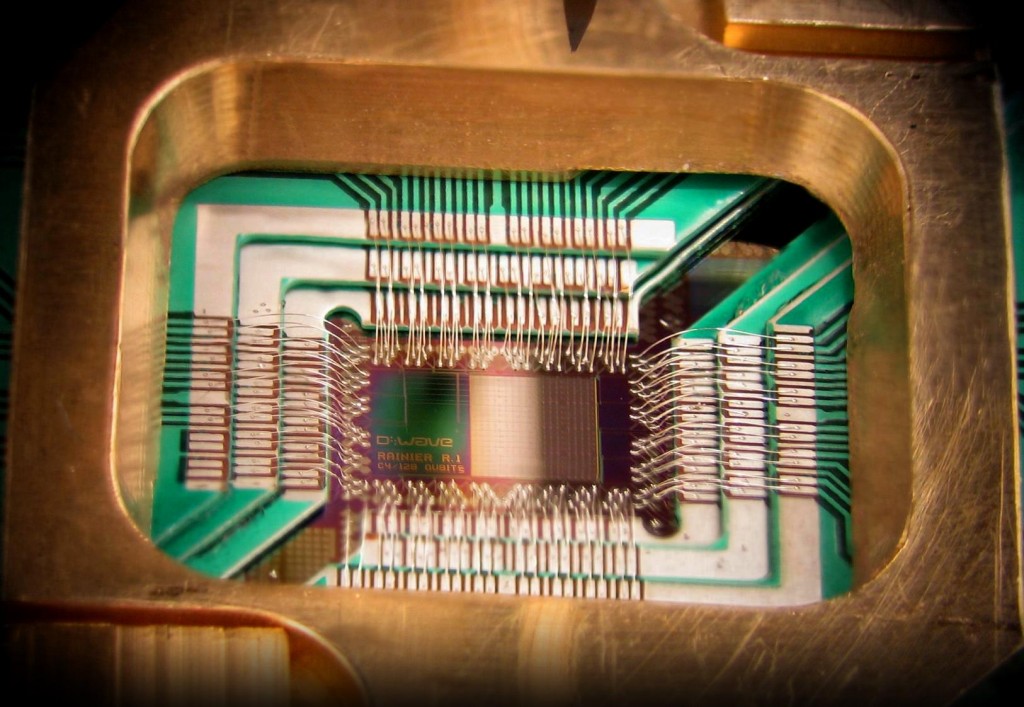

A chip constructed by D-Wave Systems, a company in Burnaby, B.C., designed to operate as a 128-qubit quantum optimization processor. Credit: D-Wave Systems (Wikimedia Commons)

To appreciate the impact of Quantum Computing, we first need to understand how it differs from classical computing. For a good overview, please watch the following short explanation by Isaac McAuley.

Now, with a better understanding of Quantum Computing and how it differs from classical computing, we can ask, “Why is this development so important?”

To answer this, consider that Quantum Computers could solve certain problems much more efficiently than our fastest classical computers. For instance, suppose you have a budget for groceries and you want to work out which items at the store will give you the best value for your money; this is the kind of task a quantum computer could, in principle, solve much faster than a classical one.

But let’s try a less trivial example. Take that very same problem, but now suppose you are a hydro company: you have a limited amount of electricity to supply your entire city with, and you want to find the best way of providing electricity to everyone in the city at all hours of the day. Even further, suppose you are a doctor who wants to destroy as much of a patient’s cancer as possible with radiation, using the smallest amount of radioisotopes while doing the least possible harm to their immune system.

All of these are optimization problems that a quantum computer could solve at breakneck speed. Think about how much time and money is spent trying to solve these problems, and how much scientific progress could be made if all of them could be solved exponentially faster. For further consideration, check out the video below by Lockheed Martin (one of the first buyers of a Quantum Computer):
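To see why a problem like the grocery example gets expensive for ordinary computers, here is a minimal classical sketch. The item names, prices, value scores, and budget are all made up for illustration, and the function `best_basket` is hypothetical. It simply tries every possible basket, and the number of baskets doubles with each item added. This is not how a quantum computer (D-Wave’s or anyone else’s) would attack the problem; it only shows the cost of the brute-force approach.

```python
from itertools import combinations

# Hypothetical shop: (item, price in dollars, "value" score). Made-up numbers.
ITEMS = [
    ("rice", 4, 6),
    ("eggs", 3, 5),
    ("apples", 5, 7),
    ("cheese", 7, 8),
    ("bread", 2, 3),
]

def best_basket(items, budget):
    """Check every subset of items (2**n of them) by brute force and return
    the highest-value basket whose total price fits within the budget."""
    best_value, best_choice = 0, ()
    checked = 0
    for size in range(len(items) + 1):
        for basket in combinations(items, size):
            checked += 1
            cost = sum(price for _, price, _ in basket)
            score = sum(v for _, _, v in basket)
            if cost <= budget and score > best_value:
                best_value, best_choice = score, basket
    names = [name for name, _, _ in best_choice]
    return best_value, names, checked

value, names, checked = best_basket(ITEMS, budget=12)
print(value, names, checked)  # 18 ['rice', 'eggs', 'apples'] 32
```

Five items already mean 32 baskets to check; 30 items would mean 2**30, just over a billion, which is why smarter methods (classical or quantum) matter for problems of this shape.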

Now that we are familiar with how Quantum Computing differs from classical computing, and with what it could do for scientific research, one might ask, “Why don’t we have Quantum Computers yet?” The simplest answer is that, while some Quantum Computers are for sale at exorbitant prices (the 128-qubit D-Wave One costs a hefty $10,000,000 USD), Quantum Computers remain highly prone to errors.

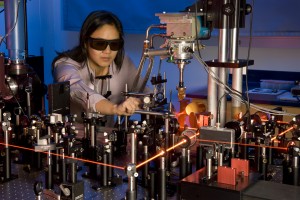

Recently, researchers at the Martinis Lab at the University of California, Santa Barbara developed a new technology that allows a Quantum Computer to check itself for errors without compromising how the system operates. One of the fundamental obstacles when working with Quantum Computers is that measuring a Qubit changes its state. Therefore, any measurement of a Qubit, such as checking that it stores the information you want, disturbs the very state you were trying to check and defeats the purpose of the system altogether.

Why? Well, because Quantum Physics, that’s why.
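A toy simulation can make this a little more concrete. The sketch below is a classical stand-in, assuming a single Qubit described by two real amplitudes (`alpha` for 0, `beta` for 1); the function `measure` is hypothetical and ignores everything real hardware has to deal with. Measuring returns 0 or 1 with probabilities given by the squared amplitudes, and afterwards the Qubit is left in whichever state was observed, so the original superposition is gone.

```python
import random

def measure(state, rng):
    """Measure one qubit given as amplitudes (alpha, beta) for 0 and 1.
    Returns the outcome and the collapsed state left behind."""
    alpha, beta = state
    p0 = abs(alpha) ** 2  # probability of reading 0
    if rng.random() < p0:
        return 0, (1.0, 0.0)  # collapsed onto 0
    return 1, (0.0, 1.0)      # collapsed onto 1

rng = random.Random(42)
plus = (2 ** -0.5, 2 ** -0.5)  # equal superposition of 0 and 1

outcome, after = measure(plus, rng)
print(outcome, after)
# Measuring again always repeats the first result: the superposition is gone.
print([measure(after, rng)[0] for _ in range(5)])
```

Checking the Qubit “to see that it stores the information you want” is exactly this kind of measurement, which is why naive error checking does not work.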

This new system allows Qubits to work together to preserve the information within them: the information is stored across several Qubits, which back up their neighbouring Qubits. According to chief researcher Julian Kelly, this new development gives Quantum Computers the ability to

“pull out just enough information to detect errors, but not enough to peek under the hood and destroy the quantum-ness”.
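The idea of neighbours backing each other up can be illustrated with a classical analogy. The sketch below is an assumption-laden simplification, not the Martinis Lab’s actual scheme: it copies one bit onto three bits and only ever compares neighbouring pairs (their parity). Those comparisons reveal whether and where a single bit flipped, but not whether the stored value is 0 or 1. Real quantum codes measure such parities using extra Qubits and must also handle error types that have no classical counterpart; the functions `encode`, `syndrome`, and `correct` here are hypothetical.

```python
def encode(bit):
    """Store one logical bit redundantly across three physical bits."""
    return [bit, bit, bit]

def syndrome(bits):
    """Compare neighbouring bits by parity only. The result says whether
    and where the copies disagree, but not which value they store."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])

# Which position a single flip must have hit, for each syndrome pattern.
FLIP_LOCATION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(bits):
    """Use the syndrome to undo a single bit flip."""
    loc = FLIP_LOCATION[syndrome(bits)]
    fixed = list(bits)
    if loc is not None:
        fixed[loc] ^= 1
    return fixed

for logical in (0, 1):
    noisy = encode(logical)
    noisy[1] ^= 1  # simulate an error on the middle bit
    print(logical, noisy, syndrome(noisy), correct(noisy))
```

Both runs report the same syndrome, `(1, 1)`, whether the stored bit is 0 or 1. That is the classical echo of Kelly’s point: the check is informative enough to find and fix the error, yet says nothing about the protected value itself.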

This development could give Quantum Computers the reliability they need to work as intended. It could also lower the price of current Quantum Computers, since most of the money spent on one goes into the environmental controls the machine is placed in to prevent errors from occurring.

If you are interested in learning more about Quantum Computing, I highly recommend the following articles as introductions to what will surely be a revolution in Computer Science:

1. Quantum Computing for Everyone, by Michael Nielsen (co-author of the standard textbook on Quantum Computing)

2. The Limits of Quantum, by Scott Aaronson (an MIT Professor of Computer Science), in Scientific American

3. The Revolutionary Quantum Computer That May Not Be Quantum at All, by Wired Science

If you have any questions, please feel free to comment. I hope you all enjoyed this three-part series on how the future of computation might surpass Moore’s Law. Whatever way you look at it, the future looks bright indeed!

– Corey Wilson