Over the years, animation has evolved from drawing frame by frame (creating sequences of pictures that produce the illusion of movement) to using specialized 3D software such as Maya or Pixar’s RenderMan. Creating 3D animation still involves the principles of 2D animation, but it also requires following a production pipeline similar to the ones used in many popular films and games today.

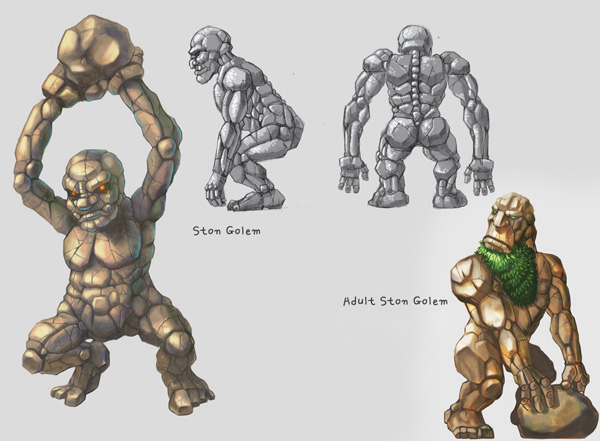

Character Concept: As in any creative project, everything starts with an idea. Creating a character concept begins by collecting the features that will define the character. In most cases, artists draw poses, shapes, and expressions that bring the character to life. This is a critical stage because every later stage builds on the concept, so a clear concept makes the rest of the pipeline much easier.

Modeling: Following the concept, modeling consists of shaping a figure or character, starting from a base polygon. A basic technique that many artists use is extruding (pushing faces or edges outward to create new geometry). In a 3D application such as Maya, this involves adding divisions to a polygon and then extruding its faces and edges until the desired shape is reached. Depending on the model, a digital sculpting tool such as ZBrush may be used to reach higher levels of detail and realism.
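To make extruding more concrete, here is a minimal Python sketch that extrudes a single face of a mesh along its normal. It is not how Maya implements the operation; it assumes a deliberately simple mesh representation (vertices as `(x, y, z)` tuples, faces as lists of vertex indices in counter-clockwise order, the extruded face being planar), and `extrude_face` is a hypothetical helper.

```python
# Minimal extrusion sketch. Assumptions: vertices are (x, y, z) tuples, faces are
# lists of vertex indices in counter-clockwise order, and the face is planar.

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    length = (v[0] ** 2 + v[1] ** 2 + v[2] ** 2) ** 0.5
    return (v[0] / length, v[1] / length, v[2] / length)

def extrude_face(vertices, faces, face_index, distance):
    """Hypothetical helper: push one face out along its normal and wall it in."""
    face = faces[face_index]
    p0, p1, p2 = (vertices[i] for i in face[:3])
    normal = normalize(cross(sub(p1, p0), sub(p2, p0)))

    # Copy every vertex of the face, moved `distance` units along the normal.
    new_vertices = list(vertices)
    new_indices = []
    for i in face:
        x, y, z = vertices[i]
        new_vertices.append((x + normal[0] * distance,
                             y + normal[1] * distance,
                             z + normal[2] * distance))
        new_indices.append(len(new_vertices) - 1)

    # Drop the original face, add one side wall per edge, then the moved cap.
    new_faces = [f for k, f in enumerate(faces) if k != face_index]
    n = len(face)
    for k in range(n):
        a, b = face[k], face[(k + 1) % n]
        na, nb = new_indices[k], new_indices[(k + 1) % n]
        new_faces.append([a, b, nb, na])
    new_faces.append(new_indices)
    return new_vertices, new_faces

verts = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)]  # a unit square facing +Z
faces = [[0, 1, 2, 3]]
verts, faces = extrude_face(verts, faces, 0, 2.0)
print(len(verts), len(faces))  # 8 vertices; 4 side walls + 1 cap = 5 faces
```

Repeating this on different faces, and scaling or moving the new caps, is how a simple box can gradually be shaped into a limb or a body.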

Texturing: Much like wrapping a present, texturing mainly consists of UV mapping (projecting a 2D image onto a 3D surface). Most 3D applications include tools that perform UV mapping almost automatically. However, to get a better projection, it is important to know that each tool uses a different projection method, and each method works best for a particular shape. For example, planar mapping works best on a flat surface, such as one side of a box, whereas spherical mapping is ideal for a round object such as a ball.
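The difference between planar and spherical projection can be sketched in a few lines of Python. This is a simplified illustration, not the algorithm of any particular 3D application: it assumes Y is the "up" axis, that planar mapping projects along Z, and that UV coordinates range from 0 to 1. `planar_uvs` and `spherical_uv` are hypothetical names.

```python
import math

def planar_uvs(points):
    """Planar mapping: drop the Z axis and fit X/Y into the 0..1 UV square."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    min_x, min_y = min(xs), min(ys)
    # Fall back to 1.0 so a degenerate (zero-width) face does not divide by zero.
    width = (max(xs) - min_x) or 1.0
    height = (max(ys) - min_y) or 1.0
    return [((x - min_x) / width, (y - min_y) / height) for x, y, _ in points]

def spherical_uv(point, center=(0.0, 0.0, 0.0)):
    """Spherical mapping: longitude around the Y axis -> U, latitude -> V."""
    x, y, z = (p - c for p, c in zip(point, center))
    radius = math.sqrt(x * x + y * y + z * z)
    u = 0.5 + math.atan2(z, x) / (2 * math.pi)
    v = 0.5 + math.asin(y / radius) / math.pi
    return (u, v)

# One side of a 2 x 2 box, lying flat in the plane z = 1.
side = [(0, 0, 1), (2, 0, 1), (2, 2, 1), (0, 2, 1)]
print(planar_uvs(side))         # [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
print(spherical_uv((0, 1, 0)))  # top of a unit ball: (0.5, 1.0)
```

Planar mapping fills the texture neatly for the flat side, but applied to a ball it would squash everything onto one plane; the spherical version wraps the image around by angle instead, which is why the shape of the model decides which projection to pick.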

Rigging: Before a character can be animated, it must be rigged (given a digital skeleton). Rigging is sometimes considered the middle stage of the animation process. It consists of building a skeleton from joints (the points where two parts of the skeleton are connected) that allow the polygon mesh to deform. In addition, controllers attached to specific joints act like the strings of a real-life puppet, letting the animator manipulate the character’s movement. Rigging is often considered a very technical process, and in some cases programming tools are used to achieve finer control over movement.
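The reason a joint hierarchy behaves like a puppet is that each joint inherits its parent's rotation (forward kinematics). The Python sketch below shows this on a 2D chain; it is a simplification that assumes rotation in a single plane and ignores skinning, and the `Joint` class and the shoulder/elbow/wrist names are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class Joint:
    name: str
    length: float       # distance to the next joint in the chain
    angle: float = 0.0  # rotation relative to the parent joint, in degrees

def forward_kinematics(chain, root=(0.0, 0.0)):
    """Return world-space positions of each joint, plus the tip of the chain."""
    x, y = root
    world_angle = 0.0
    positions = [(x, y)]
    for joint in chain:
        # Each joint adds its rotation to its parent's, so rotating the
        # shoulder carries the elbow and wrist along with it.
        world_angle += math.radians(joint.angle)
        x += joint.length * math.cos(world_angle)
        y += joint.length * math.sin(world_angle)
        positions.append((x, y))
    return positions

# Hypothetical three-joint arm.
arm = [Joint("shoulder", 2.0), Joint("elbow", 1.5), Joint("wrist", 0.5)]
print(forward_kinematics(arm)[-1])  # straight arm along X: tip at (4.0, 0.0)

arm[0].angle = 90.0  # rotate only the "shoulder" control...
print(forward_kinematics(arm)[-1])  # ...and the whole arm swings up, tip near (0.0, 4.0)
```

A rig controller in this picture is simply a handle that sets `angle` on one joint; everything below it in the hierarchy follows automatically.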

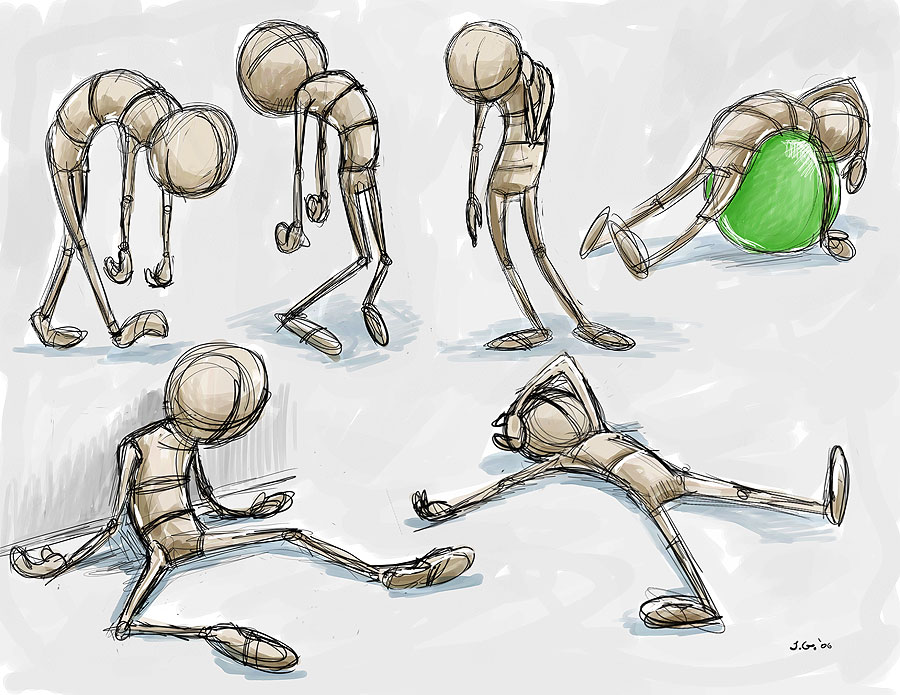

Posing: Based on the initial concept, posing shows animators how the character’s actions should look. For instance, a well-chosen pose makes it easy to tell whether the character is exhausted or cheerful.

Animation: Once rigging is finished, the animation process can start. Following the principles of classical animation such as timing, anticipation, and staging, an artist brings a 3D character to life by moving it through the rig controls created earlier. By comparison, 2D animation mostly requires drawing frame by frame to create movement, whereas 3D animation relies on keyframes (poses that define the start and end points of a smooth transition), and the software generates the frames in between automatically. Most animated films run at 24 fps (frames per second), while most video games run at 30 to 60 fps.
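The "frames in between" can be illustrated with a small Python sketch of keyframe interpolation. It uses straight-line (linear) interpolation to keep things short, whereas animation packages typically use curves that ease in and out by default, so treat it as an illustration of the idea rather than how any particular application works. `interpolate` and the keyframe dictionary format are assumptions for this example.

```python
def interpolate(keyframes, frame):
    """Linearly interpolate a channel value between the keyframes around `frame`.

    `keyframes` maps a frame number to the value set on that frame,
    e.g. {0: 0.0, 24: 10.0} for a control moving 10 units in one second at 24 fps.
    """
    frames = sorted(keyframes)
    if frame <= frames[0]:
        return keyframes[frames[0]]    # hold the first pose before the animation starts
    if frame >= frames[-1]:
        return keyframes[frames[-1]]   # hold the last pose after it ends
    for start, end in zip(frames, frames[1:]):
        if start <= frame <= end:
            t = (frame - start) / (end - start)   # 0.0 at `start`, 1.0 at `end`
            return keyframes[start] + t * (keyframes[end] - keyframes[start])

FPS = 24  # film frame rate; a game might use 30 or 60 instead
keys = {0: 0.0, FPS: 10.0}  # two keyframes one second apart
print([interpolate(keys, f) for f in range(0, FPS + 1, 6)])
# [0.0, 2.5, 5.0, 7.5, 10.0]
```

The animator only set frames 0 and 24; every frame between them is computed. At 60 fps the same one-second move would simply be sampled 60 times instead of 24.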

Rendering: Many of the final details are added at the rendering stage. By assigning materials that use a shader (a program that calculates how a surface responds to light), polygons can be made to look metallic, transparent, glowing, or diffuse (matte, because light is scattered evenly in many directions).
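As an example of what a shader computes, here is the diffuse case written as a Python sketch of Lambertian reflectance, a classic model for matte surfaces. Real shaders are written in dedicated shading languages and run per pixel on the renderer or GPU; this version assumes a single directional light, and `lambert_diffuse` and its `albedo` default are illustrative choices.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def lambert_diffuse(normal, to_light, albedo=0.8, light_intensity=1.0):
    """Diffuse brightness of a surface point under a single directional light."""
    n = normalize(normal)
    l = normalize(to_light)                  # direction from the surface toward the light
    n_dot_l = sum(a * b for a, b in zip(n, l))
    # Surfaces facing away from the light receive no direct light, hence max(0, ...).
    return albedo * light_intensity * max(0.0, n_dot_l)

print(lambert_diffuse((0, 0, 1), (0, 0, 1)))   # light straight on: 0.8
print(lambert_diffuse((0, 0, 1), (0, 1, 1)))   # light at 45 degrees: about 0.566
print(lambert_diffuse((0, 0, 1), (0, 0, -1)))  # light behind the surface: 0.0
```

Because the result depends only on the angle between the surface and the light, not on where the camera is, the surface looks equally bright from every viewpoint, which is what gives diffuse materials their matte appearance.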

Integration: Finally, once everything in the 3D animation is complete, the final render for an animated film is usually exported as a sequence of individual frames. In most cases, an external application such as After Effects or Final Cut Pro is then used to join the frames into the final version of the animation. If a character is meant to be used in a game, however, the procedure is different: the character is typically exported as an FBX file (a format that provides interoperability between digital content creation applications) and imported into a game engine such as Unity or Unreal.

In conclusion, making 3D animation may require a technical background in various software applications. However, like any other skill, it can be improved over time.

In the following video, a few differences between animating in 2D and 3D are explained in more depth. Feel free to check it out!

The Difference Between 2D and 3D Animation by Bloop Animation (Morr Meroz)