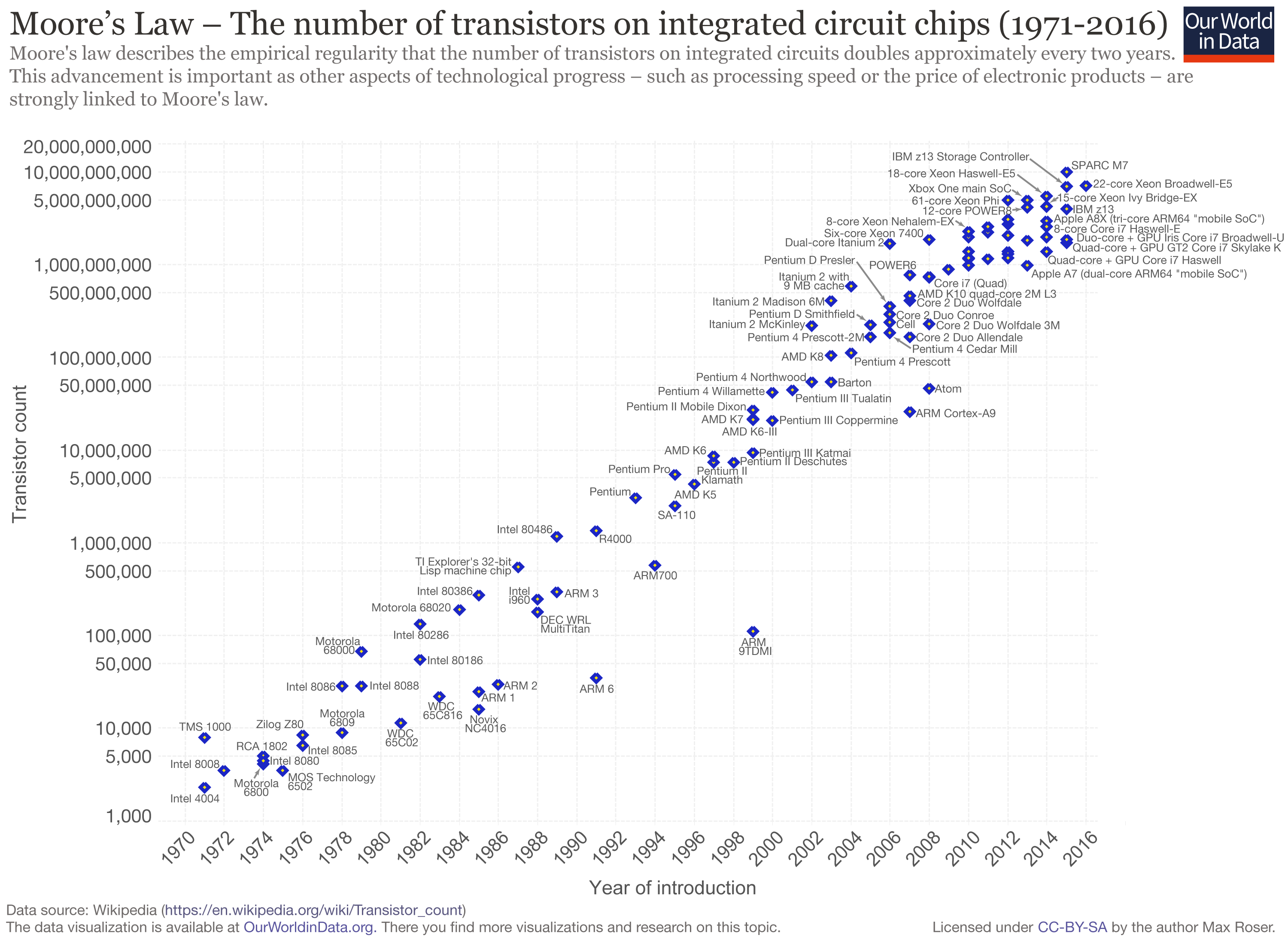

Many countries around the world are seeing a resurgence in preventable diseases such as measles and pertussis as a result of falling immunization rates. As of last week, there have been at least 18 confirmed measles cases in B.C. this year, which is more than double last year's total. If at least 95% of the population is properly vaccinated, the number of measles cases should theoretically be zero. However, a 2015 survey shows that only 89% of children under the age of two received the first dose of the MMR (measles, mumps and rubella) vaccine. For full immunity, it is recommended that children be immunized once at 12 months and a second time between the ages of 4 and 6.

An infant with measles. https://static.timesofisrael.com/www/uploads/2018/08/iStock-857285148.jpg

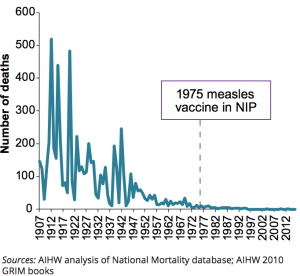

The measles vaccine has been around since 1963 and its effectiveness is clinically proven. Since its introduction, disease incidence has decreased from hundreds of thousands of cases annually to fewer than a hundred. And yet some parents are choosing not to immunize their children at all. This puts not only their children but the whole community at risk. So why are people reluctant to vaccinate their kids?

The current culture of vaccine hesitancy can be traced back to Andrew Wakefield and the autism scare. His paper linking autism to the MMR vaccine has since been retracted and thoroughly disproved, but it gained enough attention in the media and support from influential individuals to do lasting damage. When asked why they choose not to vaccinate, a significant number of parents still cite fear of autism or of other chronic health conditions caused by “toxic” chemicals in vaccines.

Jenny McCarthy and Jim Carrey – outspoken celebrity anti-vax advocates. https://jamieeathorne.files.wordpress.com/2012/11/jenny-mccarthy-2.jpg

There seems to be a lack of confidence in health care professionals and scientists, and it may have to do with how information is being communicated. The scientific community tends to view the general public as lacking knowledge on the topic, but simply laying out the facts is not enough to convince skeptical parents. Tone is also very important. Nobody wants to feel talked down to, yet this is sometimes the experience parents have with doctors.

The truth is that anti-vaxxers are not all the ignorant kale-munching mommy-bloggers that we sometimes like to think they are. In fact, non-compliant parents have often done more research. The problem is that there is a lot of misinformation and misrepresentation out there. Just look at the two graphs below:

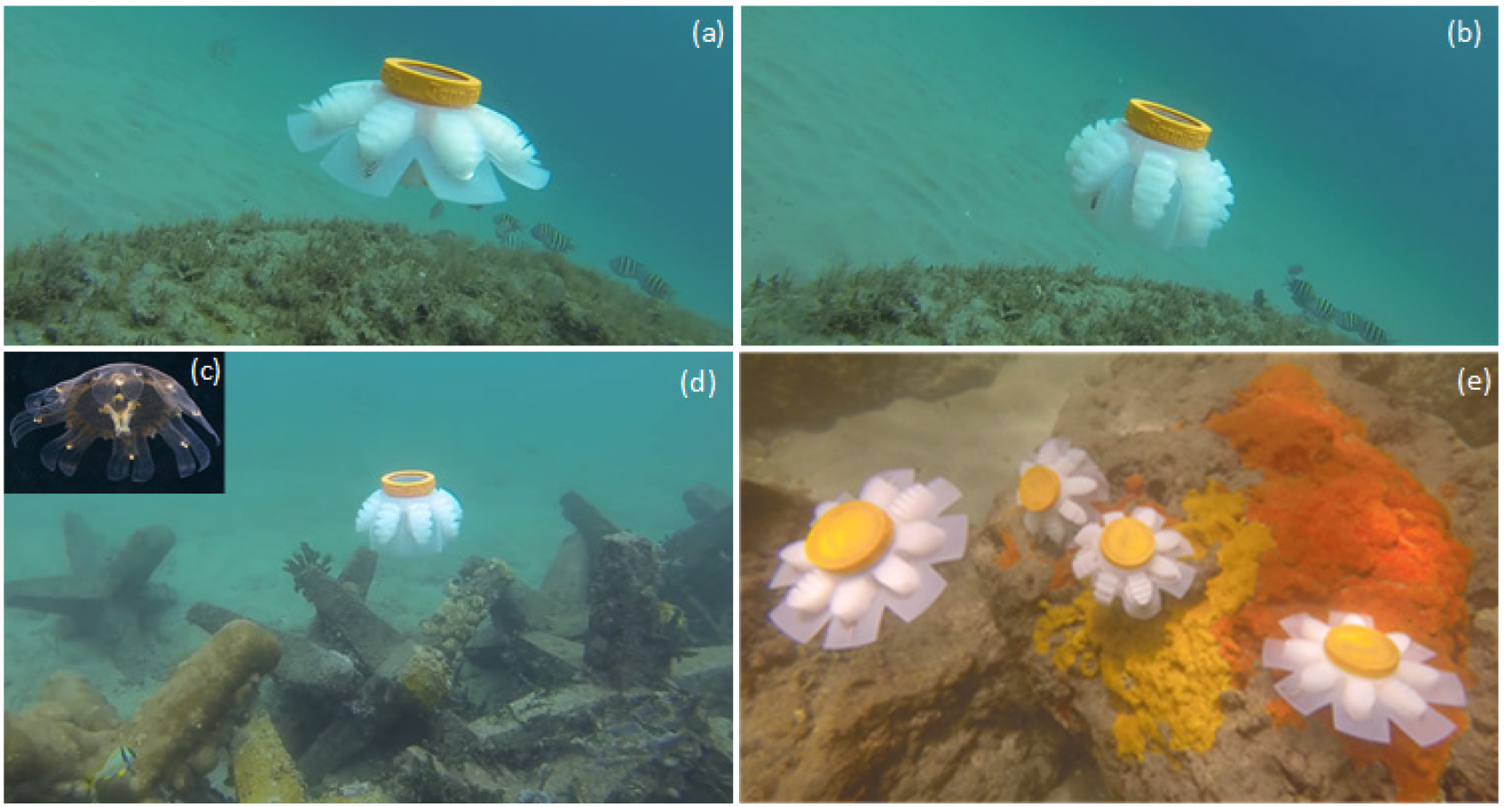

The first is from a prominent anti-vaccine site, Vaccine Liberation. This graph is trying to show that measles deaths in Australia were declining significantly well before vaccines were introduced. Note the very large range and simplification of the graph. Compare that to the second graph, which was retrieved from the Australian Institute of Health and Welfare. On this one you can see more clearly that there is a significant drop in measles deaths between the two decades before and after the vaccine was introduced. It still shows that deaths were already on the decline, but this is to be expected as a result of better sanitation and advancements in healthcare. I would perhaps argue that in this case, graphs might not be the most convincing way to convey a message.

In decisions concerning their children, parents have a right to be cautious. Vaccine hesitancy is a wide spectrum, and no single message will move an entire population. To be effective, we must listen and respond to the different concerns. I believe that scientists and health care providers have a responsibility to engage in dialogue rather than assume the role of educator of the masses.
