How often have you heard your fellow instructors lament,

I don’t know why I bother with comments on the exams or even handing them back – students don’t go over their exams to see what they got right and wrong, they just look at the mark and move on.

If you often say or think this, you might want to ask yourself, What’s their motivation for going over the exam, besides “It will help me learn…”? But that’s the topic for another post.

In the introductory gen-ed astronomy class I’m working on, we gave a midterm exam last week. We dutifully marked it, which was simple because the midterm exam was multiple-choice, answered on Scantron cards. And calculated the average. And fixed the scoring on a couple of questions where the question stem was ambiguous (when you say “summer in the southern hemisphere,” do you mean June or do you mean when it gets hot?). And we moved on.

Hey, wait a minute! Isn’t that just what the students do — check the mark and move on?

Since I have the data, every student’s answer to every question, via the Scantron and already in Excel, I decided to “go over the exam” to try to learn from it.

(Psst: I just finished wringing some graphs out of Excel and I wanted to start writing this post before I got distracted by, er, life so I haven’t done the analysis yet. I can’t wait to see what I write below!)

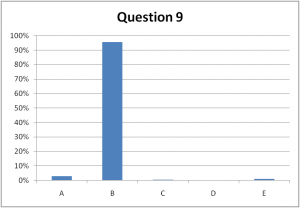

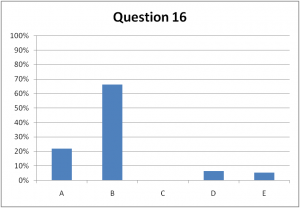

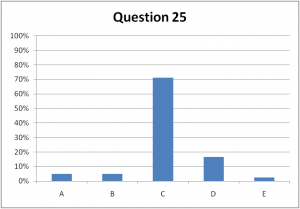

Besides the average (23.1/35 questions or 66%) and standard deviation (5.3/35 or 15%), I created a histogram of the students’ choices for each question. Here is a selection of questions which, as you’ll see further below, are spread widely across the good-to-bad scale.

Question 9: You photograph a region of the night sky in March, in September, and again the following March. The two March photographs look the same but the September photo shows 3 stars in different locations. Of these three stars, the one whose position shifts the most must be

A) farthest away

B) closest

C) receding from Earth most rapidly

D) approaching Earth most rapidly

E) the brightest one

Question 16: What is the shape of the shadow of the Earth, as seen projected onto the Moon, during a lunar eclipse?

A) always a full circle

B) part of a circle

C) a straight line

D) an ellipse

E) a lunar eclipse does not involve the shadow of the Earth

Question 25: On the vernal equinox, compare the number of daytime hours in 3 cities, one at the north pole, one at 45 degrees north latitude and one at the equator.

A) 0, 12, 24

B) 12, 18, 24

C) 12, 12, 12

D) 0, 12, 18

E) 18, 18, 18

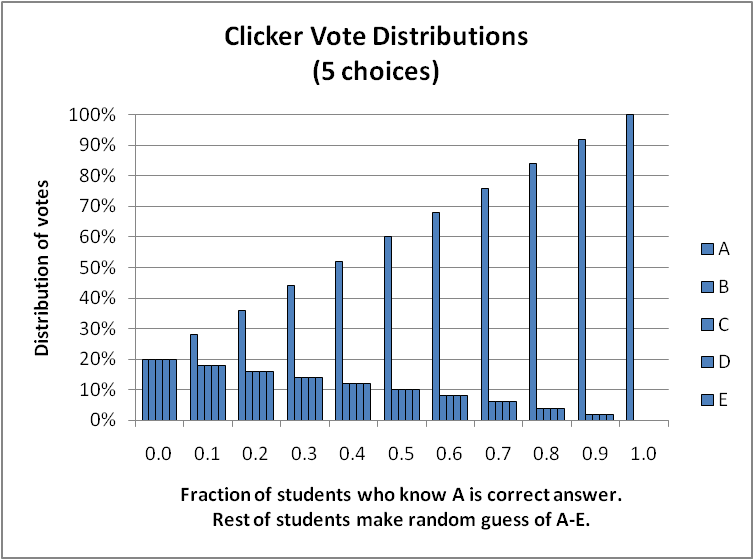

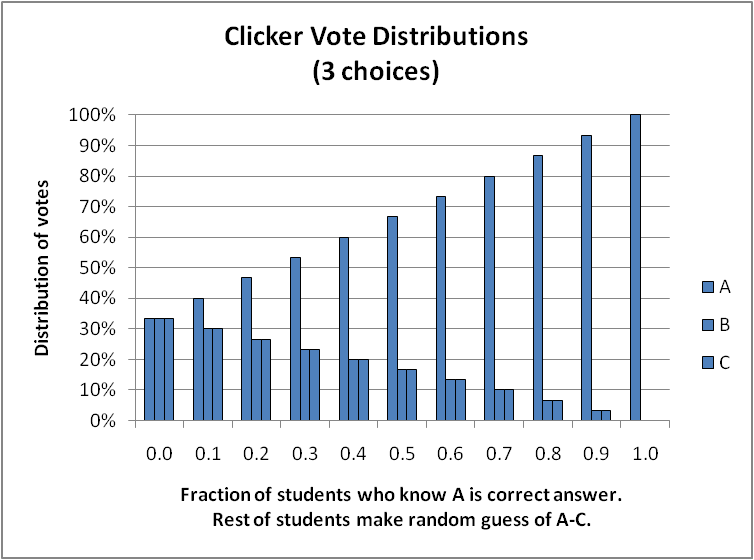

How much can you learn from these histograms? Quite a bit. Question 9 is too easy and we should use our precious time to better evaluate the students’ knowledge. The “straight line” choice on Question 16 should be replaced with a better distractor – no one “fell for” that one. I’m a bit alarmed that 5% of the students think that the Earth’s shadow has nothing to do with eclipses but then again, that’s only 1 in 20 (actually, 11 in 204 students – aren’t data great!) We’re used to seeing these histograms because in class, we have frequent think-pair-share episodes using i>clickers and use the students’ vote to decide how to proceed. If these were first-vote distributions in a clicker question, we wouldn’t do Question 9 again but we’d definitely get them to pair and share for Question 16 and maybe even Question 25. As I’ve written elsewhere, a 70% “success rate” can mean only about 60% of the students chose the correct answer for the right reasons.
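The tallies behind histograms like these are easy to reproduce outside Excel. Here is a minimal sketch in Python, assuming each question's responses arrive as a list of letters; the function names and the sample responses are made up for illustration and are not the class data.

```python
from collections import Counter


def choice_distribution(responses, options="ABCDE"):
    """Return the fraction of students who picked each option on one question."""
    counts = Counter(responses)
    total = len(responses)
    return {opt: counts.get(opt, 0) / total for opt in options}


def unused_distractors(distribution, correct, threshold=0.0):
    """List wrong options chosen by no more than `threshold` of the students."""
    return [
        opt
        for opt, frac in distribution.items()
        if opt != correct and frac <= threshold
    ]


# Hypothetical responses from 20 students to one question (correct answer: B).
responses = list("BBBBABBDBB" "BBEBBBBABB")

dist = choice_distribution(responses)
for opt, frac in dist.items():
    # Crude text histogram: one '#' per 2.5% of the class.
    print(f"{opt}: {frac:5.1%} {'#' * round(frac * 40)}")

# Distractors nobody "fell for" are candidates for replacement.
print("Unused distractors:", unused_distractors(dist, correct="B"))
```

Raising `threshold` a little (say, to 0.02) would also flag distractors that only a handful of students chose.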

I decided to turn it up a notch by following some advice I got from Ed Prather at the Center for Astronomy Education. He and his colleagues analyze multiple-choice questions using the point-biserial correlation coefficient. I’ll admit it – I’m not a statistics guru, so I had to look that one up. Wikipedia helped a bit, so did this article and Bardar et al. (2006). Normally, a correlation coefficient tells you how two variables are related. A favourite around Vancouver is the correlation between property crime and distance to the nearest Skytrain station (with all the correlation-causation arguments that go with it.) With point-biserial correlation, you can look for a relationship between students’ test scores and their success on a particular question (this is the “dichotomous variable” with only two values, 0 (wrong) and 1 (right).) It allows you to speculate on things like,

- (for high correlation) “If they got this question, they probably did well on the entire exam.” In other words, that one question could be a litmus test for the entire test.

- (for low correlation) “Anyone could have got this question right, regardless of whether they did well or poorly on the rest of the exam.” Maybe we should drop that question since it does nothing to discriminate or resolve the student’s level of understanding.

I cranked up my Excel worksheet to compute the coefficient, usually called ρpb or ρpbis:

$$\rho_{pb} = \frac{\mu_{+} - \mu_{x}}{\sigma_{x}} \sqrt{\frac{p}{q}}$$

where μ+ is the average test score for all students who got this particular question correct, μx is the average test score for all students, σx is the standard deviation of all test scores, p is the fraction of students who got this question right and q=(1-p) is the fraction who got it wrong. You compute this coefficient for every question on the test. The key step in my Excel worksheet, after giving each student a 0 or 1 for each question they answered, was the AVERAGEIF function: for each question I computed

=AVERAGEIF(B$3:B$206,"=1",$AL3:$AL206)

where, for example, Column B holds the 0 and 1 scores for Question 1 and Column AL holds the exam marks. This function takes the average of the exam scores only for those students (rows) who have got a “1” on Question 1. At last then, the point-biserial correlation coefficients for each of the 35 questions on the midterm, sorted from lowest to highest:

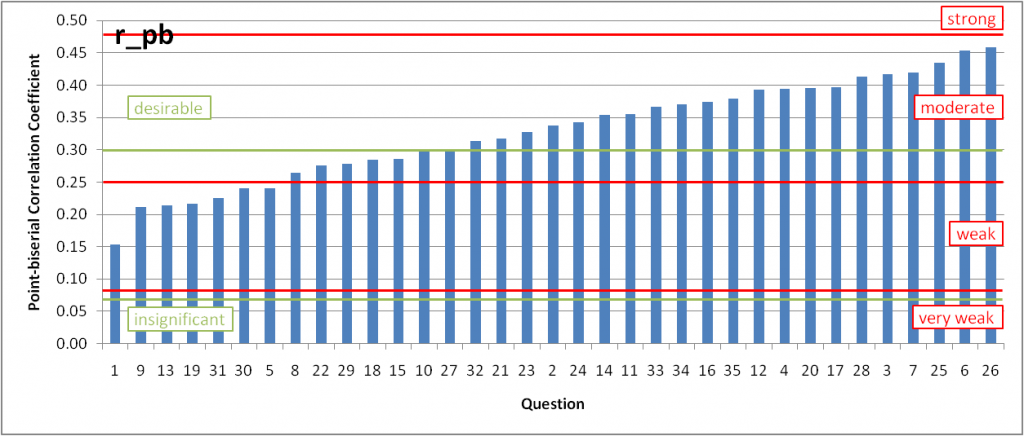

Point-biserial correlation coefficient for the 35 multiple-choice questions in our astronomy midterm, sorted from lowest to highest. (Red) limits of very weak to strong (according to the APEX dissertations article) and also the (green) "desirable" range of Bardar et al. are shown.
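For anyone who would rather not fight with Excel, the same calculation can be sketched in Python. This is a sketch under stated assumptions, not a copy of my worksheet: the function name and the scores are invented for illustration, and it uses the population standard deviation (`pstdev`, like Excel's STDEVP). With that choice the formula gives exactly the ordinary Pearson correlation between the 0/1 column and the exam marks. If your worksheet used the sample standard deviation (STDEV), the values come out slightly smaller.

```python
from math import sqrt
from statistics import mean, pstdev


def point_biserial(item_scores, total_scores):
    """Point-biserial correlation between one 0/1 question and the exam marks.

    item_scores  -- 0 (wrong) or 1 (right) for each student on this question
    total_scores -- each student's overall exam mark, in the same order
    """
    if len(item_scores) != len(total_scores):
        raise ValueError("item_scores and total_scores must have the same length")

    p = mean(item_scores)          # fraction who got the question right
    q = 1 - p                      # fraction who got it wrong
    sigma_x = pstdev(total_scores)  # ASSUMPTION: population standard deviation
    if p in (0, 1) or sigma_x == 0:
        raise ValueError("coefficient is undefined for this question")

    # Equivalent of AVERAGEIF: average mark of students who scored 1 here.
    mu_plus = mean([t for i, t in zip(item_scores, total_scores) if i == 1])
    mu_x = mean(total_scores)
    return (mu_plus - mu_x) / sigma_x * sqrt(p / q)


# Hypothetical data: 8 students, one question, made-up exam marks out of 35.
item = [1, 1, 1, 0, 1, 0, 0, 1]
totals = [30, 28, 25, 15, 22, 18, 12, 26]

r_pb = point_biserial(item, totals)
print(f"point-biserial coefficient: {r_pb:.2f}")
```

To get the sorted plot, you would call `point_biserial` once per question column and sort the results.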

First of all, ooo shiny! I can’t stand the default graphics settings of Excel (and PowerPoint) but with some adjustments, you can produce a reasonable plot. Not that this one is perfect, but it’s not bad. Gotta work on the labels and a better way to represent the bands of “desirable”, “weak”, etc.

Back to going over the exam, how did the questions I included above fare? Question 9 has a weak, not desirable coefficient, just 0.21. That suggests anyone could get this question right (or equivalently, no one could get this question right). It does nothing to discriminate or distinguish high-performing students from low-performing students. Question 16, with ρpb = 0.37, is in the desirable range – just hard enough to begin to separate the high- and low-performing students. Question 25 is one of the best on the exam, I think.

In case you’re wondering, Question 6 (with the second highest ρpb ) is a rather ugly calculation. It discriminated between high- and low-performing students but personally, I wouldn’t include it – doesn’t match the more conceptual learning goals IMHO.

I was pretty happy with this analysis (and my not-such-a-novice-anymore skills in Excel and statistics.) I should have stopped there. But like a good scientist making sure every observation is consistent with the theory, I looked at Question 26, the one with the highest point-biserial correlation coefficient. I was shocked, alarmed even. The most discriminating question on the test was this?

Question 26: What is the phase of the Moon shown in this image?

A) waning crescent

B) waxing crescent

C) waning gibbous

D) waxing gibbous

E) third quarter

It’s waning gibbous, by the way, and 73% of the students knew it. That’s a lame, Bloom’s taxonomy Level 1, memorization question. Damn. To which my wise and mentoring colleague asked, “Well, what was the exam really testing, anyway?”

Alright, perhaps I didn’t get the result I wanted. But that’s not the point of science. Of this exercise. I definitely learned a lot by “going over the exam”, about validating questions, Excel, statistics and WordPress. And perhaps made it easier for the next person, shoulders of giants and all that…