I have started a series of blog posts reviewing what others have done related to the ethics of educational technology–see Ed Tech Ethics part 1 and Ed Tech Ethics part 2 so far.

Here in Part 3 I want to talk about a new resource I came across on Mastodon, a chart of Elements of Digital Ethics, by Per Axbom.

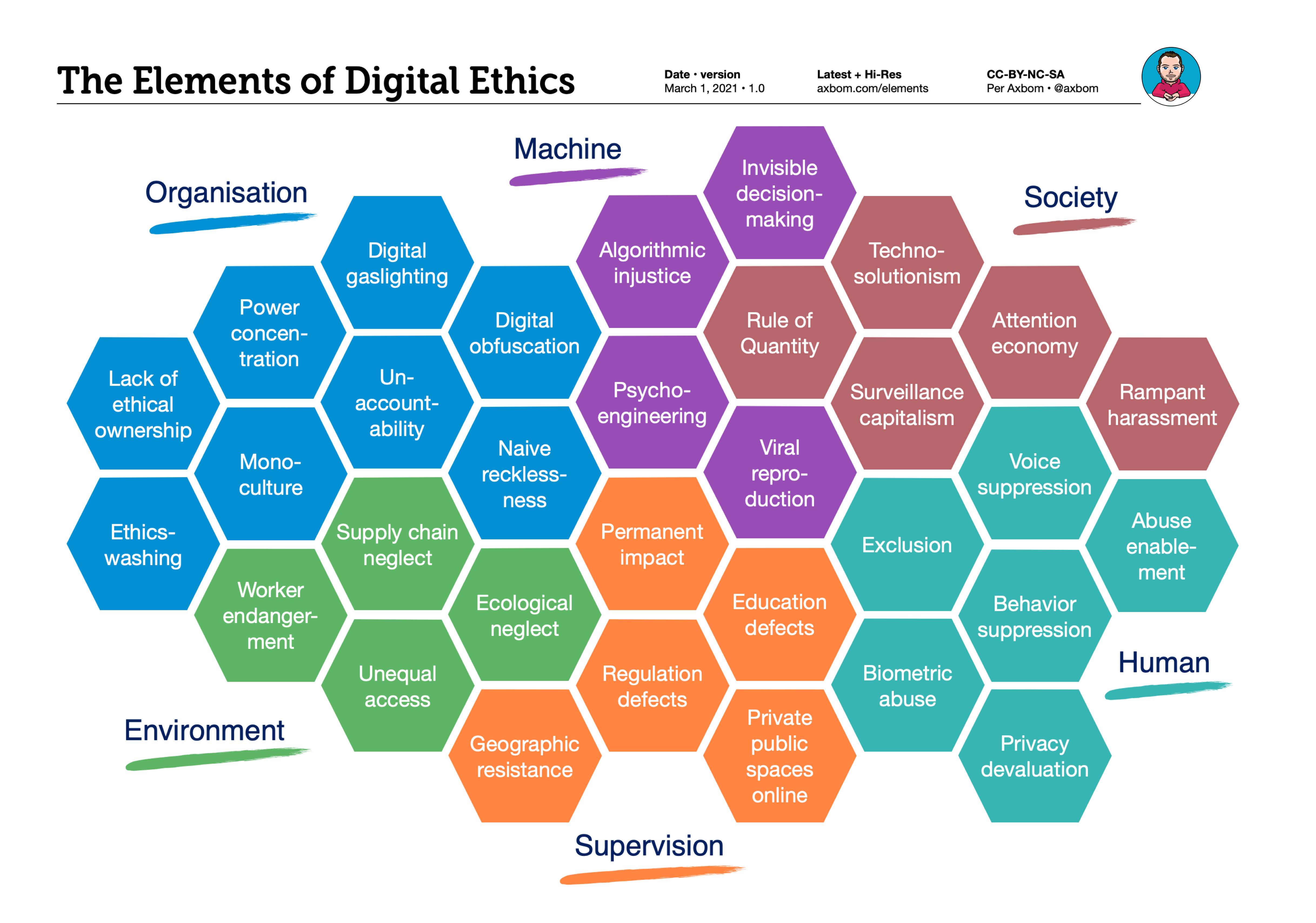

The Elements of Digital Ethics, by Per Axbom. Licensed CC BY-NC-SA 4.0.

This chart is meant to cover ethical considerations and concerns related to work with digital technology in general, and much (if not all?) of it is also relevant to educational technology. There is a lot here, and I won’t go over every piece (Axbom’s website helpfully provides a summary of each area), but I do want to offer a few reflections to help me connect this work to educational technology specifically, and to what I’ve reviewed in previous posts.

The elements of the chart are not ethical principles or criteria so much as they are broad-ish areas in which ethical concerns and harms arise, and that should be considered when deciding on things like what to purchase and how to use digital products and services.

Categories of digital ethics

Axbom breaks up the list into six areas of ethical considerations.

Organization

The areas in this category have to do with the organizations creating digital products and services. It includes things like whether anyone in the organization is responsible for ethical considerations and practices, whether the organization practices ethics washing, and whether there is accountability in the organization for harms. Another aspect I want to highlight here is what Axbom calls “monoculture”:

Through the lens of a small subset of human experience and circumstance it is difficult to envision and foresee the multitudes of perspectives and fates that one new creation may influence. The homogeneity of those who have been provided the capacity to make and create in the digital space means that it is primarily their mirror-images who benefit – with little thought for the wellbeing of those not visible inside the reflection.

A lack of diversity amongst those who design, create, implement, and support digital products is a significant ethical concern, since it makes it too easy to overlook gaps and harms that affect others.

Machine

This category has to do with the ways in which the design of machines, and decision-making by machines, can have harmful impacts. This includes injustices perpetuated by the use of algorithms, and invisible decision-making: as code grows through continual additions by many people, fewer and fewer of them understand how it all works, which makes it difficult for most people to understand how automated decisions are made.

Society

This category is about the larger social impacts of digital products and practices. It includes “technosolutionism,” a sense that technology can and should be used to solve a wide array of problems (often without also recognizing the ways in which it can cause further problems). Another area of concern is surveillance capitalism (a term coined by Shoshana Zuboff), in which large amounts of data are gathered about individuals (or types of individuals) and used to profit from them more effectively.

Human

Aspects under this category focus specifically on human wellbeing (though Axbom notes that many of the above do as well). This includes the ways that digital products and practices can enable abuse; suppress voices that do not adhere, for various reasons, to the norms of communication, practice, and thinking that are baked into the products; and suppress behaviour once we come to understand that everything we say or do can be (and often is) tracked and recorded through many of the digital products we use.

Supervision

This category covers challenges in addressing the harms in the other categories, including regulation (which often lags behind the pace of technological change), the ethics education of digital professionals, and long-term or permanent impacts such as the release of data that can’t be taken back.

Environment

This category has to do with issues and impacts that may be considered secondary to the work of creating or using digital technology because they happen in the “environment” around those activities. For example, Axbom includes in this category the ecological impacts of manufacture and use, worker endangerment (such as having to sift through hate speech or violence in communications, or the moral harm of knowing you are contributing to harms to others without being empowered to make significant change), and neglect of the harms that can occur in the supply chains that make digital products possible (e.g., worker exploitation). Another aspect here is unequal access–e.g., access to the internet–and the impacts of overlooking such differences in access when organizing our work, educational, and leisure activities.

Some reflections

There is a lot here, and that is because there are many things to consider in the wide field of digital ethics. I expect folks could even think of a few more–e.g., under human wellbeing impacts there could also be harms to mental health and relationships from the attention economy, or to physical health from postures required by devices.

For me, the value of this set of considerations lies in how wide it is, and in how it could invite further additions–it helps us recognize that ethical considerations in this area are many and complex, and can’t be easily addressed by a few simple actions. But that benefit is also a challenge, because it can make the issues seem overwhelming. Where and how can we even start? And what can individuals do to make a difference?

Axbom suggests using the chart to spark discussions about ethical problems and risks, and notes that it can also serve as a set of considerations when doing risk assessments. How might it be useful for thinking and acting in relation to ethics and learning technology?

Similar to the discussion in the previous post in this series, this chart encourages a focus on the companies behind the learning technology that an institution may choose to purchase–the ethics of their own actions, accountability for harms, obfuscation in their terms, documentation, marketing, and more.

The machine category can apply to ed tech tools that involve machine learning, artificial intelligence, or algorithmic decision-making in other ways…which many do, and more are likely to in the future.

Impacts in the human category are also quite relevant, including the exclusion that results when tools are designed with certain kinds of practices, experiences, knowledge, abilities, and identities in mind at the expense of others. Tools can require high bandwidth and so be inaccessible to learners without it. They can increase student stress significantly, in ways that lead students to do less well academically. They can devalue privacy too much for the sake of expediency.

In the society category, it can be easy to slip into technosolutionism when vendors market their tools that way, whether directly to faculty and students or to institutions. Tools that enable greater communication and collaboration within classes and across institutions can also enable harassment, and institutions need practices and policies in place for addressing it.

In supervision, one area that stands out to me as relevant to educational technology is education defects–the lack of attention to ethics in the training and everyday practices of ed tech decision-makers and professionals, both in vendor organizations and in post-secondary institutions. Now, this is a wild generalization and I’m sure it doesn’t apply in all cases!

Finally, in environment, I’m thinking about possible worker endangerment amongst ed tech workers, if they are required in their work to implement and support tools that cause harm in areas such as those delineated in the chart.

Finally

When I started making blog posts about ed tech ethics recently, I had in mind a suggestion made by a working group at our institution to develop a set of principles to guide the selection of learning technology tools, one that would include ethical considerations beyond the legal privacy considerations we already use. I do think we should still do that, but the more I dig into what others have done in the area of ed tech ethics, the more I realize that it is just one small piece of approaching this area with an ethical lens.

We can (and should!) develop a set of principles for selecting tools, and these can include considerations such as the organization/vendor, the way the tool works and possible exclusions, suppression of voices and impacts on wellbeing (among other things); but we should also consider broader practices such as how decisions are made, who is making them (and who is not included), processes for reporting and addressing harmful impacts, education about possible harmful impacts, and more.

So really, this is going to be a multi-year, multi-pronged, complicated set of activities to bring ethics more deeply into educational technology choices, decisions, uses, supports, and other aspects of our practices.

Text version of chart

There are six categories of digital ethics in the chart, each with several subcategories; all are listed below for accessibility purposes, since the chart above is an image.

Organisation

- Lack of ethical ownership

- Ethicswashing

- Monoculture

- Power concentration

- Unaccountability

- Digital gaslighting

- Digital obfuscation

- Naive recklessness

Machine

- Algorithmic injustice

- Invisible decision-making

- Psycho-engineering

- Viral reproduction

Society

- Rule of quantity

- Technosolutionism

- Attention economy

- Surveillance capitalism

- Rampant harassment

Human

- Exclusion

- Voice suppression

- Abuse enablement

- Privacy devaluation

- Behaviour suppression

- Biometric abuse

Supervision

- Permanent impact

- Education defects

- Regulation defects

- Private public spaces online

- Geographic resistance

Environment

- Worker endangerment

- Supply chain neglect

- Unequal access

- Ecological neglect
