Thank you to each of my students who took the time to complete a student evaluation of teaching this year. I value hearing from each of you, and every year your feedback helps me to become a better teacher. As I explained here, I’m writing reflections on the qualitative and quantitative feedback I received from each of my courses.

After teaching students intro psych as a 6-credit full-year course for three years, in 2013/2014 I was required to transform it into 101 and 102. Broadly speaking, the Term1/Term2 division from the 6-credit course stays the same, but there are some key changes because students can take these courses in either order from any professor (who uses any textbook). These two courses really still form one unit in my mind so I structure the courses extremely similarly. I have summarized the quantitative student evaluations in tandem. Students rate my teaching in these courses very similarly. However, I will discuss them separately this year because of some process-based changes I made in 102 relative to 101.

To understand the qualitative comments in Psyc 101, I sorted all 123 of them into broad categories of positive, negative, and suggestions, and three major themes emerged: enthusiasm/energy/engagement, tests/exams, and writing assignments. Many comments covered more than one theme, but I tried to pick out the major topic of the comment while sorting. Twenty-one comments (18%) focused on the positive energy and enthusiasm I brought to the classroom – enthusiasm for students, teaching, and the discipline (one other comment didn’t find this aspect of my teaching helpful). All nine comments about the examples I gave indicated they were helpful for learning.

Thirty-four comments focused largely on tests (28%). Last year I implemented the two-stage tests in most of my classes, and I expected to see this format discussed in these evaluations. All but one of the five comments about the two-stage tests were positive. Other positive comments mentioned that tests were fair and that having three midterms kept people on track. Yet, the major theme was difficulty. Of the 17 negative comments that focused largely on tests (representing 14% of total comments), the two biggest issues were difficulty and short length (which meant missing a few questions had a larger impact). The biggest theme of the suggestions (5 comments) was a request for practice questions for the exams. There is a study guide that accompanies the text and that students can use. I wonder how many students make use of that resource? Do they know it exists? Would it help students get a better sense of the exam difficulty? This is a major question I want to ask my upcoming Student Management Team (SMT) this year. Still, it’s important to keep in mind that these comments represented a minority in the context of all 123 that were given.

Twenty-five comments (20%) focused largely on the low-stakes writing-to-learn assessments. Five times throughout the term, students write a couple of paragraphs explaining a concept and then applying it to understand something in their lives. Each assignment is worth 2%. When I implemented this method two years ago I also added a peer assessment component, such that 1% has been for completion, and 1% comes from the average of their peers’ ratings of their work. In year one I used Pearson’s peerScholar application, and in year two (2014/2015) I switched to the “Self and Peer Evaluation” feature in Connect (UBC’s name for Blackboard LMS)… which was disastrous from the data side of things (e.g., giving a zero instead of missing data… and then counting the zero when auto-calculating the student’s average peer rating!). As I expected, most of the comments about the assignments were about peer review failures: missing comments, missing data, distrust of peers’ ability to rate each other, distrust of receiving only 3 ratings that can sometimes be wildly different, difficulty getting a good mark, and suspicion that peers weren’t taking it seriously. For 2015/2016, I have made three major changes to help improve this aspect of the course:

- Return to the peerScholar platform instead of Connect, which should fix part of the missing data problem (and it can now integrate with my class list better than it did before),

- Review 4 or maybe 5 peers rather than 3,

- Implement a training workshop! With the help of a TLEF, Peter Graf and I have been developing an online training module for our courses to help students learn to use our respective peer assessment rubrics and test out the rubric on some sample essays. Our main hypothesis is that students will be more effective peer reviewers—and will feel like peers are more effective reviewers—as a result of going through this process. More to come!
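To make the missing-data problem described above concrete, here is a minimal sketch of the arithmetic. It is an illustration only, not how Connect or peerScholar actually compute anything: the function names, the use of `None` for a rating that was never submitted, and the example ratings are all assumptions.

```python
from statistics import mean
from typing import Optional, Sequence


def average_peer_rating(ratings: Sequence[Optional[float]]) -> Optional[float]:
    """Average only the ratings that were actually submitted.

    A rating of None (hypothetical convention) means the peer never
    submitted one, so it is skipped instead of being counted as zero.
    """
    submitted = [r for r in ratings if r is not None]
    return mean(submitted) if submitted else None


def average_with_zero_fill(ratings: Sequence[Optional[float]]) -> float:
    """The problematic behaviour: a missing rating is treated as a zero."""
    return mean([0 if r is None else r for r in ratings])


# Hypothetical student with three assigned reviewers, one of whom never rated.
ratings = [4, 5, None]
print(average_peer_rating(ratings))     # missing rating ignored
print(average_with_zero_fill(ratings))  # missing rating dragged the average down
```

With only 3 reviewers, a single missing rating counted as zero has a large effect on the average, which is one reason both the platform change and the move to 4 or 5 reviewers should help.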

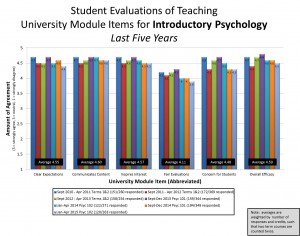

As can be seen in the graph above, quantitative ratings of this course haven’t changed too much over the past few years. The written comments are useful for highlighting some areas for further development. I look forward to recruiting a Student Management Team this year to make this course an even more effective learning experience for students!
