As I mentioned in this previous post, I am working through feedback from my students. All quantitative data, as well as links to all previous blog posts (since 2011), are available here. ALERT! This post has become ridiculously long. Writing it is helping me think and process this overwhelming year, so I’m just going with it.

For this installment, I focus on PSYC 217 — the course I have taught the most: 24 sections and almost 2000 students since 2008. I regularly teach this course in the Fall term, two sections back-to-back of almost 100 students each. This post will focus on my pre-pandemic Fall 2019 results (business as usual), versus results from my mid-pandemic Fall 2020 online offering.

Both iterations featured a group research project scaffolded by labs led by Teaching Fellows (TFs), which culminated in an individual APA-style manuscript as well as a group poster. This course component has been the same across all sections for the last 10 years. Over summer 2020, I worked with two graduate students as well as the other instructors to create Canvas modules to support all PSYC 217 students through each lab, in preparation for the fully online experience in the Fall. During the term, TFs continued to act as support, trying to guide groups through their projects.

In The Before Times, I assigned almost every chapter in the text (and a few short supplementary articles), held classes three times a week for 50 minutes, and measured learning primarily through the project deliverables (as above) plus 3 tests and a final exam. All tests and the exam were two-staged. Here’s the syllabus, which honestly hadn’t really changed much in about 5 years. Neither had my lesson plans, which were dotted with clicker questions, and included many demonstrations and discussions and illustrative examples that had been honed over many years. With the help of Arts ISIT, I set up the system so all lessons were recorded and posted automatically on Canvas (yes, even back in Fall 2019).

Fall 2020 was not business as usual. Early in Summer 2020 I hastily decided that my old lesson plans and course strategies would largely not work in a fully online environment, which also needed to support a fully asynchronous experience for learners joining from all around the world. (In hindsight, I probably could have adapted more than I thought I could, but I needed 9 months of online teaching to realize that.) See the syllabus for details. Major changes: slashed content by about 3 chapters, shifted to two tests and a final exam (none of which were two-staged), added weekly low-stakes quizzes (from the textbook publisher’s materials), added an option to customize some aspects of assessment weighting, and drastically changed how I thought about class time. Mondays became Q&A, where I answered questions from the previous week’s discussion posts, and answered questions live. (Unfortunately I labelled this as “optional”, so it wasn’t well-attended/watched, even though folks who came found it really helpful.) Wednesdays I held class in a form similar to The Before Times, featuring selected topics from that week’s chapter and bringing people together for discussion and demos as best I could. Fridays were for independent work (e.g., discussion posts, quizzes) and/or Labs. To help students stay on track, I curated everything in Weekly Modules, with an opening page that integrated everything to do and think about that week. As I had been doing for years, I closed each week with an announcement with reminders, though these were more elaborate than usual.

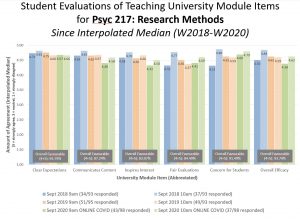

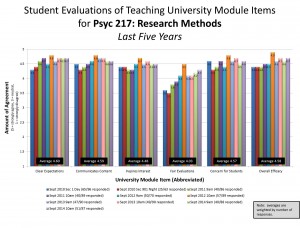

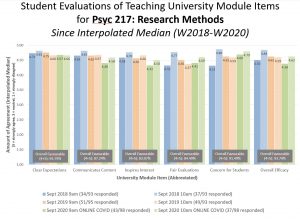

Now that I have oriented you to the major changes in the course, on to student feedback! Quantitative data show that students in 2019 and 2020 rated the course remarkably similarly. Response rates were down a bit in 2020, but not to a worrisome degree. Click to enlarge the graph below:

Interestingly, clear expectations did not change, which might be attributable to the doubling or tripling of efforts to keep students on track with reminders and organizers; that extra effort might be necessary to stay commensurate with face-to-face. Slight drops in communicates content effectively and inspires interest might be related to the relative drop in synchronous class time. For perhaps the first time ever, fair evaluations nudged a little higher than inspires interest, which might be related to the option to customize grade weights. Concern for students has long been an area of strength for me, and I’m not surprised to see this rated highly this year due to the lengths I took to approach all decisions with compassion (though cf. 2018 Section 2?). Overall efficacy is a bit lower (in Section 1 only?) but doesn’t seem meaningfully so.

Qualitative data time! This is always an emotional and difficult undertaking for me, which is why I start with quant to orient me to what I’m looking for. I have 7 pages to work through for 2020, and 6 pages for 2019. I try to roughly code student comments into four quadrants along two continua (thanks to Jan Johnson for teaching me this strategy about a decade ago). The first is valence (positive-negative) and the second is ‘under my control, changeable’ on the one end, and ‘not under my control or not willing to change’ on the other. ‘Not willing to change’ is usually because I have data behind that decision (but I might rethink implementation; see example below) or it’s just not possible given the time-effort that I have to give in the context of my other commitments. It’s also pretty common to read evaluations that directly conflict with each other (I loved this! alongside I hated this!), so I need to look for common themes.

Key points from 2019 qualitative: On the negative side, the modal issue was the quizzes. Quite a few students (~9) mentioned they felt pressed for time on the individual portion, and another couple mentioned there was too little time remaining for the group portion to be effective. I need to think more about this. The in-term quizzes are already quite brief, and I’ve tinkered with length before: too few points and students get stressed that each question becomes valued at close to a percent of their final grade. This is the one downside of a 50-minute class and two-stage exams. But this timing thing is becoming too common a complaint for my liking.

Many students mentioned the use of examples in a positive way, particularly appreciating how applied they were. But also a few students mentioned a desire to be given more examples, or more different examples, or using the examples from the textbook in class. I’m not sure how to do this without increasing lecture time (sacrificing demos, activities, engagement).

On the positive side, the clear themes are two of my signature strengths in the classroom (as I have learned because of reading evaluations over the years): bringing enthusiasm every day, and caring about students — both their learning and broadly as fellow humans. The vast majority of comments of any kind included mention of one or both of these qualities. There were various additional notes of what worked well: asking questions and activities to keep people engaged, using real applied examples, recording and uploading lessons (2 people), office hours, explaining reasons why I do things a certain way, structure/sequencing, wellness moments in weekly announcements. My two favourite comments:

“She’s very passionate, which makes learning more interesting and easier. I really liked how she included the class a lot and used questions and examples to actually help students learn in class, instead of expecting them to just take notes and learn later, like most teachers. I also really liked to set up of the course with the groups and labs and group tests. Group tests and time to discuss in class about questions with my group really helped me to learn.” [emphasis added]

“Dr. Rawn is one of the best professors I have ever had. She made the classes so engaging and interesting, and time and time again showed her genuine concern for her students’ learning and wellbeing. I visited her office hours once and overheard talking to another student about different ways he could improve his wellbeing and performance in a course. I just wish she had longer office hours because I could tell she wants to connect more with her students but has a lack of time to do so.” [emphasis added… to highlight a sentence that fills me with All The Feels. Check out the opening bullet of my previous post.]

“Dr. Rawn has perfected the formula for this class.” –> this student gets the decade+ process behind the course as it was. Which is why I’m filled with terror to begin reading 2020 comments… but here we go…

Key points from 2020 qualitative: Wow. That was a lot to process. On the negative side I have a long list of things people mentioned as not working for them (which was starting to alarm me), but not much in the way of clear themes. Upon reflection, I take this as a good sign, in light of the fact that I simply cannot please 200 people all the time with all the decisions I make across a 13+ week timespan (while in a pandemic teaching a large class online for the first time). A few folks mentioned they wanted more “lecture” (i.e., me talking) and less reliance on the text and less participation. However, at least as many people (if not more) appreciated the engagement in active learning and the value of student-directed Q&A (plus, you know, All The Research on active learning). A few people noted there were too many small assignments, but again a few people mentioned appreciating the range of activities available to show learning. If I group a few comments together about labs and the paper, there are some folks who didn’t feel sufficiently supported in the lab portion. (Fair enough. We all tried our best and knew things weren’t as smooth as in person.) One student mentioned wishing for a better guide to help them navigate course content, but many students mentioned the navigation, organization, and structure of the course as a real strength. Interestingly, enthusiasm barely made it on the list at all; apparently that’s something that comes through in my face-to-face teaching but not so much online.

And yet my heart sinks to read there was one time I didn’t respond in a caring way to a student and it clearly upset them deeply and soured their whole experience of the course. Reading a comment like that just breaks my heart. I am human and I make mistakes in the moment and wish I could take back how words came out of my mouth, and what exactly those words were. But I can’t. I tried to fix it then and that clearly did not work. So although I deeply regret that I couldn’t reach that student, I have to force myself to learn and move on, to always live what I know: every single interaction with a student matters. Even when that interaction is happening anonymously online. And I have to recognize that, by far, the biggest theme across 2020 qualitative comments was that I cared.

Many students mentioned that I cared and that made a difference for them. I cared that they learned, and they noted I worked hard for them which made them motivated to work hard too. I responded to emails consistently and in timely ways, and I asked for feedback each week and used it to make real changes students experienced. I also cared about them as human beings who were learning in a pandemic. Many students mentioned my concern for their well-being, compassion and flexibility, Wellness Moments in announcements, and how I chose to highlight self-care and compassion in examples I used to teach the content. I found it interesting that each of these specific choices was mentioned more than once, and this theme of care was a much bigger deal than anything about the course content or technology used or assessments or anything else. We teach people, not topics or courses. My two favourite comments:

“Dr. Rawn was highly adaptive, and showed great care and concern for her students. She produced a safe, and engaging learning environment. It was clear that she had her students well–being in mind when she designed this class. Her lectures were effective in producing clarity, and her Ask Dr. Rawn Sessions allowed us to further learn, and develop a sense of community in discussion our ideas with peers.”

“Dr. Rawn went above and beyond to teach this course. Her lectures and labs were very engaging and fun. Also, she provided useful resources. Even outside the class, she made sure that the students were on track with quizzes, discussion boards, and take home surveys. When I first came to this class, I had little hope with how it was going to be taught, considering we couldn’t conduct experiments in person. But Dr. Rawn gave me so much hope and motivation towards my project. I really appreciate a professor like this who overcame the problem of COVID–19 and social isolation, and to be able to bring us all together and work hard.”

Next time on the blog… I reflect on student feedback and my experiences in PSYC 218 Statistics, where I changed relatively little about the course, and had 2 terms of experience teaching online under my belt already.
