Welcome to part four–the final installment!–of my reflections on student evaluations of teaching from 2011/2012. Please see my earlier posts for a general introduction and reflection on feedback from my Psyc 217 research methods, Psyc 100 intro course, and Psyc 208 Section 002 Special Topics course. I have also posted graphs that facilitate comparison across all my courses and years I have taught them.

First, as always, I would like to thank each of my students who took the time to complete a student evaluation of teaching this year. I value hearing from each of you, and every year your feedback helps me to become a better teacher. Please note that with respect to the open-ended responses, I appreciate and consider every thoughtful comment. The ones I write about are typically those that reflect common themes echoed by numerous students.

Psyc 218: Analysis of Behavioural Data

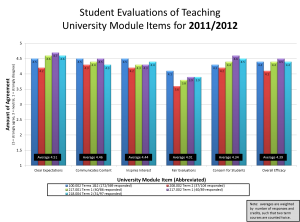

Because I have only taught this course once, I am interpreting the numerical data in reference to my other courses that I have taught multiple times. As you’ll see from the graph below (click on it to enlarge), students rated this course right on par with my others. In fact, ratings were almost exactly what I received from students in my research methods courses. Given that the third midterm was much more challenging than I had anticipated, it surprised me somewhat that students rated the fairness of evaluations as highly as my students in research methods did. At 3.9, there is definitely room for improvement there (in both courses). Interestingly, clarity of expectations was also high, which lends support to my hypothesis that these two are related (see further discussion in my research methods reflection). Overall, these numbers signal to me that students are feeling positively toward this course.

After reading the comments, I must say an extra thank you to each of you for the polite and thoughtful tone used in delivering this feedback. The most common point of discussion was an acknowledgment that the first two midterms were too easy, and the last too difficult and too lengthy. I absolutely agree: it was clear during the semester and is clear in the evaluations. I will make every attempt to even out the difficulty of these exams next time. What I appreciated most was the way these comments were delivered. Here’s an example:

The course was a lot of fun, and easy to understand. However, for the future, I would prefer if we can have midterms with a consistent level. It was a huge shock for me on the last midterm. 🙁

In case you’re writing these kinds of comments in the future, here’s why I found this comment particularly effective: it starts with a positive that was at least a bit specific (could be more so), and conveys a respectful tone set by phrases like “I would prefer”. There’s no personal attack toward me here, but a fair acknowledgment of an area in this course that needs improvement.

The midterms were by far the most discussed aspect of the course. However, many people also noted how much they valued my enthusiasm and interactive style. Here are a couple of specific comments that capture the sentiments echoed by many students in the class:

Not one minute is wasted in class. She is always teaching in innovating and varied ways.

Although still challenging, I found this course to be enjoyable. Dr. Rawn was approachable and tried hard to interest and even engage us in the material and provided encouragement to students. I liked how the iclickers were used so that u could test yourself to see if you understood the material without having to worry about losing marks. As already discussed in class, the midterms although sometimes too long or short, I thought the questions were fair (they tested for understanding rather than memorization). I thought the spss assignments were a good way of understanding theory and application of the tests we learned although I found some questions unclear.

For a few people, it seems I was able to calm some of their hesitation toward math/numbers. This is fabulous to hear, because it’s something I really tried to do throughout the course. Here’s an example of one of these comments:

I thought Dr. Rawn did an amazing job teaching this course. I have struggled with math and was not enthusiastic to take this course however, she inspired motivation and made this course interesting and easy to learn. I ended up with a higher grade in this course than I anticipated and I owe it all to Dr. Rawn.

While I’m not convinced this student “owes it all” to me, it seems that I was able to offer some support beyond the technicalities of course content, reflecting one of my personal goals for this course this year. Again, I’d like to thank everyone who completed the student evaluations for doing so in such a thoughtful and respectful way. Your feedback will influence the way I teach this course in the future.

Conclusion

What a helpful exercise that was! Writing about my student evaluations of teaching helped me to really think about what you (my students) were saying about my teaching and my courses. If I had to pick one overall goal for me to keep in mind next year, it would be having clear expectations and communicating those effectively. I think I’m doing this well in some courses, making progress in others, and have more room for improvement in others.

Reading student evaluations can be a very emotional experience for those of us who dedicate our lives to helping others learn. Overall, I’m thankful for the respectful tone that most of my students used when identifying strengths and areas for growth. When feedback isn’t conveyed respectfully, it is difficult to hear what is being said. Thank you for taking the time to provide feedback, and for the way you did it.

For educators who might be reading this, I also gained insight into a process that helped me be optimally receptive to feedback: (1) I started with the numbers — comparing within year and within course (using graphs) really helped me set up an analytic frame of mind; (2) once I was in that analytic (rather than emotional) frame of mind, I read the comments and used them to help me understand what I was seeing in the numbers; (3) I wrote about it–you might share it or not, but this was really helpful for me to make sure I processed the messages and decided on action plans.

Onward and upward!
