Thank you to each of my students who took the time to complete a student evaluation of teaching this year. I value hearing from each of you, and every year your feedback helps me to become a better teacher. As I explained here, I’m writing reflections on the qualitative and quantitative feedback I received from each of my courses.

This was another year of relatively major changes in this course. I have revised and revised this course over the years. This year, I needed to replace the readings anyway, so I used it as an opportunity to do a thorough course evaluation. Last summer I carefully considered this course with respect to Dee Fink’s model of course design. In brief, Fink’s model prompts an analysis of the degree to which the learning objectives/course goals, learning assessments, and teaching techniques are integrated with each other. I presented the results of this analysis at a conference (follow this link for the conference presentation support materials). What I learned from this analysis is that my class-by-class learning objectives (and therefore my exams) were really only addressing two of my broader course goals.

Major changes in 2013/2014

- Revised most readings. Created a new custom set of readings from only one publisher, omitting most of the sport psychology chapters that many students had had trouble connecting with in previous iterations.

- Revised topic sequence, in-class topics, and exams to align with new content. This meant re-arranging some topics, reframing others, cutting a few entirely, and creating a few new lessons on new topics.

- Instead of using the Team-Based Learning-style team tests for two units (one of which was now gone entirely), I created a “Learning Blitz” to serve the same sort of readiness assurance process. In brief, students came with readings prepared, then worked on questions that guided what they were to take from the readings (e.g., keywords, key studies, take-home message). My intent was to help students learn to extract the most important information from readings, while working together.

- As I said I would in response to last year’s feedback, I created an exam study guide that I distributed to students the week before each exam. It collected all learning objectives, keywords, key studies, etc., together in one place as a sort of “here’s what to know” from class and the readings.

- The TA who had helped me develop the course over four years graduated. Two new TAs were assigned to my course. They were keen to help support the course, but we did hit some snags.

As you can see, this was a big year in the life of this 208 section. Personally, I felt challenged by the sheer amount of revision needed. When I consider my course design intentions, I think I inched toward integrated assessment and teaching techniques (still lots of room to grow there), and better aligned my course goals and learning objectives with assessments. I also realized just how much work my former TA did to ensure feedback and support were given in a timely way to each group, and to ensure consistency of grading with her fellow TA (who changed most years). I need to be better prepared with a process for communicating more effectively and regularly with TAs, and helping them work together to ensure coordination throughout the grading process.

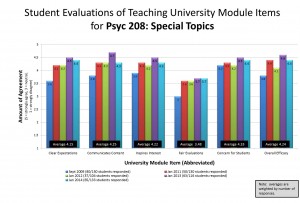

Quantitative student feedback was on par with previous years (see the graph above), but qualitative comments tended to hit a different tone. Many students commented positively about how motivated they were to come to class, how much they enjoyed my teaching style and the activities that we did to encourage them to apply the material to their lives. Some students mentioned that I created a “positive learning environment” and was “engaging” and “inspiring.” These comments were consistent with previous years, and I’m glad that many students are finding value in this course and my approach to it.

The suggestions for improvement seemed related to the changes I made, and fell largely into two categories: grading and content. Commonly, students commented that the midterm exam and assignment grading were difficult. There was frustration with the required means – I was frustrated by that too. These means were perhaps more salient than in previous years because of how I handled a couple of things: instead of asking my TAs to revise their grades on an assignment to better align with each other, I scaled them quite explicitly (i.e., one half of the class had a +3 boost, the other had a -5 reduction; on the midterm, I scaled +7 for everyone). The midterm difficulty was an overshoot because of the revisions with the new material (not an unusual occurrence). What I wish I had done with the assignment was ask the TAs to take a couple of extra days and revise their grades to come to a common acceptable mean. It would have had the same effect on the grades, but the process would have reduced the salience of the scaling problem. As it stood, half the class seemed to feel like they were punished – when in fact they were simply over-graded initially. Process is crucial. Lesson: Carefully ensure TAs are communicating regularly and are aligned throughout the grading process for the assignment.

The handful of comments on the content surprised me a little. One student mentioned high overlap in the content between this course and some others (although also noted that the applied take on it was new). A few students mentioned that they desired more depth of theory/research and less application. One person phrased it like this, “I know that Dr. Rawn really enjoys research, so I am confident that she teaches us things that has research to support it…. I wish the course focused more on helping [us] understand definitions, and different approaches so I could make connections between material and life myself.” This feedback surprised me. I feel like I am constantly describing studies, but the fact that a few students made similar comments means that maybe this course is starting to come across as preachy (this is how to live a good life and how I apply it and you should too), and, perhaps consequently, less rigorous. I’m not sure what to do with this feedback, but it’s certainly something to think about further.

Other useful suggestions for next year:

- Clarify and simplify the group project handout. It has been updated each year for a few years, so it reads a bit patchy. Give the rubrics ahead of time. Like last year, I’m nervous about grading – but perhaps use the rubrics as a base to structure the handout.

- Offer half a lab day about a week before the presentation (maybe cut Lab 3 into two half days?)

- Have some sort of control over the chaos that is the presentation. Maybe have a bell every 15 minutes – could I bring someone in to do that? I’m busy grading.

- Shorten the learning blitz requirements: they’re too long for meaningful discussions and some groups are reporting splitting the workload rather than discussing each item together.

- Consider having pairs of groups – or encouraging even-numbered teams to match with an odd-numbered team – something that helps people meet new people other than their teammates every once in a while.

Thank you to everyone who provided feedback. This course, more than any other I teach, goes through growing pains regularly, and this year felt like a big growing pains year. I have a lot to think about revising as I move forward, and also a lot of success to celebrate. This deliberately unconventional course – although not everyone’s cup of tea – does seem to be reaching a subset of students in a very positive way.
