Although I’ve polished/reconfigured my teaching philosophy a few times over the years, I’m feeling compelled to take it in a new direction. It’s not that my teaching has taken on an entirely new form… well, maybe in some ways it has. I’m feeling a greater sense of clarity these days, specifically around collaboration and community-building. Here is the latest draft of my single-sentence nutshell version, followed by lengthier elaboration and musings.

The goal of my teaching practice is to help people develop ways of thinking and doing that are informed by the methods and evidence of quantitative psychology, and that will prepare them to engage in their social worlds throughout their lives.

I chose many of these words very carefully.

goal, my teaching practice: indicate my own process of ongoing development. For me, teaching is not a skill I can check off as “mastered” — which is actually one of the reasons I enjoy it so much. It’s a set of skills and attitudes I can get better at through reflective practice, but it’s never “done.” Also noteworthy at this point: although this statement is grounded in what (I think) I do, it is also somewhat aspirational and intended to help me make decisions about how to change my teaching in the future.

help, develop: In my most effective teaching moments, I am acting as a guide, not some sage trying to fill brains with what I know. I view myself in a supporting role, and that probably has something to do with the most impactful teachers in my life, who treated me as an individual person (see below) and pushed me to develop new skill sets, regardless of how scary or challenging that was (I’m thinking of you, Clinton, Ross, Holmes, Biesanz, Vohs…). Because many of my core courses are foundational (introductory psychology, research methods, statistics, teaching of psychology for graduate students), I sometimes get to see and support developmental change in my students across much of their degrees. When possible, I provide opportunities for students to practice and build on past work.

people: reminds me that my students are more than just students (just as I am more than their instructor). I put this into practice by saying this, right before every exam begins: “and remember, your value as a person has nothing to do with your performance on this or any other exam.” Year after year, students thank me for this tiny reminder that they are not the sum of their grades. Also, this word reminds me that some of my teaching activities reach beyond traditional classroom settings and involve people who aren’t necessarily “students” per se.

ways of thinking and doing: I am increasingly aware of the fact that when I use lecture, I am giving students ample opportunity to practice note-taking and listening… which are not entirely useless skills, but are maybe not the best use of our time together (see above). With each new course I develop and each course I revise, I strive further toward spending class periods giving students time to practice their thinking and doing (asking, answering, brainstorming, designing, summarizing, analyzing, performing, playing, creating). To support doing-driven assignments, I need to work on building space in my course for further skill development.

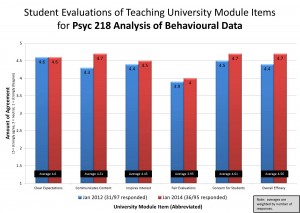

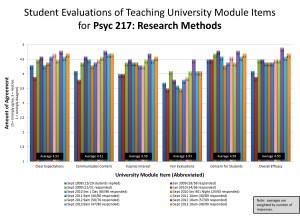

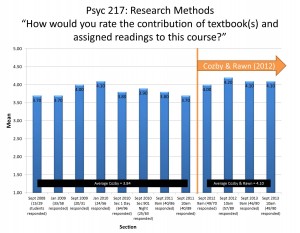

methods and evidence of quantitative psychology: By training, I am a quantitative social psychologist. I teach research methods and statistics, write a research methods textbook, and deliberately chose to use an introductory psychology textbook that emphasizes research methods and combating pseudoscience. I think the tools and content of psychology are useful for critically consuming information that is thrown at us, and for engaging productively in the world. In my classes, I create opportunities for students to apply concepts and methods (test questions, assignments). Importantly, I use the methods and evidence of quantitative behavioural sciences to inform my teaching practice.

engage: Here’s a word that was core in my old teaching philosophy. The way I’m looking at it now is this: I structure my learning situations to require learners to participate and engage with the material and each other. I use various common techniques: vivid examples, videos, attention-grabbing questions sprinkled throughout class (clickers), and projects that mean something (e.g., identify a problem you’re having and find research to help). I also use some that are uncommon (two-stage exams). I engage students in the learning process by breaking down my first- and second-year courses into four chunks (three midterms and a cumulative final). In my intro course, I require students to begin studying at least 2 days before each midterm because a short, low-stakes concept check paper is due. Another way I try to engage is by building community…

social: Now we get to the primary reason for this whole revision. Most of the changes I’ve made over the years to my courses have been to make them more social. Community-building and collaboration feature heavily in my courses, and I’m ready to commit to them as defining features of my teaching. The research literature supports this approach. “Active learning” techniques that involve some sort of peer-to-peer engagement seem to work best to help students learn. I also believe that in order to engage effectively in their personal lives and broader society, people need practice developing the skills and attitudes needed to work with and learn from others. The social looks different in different courses (in part depending on class size and level), but includes two-stage/team tests, group projects, peer evaluation, reviewing peers’ written work and presentations, informal discussions prompted by clicker questions, team writing, occasional field trips, and invitational office hours. Also, the more students talk in class, the stronger the sense of community they feel. Because my classes tend to be very large (88 is the smallest, excepting my grad class), and many students have traveled from around the world to be here at UBC, increasing the social in class may help struggling students feel more connected. Next year, my new course on the psychology of social media will explore the theme of technology-mediated connection and will require it!

throughout their lives: My greatest teaching efforts are lost if they do not contribute to some lasting change for some people some of the time. My hope is that some of the skills, tools, and ways of thinking, doing, and engaging will positively influence the people I teach, if ever so subtly.

Some other thoughts

Technology-enhanced experience. I use technological tools wherever it makes sense to facilitate my goals. Examples: clickers, Connect, self-and-peer evaluation (Connect, peerScholar), scratch cards and live test grading, Turnitin, and videos.

Integrated Course Design model. With each new course I’m developing, I’m getting better at applying Fink’s model. Basically, strive for alignment among course goals, learning assessments, and in-class experiences.
