Making Connections

Personal Connections – Learning

Four years ago I suffered an injury that tore one of the tendons that control movement in my thumb. I eventually regained use of the thumb and was able to perform all daily activities with little trouble. All except one: it was difficult and very painful to write. So I turned to the computer. I bought myself a laptop, and thankfully my part-time job was in a fully computerized environment. At first I saw it as a very efficient substitute writing tool; it was much quicker to type than to jot down notes. About half a year later I began to feel that learning was becoming more difficult and more tiring, and that my creativity had suffered.

It wasn’t until I took this course that I began to really investigate the relationship between the switch to typing and these difficulties.

Thinking about the definition of text and looking at the evolution of writing spaces and technologies made me reflect on my current and previous modes of learning. My earlier notes were meticulously underlined, highlighted, and written in different colours (while this is also possible on the computer, I rarely used these functions because I owned a black and white laser printer). The handwriting was all over the page, with little clumps of information connected by arrows and diagrams. The margins were reserved for ‘outside links’, where I made personal connections and devised memory aids to help me synthesize and remember information and ideas. This practice also extended to any papers, textbooks, and novels that I read. However, the injury discouraged this, and I ended up typing a few notes on the computer instead of directly on the page, which made the information feel … disconnected.

Remediation

The concept of remediation was also very useful in my understanding of the difficulties with embracing technological use in schools. As a TOC I visited many schools and saw many classrooms wherein the computer lab was used for typing lessons, KidPix, or research. Many schools also have Interactive White Boards (IWBs), and teachers use them as, in essence, a very cool replacement for a worksheet. Remediation helps frame and pinpoint the reason for this phenomenon: the use of technology is not just a set of skills; it is a change in thinking and pedagogy. Literacy is not just literacy anymore; it has become multiliteracies and Literacy 2.0. Teachers cannot continue to teach reading and writing the same way as before, because text is not the same anymore.

December 22, 2009 No Comments

Remediation

“…a newer medium takes the place of an older one, borrowing and reorganizing the characteristics of writing in the older medium and reforming its cultural space.” (Bolter, 2001, p. 23)

Bolter’s (2001) definition of remediation struck me a bit like a Eureka! moment as I sat at lunch in the school staffroom, overhearing a rather fervent conversation between a couple of teachers about how computers are destroying our children. They noted how their students cannot form their letters properly and can barely print, not to mention write in cursive that is somewhat legible. The discussion became increasingly heated as one described how children could not read as well because of the advent of graphic novels, and her colleague gave an anecdote about her students’ lack of ability to edit. When the bell rang to signal the end of lunch, out came the conclusion: students now are less intelligent because they are reading and writing less, and in so doing are communicating less effectively.

In essence, my colleagues were discussing what we are losing in terms of print: the forming of letters, handwriting, the physicality of writing. However, I wonder how much of an impact that makes on the world today, and 20 years from now, when the aforementioned children become immersed in, and begin to affect, society. Judging from the current trend, in 20 years’ time it is possible that most people will have access to some sort of keypad that makes the act of holding a pen obsolete. Yes, it is sad, because calligraphy is an art form in itself, yet it strikes me that having these tools allows us the time and brain power to do other things. Take for example graphic novels. While some graphic novels are heavily image-based, there are many that have a more balanced text-image ratio. In reading the latter, students are still reading text, and the images help them understand the story. Because comprehension is easier, students have the time and can focus brain processes to create deeper understanding, such as making connections with personal experiences, other texts or other forms of multimedia.

As for the communications bit, Web 2.0 is anything but antisocial. Everything from blogs and forums to Twitter and YouTube has a social aspect. People are allowed to rate, tag, bookmark and leave comments. Everything including software, data feeds, music and videos can be remixed or mashed up with other media. In academia, writing articles was previously a more isolated activity, but with the advent of forums like arxiv.org, scholarly articles can be posted and improved much more efficiently and effectively than through the formal process that occurs when an article is sent in to a journal. More importantly, scholarly knowledge is disseminated with greater ease and accuracy.

Corporations and educational institutions are beginning to see a large influx of, and reception for, Interactive White Boards (IWBs). Its large monitor, computer and internet links, and touch-screen abilities make the IWB the epitome of presentation tools. Content can be presented every which way (written text, word-processed text, websites, music, video), all (literally) at the user’s fingertips. The IWB’s capabilities allow for a new form of writing to occur: previously, writing was either with a writing instrument held in one’s hand or via typing on a keyboard. IWBs allow both processes to occur simultaneously, alternately, and interchangeably. If one so chooses, the individual can type and write at the same time! IWBs are particularly relevant to remediation of education and pedagogy itself, because the tool demands a certain level of engagement and interaction. A lesson on the difference between common and proper nouns that previously involved the teacher reading sentences and writing them on the board, then asking students to identify the nouns, could now potentially involve the students finding a text of interest, displaying it on the IWB, then identifying the two types of nouns by directly marking up the text with the pen or highlighter tools.

Effectively, the digital world is remediating our previous notion of text in the sense of books and print. Writing, in its organization, format, and role in culture, is being completely refashioned.

References

Bolter, J. D. (2001). Writing Space: Computers, Hypertext, and the Remediation of Print (2nd ed.). Mahwah, NJ: Lawrence Erlbaum.

December 13, 2009 No Comments

Storage and Performance

I attempted to work through how technologies’ characteristics of storage and performance affect fluency. It represents a start. Please follow this link to see my attempt.

December 6, 2009 No Comments

Hyperculture

The last two chapters of Bolter (2001) were an excellent choice to close our readings. As an aside, I would like to say how much I enjoyed the sequencing and intertextuality of the readings in this course. Most courses I have taken offered carefully chosen readings around the key ideas and topics, but none linked them so successfully and recursively as was done here. It was helpful to my own thinking and enjoyable to read Bolter on Ong, Kress cited in Dobson and Willinsky, and so on. I could cite such pairings all the way back to the first readings. It’s one of those subtle displays of good pedagogy that makes me wonder if I could do a better job selecting and sequencing the readings in my classes.

To return to Bolter, however, the argument that the technology used for writing changes our relationship to it (p. 189) seems almost self-evident. I know that my approach to writing changes when the tool is a pen versus a word processor. And it is largely for this reason that I avoid text messages. I worry how typing on a tiny keyboard with my thumbs to any great extent would affect my relationship with writing (which is already sufficiently adversarial). The discussion of ego and the nature of the mind itself as a writing space was also interesting. I’m not sure that I can follow where Bolter leads when he suggests that if the book was a good means of making known the workings of the Cartesian mind, hypertext remediates the mind (p. 197). That, it seems to me, accords too little to the ego and too much to networked communications—at least as they currently exist.

Bolter is certainly correct, however, when he asserts that electronic technologies are redefining our cultural relationships (p. 203). This is especially true for my students. Writing in 2001, Bolter preceded Facebook by at least three years, but he could have been doing Jane Goodall-style field research in my school (watching students use laptops, netbooks and handheld devices to wirelessly access Facebook) when he suggests that we are rewriting “our culture into a vast hypertext” (p. 206). My own efforts at navigating the online reading and writing spaces of the course were, I fear, somewhat hampered by having lived most of my life in “the late age of print.”

I didn’t post a lot of comments, although I attempted to chime in on the Vista discussions. What I realized late in the game was that I should have been more active in posting comments to the Weblog. The strange thing is that I enjoyed reading the weblog posts—and especially enjoyed reading the comments people made about my weblog postings. For some reason, however, that didn’t translate to reciprocating with comments in that space. Perhaps it’s because I’m not a blogger or much of a blog reader outside of my MEd classes. I still prefer more traditional (read: professional, authoritative) sources for news and opinion. Though, truth be told, I probably read as much news and opinion online as in print. It doesn’t hurt that the New York Times makes most of its content available online for free and that I have EBSCO and Proquest access at work. It might also be because the other online courses I’ve taken in the past two years tended to use the Vista/Blackboard discussion space as the discussion area, so I think of that as the “appropriate” space for that type of writing. It’s fascinating to analyze one’s own reading and writing behaviours and assumptions in light of what we’ve read and discussed. It also takes me again to my own practice as a teacher. When I next use wikis, for example, with my students, I will try to devise a way (survey, discussion tab in the wiki, etc.) to find out how they believe their previous online reading and writing experiences influence their interactions and contributions.

References

Bolter, J.D. (2001). Writing Space: Computers, Hypertext, and the Remediation of Print. Mahwah, NJ: Lawrence Erlbaum.

December 2, 2009 2 Comments

Making [Re]Connections

This is one of the last courses I will be taking in the program and as the journey draws to a close, this course has opened up new perspectives on text and technology. Throughout the term, I have been travelling (more than I expected) and as I juggled my courses with the travels, I began to pay more attention to how text is used in different contexts and cultures. Ong, Bolter and the module readings were great for passing time on my plane rides – I learned quite a lot!

I enjoyed working on the research assignment where I was able to explore the movement from icon to symbol. It gave me a more in-depth look at the significance of visual images, which Bolter discusses along with hypertext. Often, I am more used to working with text in a constrained space but after this assignment, I began thinking more about how text and technologies work in wider, more open spaces. By the final project, I found myself exploring a more open space where I could be creative – a place that is familiar to me yet a place that has much exploration left to it – the Internet.

Some of the projects and topics that were particularly related to this new insight include:

E-Type: The Visual Language of Typography

A Case for Teaching Visual Literacy – Bev Knutson-Shaw

Language as Cultural Identity: Russification of the Central Asian Languages – Svetlana Gibson

Public Literacy: Broadsides, Posters and the Lithographic Process – Noah Burdett

The Influence of Television and Radio on Education – David Berljawsky

Remediation of the Chinese Language – Carmen Chan

Braille – Ashley Jones

Despite the challenges of following the week-to-week discussions from Vista to Wiki to Blog and to the web in general, I was on track most of the time. I will admit I got confused a couple of times and I was more of a passive participant than an active one. Nevertheless, the course was interesting and insightful and it was great learning from many of my peers. Thank you everyone.

December 1, 2009 1 Comment

This is it!

I must be honest and admit that I simply could not take in all the assigned readings, the forum discussions, the wiki building, and the community weblog; however, I was able to learn a lot from what I could absorb. Reading Ong and Bolter was one of my favorite activities in ETEC540 for a couple of reasons. The first reason was that I really enjoyed seeing the contrasting views of these two authors, and the second reason was that the reading was on paper. Even though I have a nice new monitor, my eyes could only handle so much digital reading and I found myself craving an actual book. That was an interesting realization as we were learning about different writing spaces and the benefits and drawbacks of each.

Before reading Bolter, I found myself seeing eye-to-eye with Ong. His great divide perspective about technological determinism is so black and white and makes sense. Then we read Bolter, and his humanistic perspective, which does not definitively assign a cause-and-effect relationship to the remediation of writing, was slightly disconcerting at first. Being more of a humanist myself, I have come to agree more with Bolter’s ideas than Ong’s. By understanding their contrasting views of text technologies, I was able to gain a solid understanding of the implications of the evolution of writing all the way from papyrus to Web 2.0. To be honest, I was slightly impatient learning about all this history while I was reading about it, but I am glad to have as good a perspective on writing as I do now.

The collection of material created by my classmates on the community weblog is incredible. There are so many creative and innovative ideas incorporating much of what we have read about, along with lots of other knowledge brought to the table from outside this course. Our blog is a good example of the wisdom of the crowds, and thankfully most contributors have added appropriate tags and categorized their posts accordingly, making it easy to find connections among the contributions. I only wish I had access to our community weblog indefinitely for an instant source of inspiration!

Thanks to all of you for sharing all your knowledge and making this a very enjoyable course.

December 1, 2009 3 Comments

It’s Up To You

For my course project, I decided to create an interactive fictional story for students learning English as a foreign language. The target audience is a small to medium class of upper intermediate students between the ages of 15 and 25 who have recently learned the difference between direct and reported speech. Reading material at an appropriate level for non-native English students is hard to come by, especially in a non-English speaking country, and is greatly appreciated when available. As indicated in the directions to be read before students start their reading journey, the activity can be completed either individually or as a group. Often when there is a competitive element to activities such as these, students are much more motivated to participate as a group. It could potentially be completed remotely but would be best suited to a face-to-face-to-screen computer lab scenario.

This project is a product of my exploration of and experimentation with mixed-media hypertext as a teaching tool. Therefore the focus should be much more on the medium than on the actual content. The storyline is of course fictional and is relatively inconsequential other than providing some authentic dialogue (between the reader and their cellmate) and vocabulary appropriate to the students’ level. The story is somewhat shorter than I originally expected; however, as I was writing it, I realized that it would be better to start with a simple storyline, both for students and for a writer who are new to this genre and the tools to create it. “An interactive fiction is an extension of classical narrative media as it supposes a direct implication of spectators during the story evolution. Writing such a story is much more complex than a classical one, and tools at the disposal of writers remain very limited compared to the evolution of technology” (Donikian and Portugal, 2004). I also had an idea of how the story would go before I started writing, but the direction changed in the process, and I learned that creating a graphic storyboard is very helpful for organizing the different directions the story can take readers. There are multiple endings, yet students are redirected to try the story again until they reach “the end.”

Bush, Nelson, and Bolter were the three main authors we read in ETEC540 in order to gain an understanding of the origins, complexity and implications of hypertext. Both Bush and Nelson were primarily concerned with hypertext as a natural means to disseminate nonfictional information, while Bolter’s chapter on fictional hypertext is by far the longest chapter in Writing Space: Computers, Hypertext, and the Remediation of Print. In that chapter, he presents many literary techniques using hypertext to move readers between elements such as time, place, character, voice, plot, perspective, etc. Although these techniques are intriguing, their complexity is not appropriate for my target audience. Bolter’s analysis of hypertext goes further by pointing out that instead of being nonlinear, it is actually multilinear. He points out that all writing is linear, but hypertext can go in many different directions. Even in his chapter titled Hypertext and the Remediation of Print, he writes, “The principal task of authors of hypertextual fiction on the Web or in stand-alone form is to use links to define relationships among textual elements, and these links constitute the rhetoric of the hypertext” (Bolter, 2001, p. 29).

Unlike a traditional storyline, hypertextual storytelling gives students freedom over how they read it. This (perceived) control is a much more common characteristic of the way we interact with digital information today and therefore should be incorporated into classroom activities regularly. Putting the student in the proverbial driver’s seat is indicative of a constructivist teaching approach, which is especially effective when employing ICT in the classroom. However, as Donikian and Portugal observe, “Whatever degree of interactivity, freedom, and non linearity might be provided, the role that the interactor is assigned to play always has to remain inside the boundaries thus defined by the author, and which convey the essence of the work itself” (2004). For that reason, I have suggested that students actually modify and customize the story after they have read it. They could do that individually or in pairs, in class or for homework. Most often, the more control students are given, the more they are motivated to participate and learn. For their final project, they could create a complete story with multiple endings.

There are so many possibilities when writing fiction with hypertext and I have hardly scratched the surface in my first exploration into this genre. This project has given me a solid base from which to create longer and more complex pieces for wider teaching contexts. I hope you enjoy it and that it inspires you to experiment with this exciting medium as well. Click here to access the story or copy and paste this url: http://wiki.ubc.ca/Course:ETEC540/2009WT1/Assignments/MajorProject/ItsUpToYou

References:

Bolter, J.D. (2001). Writing Space: Computers, hypertext, and the remediation of print. Mahwah, NJ: Lawrence Erlbaum Associates, pp. 27-46, 121-160.

Bush, V. (1945). As we may think. The Atlantic Monthly, 176(1), 101-108.

Donikian, S. & Portugal, J. (2004). Writing Interactive Fiction Scenarii with DraMachina. Lecture Notes in Computer Science, pp. 101–112.

Nelson, T. (1999). Xanalogical structure, needed now more than ever: Parallel documents, deep links to content, deep versioning and deep re-use.

November 29, 2009 2 Comments

Hypermedia and Cybernetics: A Phenomenological Study

As with all other technologies, hypermedia technologies are inseparable from what is referred to in phenomenology as “lifeworlds”. The concept of a lifeworld is in part a development of an analysis of existence put forth by Martin Heidegger. Heidegger explains that our everyday experience is one in which we are concerned with the future and in which we encounter objects as parts of an interconnected complex of equipment related to our projects (Heidegger, 1962, p. 91-122). As such, we invariably encounter specific technologies only within a complex of equipment. Giving the example of a bridge, Heidegger notes that, “It does not just connect banks that are already there. The banks emerge as banks only as the bridge crosses the stream.” (Heidegger, 1993, p. 354). As a consequence of this connection between technologies and lifeworlds, new technologies bring about ecological changes to the lifeworlds, language, and cultural practices with which they are connected (Postman, 1993, p. 18). Hypermedia technologies are no exception.

To examine the kinds of changes brought about by hypermedia technologies it is important to examine the history not only of those technologies themselves but also of the lifeworlds in which they developed. Such a study will reveal that the development of hypermedia technologies involved an unlikely confluence of two subcultures. One of these subcultures belonged to the United States military-industrial-academic complex during World War II and the Cold War, and the other was part of the American counterculture movement of the 1960s.

Many developments in hypermedia can trace their origins back to the work of Norbert Wiener. During World War II, Wiener conducted research for the US military concerning how to aim anti-aircraft guns. The problem was that modern planes moved so fast that it was necessary for anti-aircraft gunners to aim their guns not at where the plane was when they fired the gun but where it would be some time after they fired. Where they needed to aim depended on the speed and course of the plane. In the course of his research into this problem, Wiener decided to treat the gunners and the gun as a single system. This led to his development of a multidisciplinary approach that he called “cybernetics”, which studied self-regulating systems and used the operations of computers as a model for these systems (Turner, 2006, p. 20-21).

This approach was first applied to the development of hypermedia in an article written by one of Norbert Wiener’s former colleagues, Vannevar Bush. Bush had been responsible for instigating and running the National Defense Research Committee (which later became the Office of Scientific Research and Development), an organization responsible for government funding of military research by private contractors. Following his experiences in military research, Bush wrote an article in the Atlantic Monthly addressing the question of how scientists would be able to cope with growing specialization and how they would collate an overwhelming amount of research (Bush, 1945). Bush imagined a device, which he later called the “Memex”, in which information such as books, records, and communications would be stored on microfilm. This information would be capable of being projected on screens, and the person who used the Memex would be able to create a complex system of “trails” connecting different parts of the stored information. By connecting documents into a non-hierarchical system of information, the Memex would to some extent embody the principles of cybernetics first imagined by Wiener.

Inspired by Bush’s idea of the Memex, researcher Douglas Engelbart believed that such a device could be used to augment the use of “symbolic structures” and thereby accurately represent and manipulate “conceptual structures” (Engelbart, 1962). This led him and his team at the Augmentation Research Center (ARC) to develop the “On-line system” (NLS), an ancestor of the personal computer which included a screen, QWERTY keyboard, and a mouse. With this system, users could manipulate text and connect elements of text with hyperlinks. While Engelbart envisioned this system as augmenting the intellect of the individual, he conceived of the individual as part of a system, which he referred to as an H-LAM/T system (a trained human with language, artefacts, and methodology) (ibid., p. 11). Drawing upon the ideas of cybernetics, Engelbart saw the NLS itself as a self-regulatory system in which engineers collaborated and, as a consequence, improved the system, a process he called “bootstrapping” (Turner, 2006, p. 108).

The military-industrial-academic complex’s cybernetic research culture also led to the idea of an interconnected network of computers, a move that would be key in the development of the internet and hypermedia. First formulated by J.C.R. Licklider, this idea was later executed by Bob Taylor with the creation of ARPANET (named after the defence department’s Advanced Research Projects Agency). As an extension of systems such as the NLS, such a system was a self-regulating network for collaboration also inspired by the study of cybernetics.

The late 1960s to the early 1980s saw hypermedia’s development transformed from a project within the US military-industrial-academic complex to a vision animating the American counterculture movement. This may seem remarkable for several reasons. Movements related to the budding counterculture in the early 1960s generally adhered to a view that developments in technology, particularly in computer technology, had a dehumanizing effect and threatened the authentic life of the individual. Such movements were also hostile to the US military-industrial-academic complex that had developed computer technologies, generally opposing American foreign policy and especially American military involvement in Vietnam. Computer technologies were seen as part of the power structure of this complex and were again seen as part of an oppressive dehumanizing force (Turner, 2006, p. 28-29).

This negative view of computer technologies more or less continued to hold in the New Left movements largely centred on the East Coast of the United States. However, a contrasting view began to grow in the counterculture movement developing primarily on the West Coast. Unlike the New Left movement, the counterculture became disaffected with traditional methods of social change, such as staging protests and organizing unions. It was thought that these methods still belonged to the traditional systems of power and, if anything, compounded the problems caused by those systems. To effect real change, it was believed, a shift in consciousness was necessary (Turner, 2006, p. 35-36).

Rather than seeing technologies as necessarily dehumanizing, some in the counterculture took the view that technology would be part of the means by which people liberated themselves from stultifying traditions. One major influence on this view was Marshall McLuhan, who argued that electronic media would become an extension of the human nervous system and would result in a new form of tribal social organization that he called the “global village” (McLuhan, 1962). Another influence, perhaps even stronger, was Buckminster Fuller, who took the cybernetic view of the world as an information system and coupled it with the belief that technology could be used by designers to live a life of authentic self-efficiency (Turner, 2006, p. 55-58).

In the late 1960s, many in the counterculture movement sought to effect the change in consciousness and social organization that they wished to see by forming communes (Turner, 2006, p. 32). These communes would embody the view that it was not through political protest but through the expansion of consciousness and the use of technologies (such as Buckminster Fuller’s geodesic domes) that a true revolution would be brought about. To supply members of these communes and other wayfarers in the counterculture with the tools they needed to make these changes, Stewart Brand developed the Whole Earth Catalog (WEC). The WEC provided lists of books, mechanical devices, and outdoor gear that were available through mail order for low prices. Subscribers were also encouraged to provide information on other items that would be listed in subsequent editions. The WEC was not a commercial catalogue in that it wasn’t possible to order items from the catalogue itself. It was rather a publication that listed various sources of information and technology from a variety of contributors. As Fred Turner argues (2006, p. 72-73), it was seen as a forum by means of which people from various different communities could collaborate.

Like many others in the counterculture movement, Stewart Brand immersed himself in cybernetics literature. Inspired by the connection he saw between cybernetics and the philosophy of Buckminster Fuller, Brand used the WEC to broker connections between ARC and the then flourishing counterculture (Turner, 2006, p. 109-10). In 1985, Stewart Brand and former commune member Larry Brilliant took the further step of uniting the two cultures and placed the WEC online in one of the first virtual communities, the Whole Earth ‘Lectronic Link or “WELL”. The WELL included bulletin board forums, email, and web pages and grew from a source of tools for counterculture communes into a forum for discussion and collaboration of any kind. The design of the WELL was based on communal principles and cybernetic theory. It was intended to be a self-regulating, non-hierarchical system for collaboration. As Turner notes (2005), “Like the Catalog, the WELL became a forum within which geographically dispersed individuals could build a sense of nonhierarchical, collaborative community around their interactions” (p. 491).

This confluence of military-industrial-academic complex technologies and the countercultural communities who put those technologies to use would form the roots of other hypermedia technologies. The ferment of the two cultures in Silicon Valley would result in the further development of the internet—the early dependence on text being supplanted by the use of text, image, and sound, transforming hypertext into full hypermedia. The idea of a self-regulating, non-hierarchical network would moreover result in the creation of the collaborative, social-networking technologies commonly denoted as “Web 2.0”.

This brief survey of the history of hypermedia technologies has shown that the lifeworld in which these technologies developed was one first imagined in the field of cybernetics. It is a lifeworld characterized by non-hierarchical, self-regulating systems and by the project of collaborating and sharing information. First of all, it is characterized by non-hierarchical organizations of individuals. Even though these technologies first developed in the hierarchical system of the military-industrial-academic complex, they grew within a subculture of collaboration among scientists and engineers (Turner, 2006, p. 18). Far from being strictly regimented, prominent figures in this subculture, including Wiener, Bush, and Engelbart, voiced concern over the possible authoritarian abuse of these technologies (ibid., p. 23-24).

The lifeworld associated with hypermedia is also characterized by the non-hierarchical dissemination of information. Rather than belonging to traditional institutions consisting of authorities who distribute information to others directly, these technologies involve the spread of information across networks. Such information is modified by individuals within the networks through the use of hyperlinks and collaborative software such as wikis.

The structure of hypermedia itself is also arguably non-hierarchical (Bolter, 2001, p. 27-46). Hypertext, and by extension hypermedia, facilitates an organization of information that admits of many different readings. That is, it is possible for the reader to navigate links and follow what Bush called different “trails” of connected information. Printed text generally restricts reading to one trail or at least very few trails, and lends itself to the organization of information in a hierarchical pattern (volumes divided into books, which are divided into chapters, which are divided into paragraphs, et cetera).

It is clear that the advent of hypermedia has been accompanied by changes in hierarchical organizations in lifeworlds and practices. One obvious example would be the damage that has been sustained by newspapers and the music industry. The phenomenological view of technologies as connected to lifeworlds and practices would provide a more sophisticated view of this change than either the technological determinist view that hypermedia itself has brought about changes in society or the instrumentalist view that the technologies are value neutral and that these changes have been brought about by choice alone (Chandler, 2002). It would rather suggest that hypermedia is connected to practices that largely preclude both the hierarchical dissemination of information and the institutions that are involved in such dissemination. As such, hypermedia technologies cannot but threaten institutions such as the music industry and newspapers. As Postman (1993) observes, “When an old technology is assaulted by a new one, institutions are threatened” (p. 18).

Critics of hypermedia technologies, such as Andrew Keen (2007), have generally focussed on this threat to institutions, arguing that such a threat undermines traditions of rational inquiry and the production of quality media. To some degree such criticisms are an extension of a traditional critique of modernity made by authors such as Allan Bloom (1987) and Christopher Lasch (1979). This would suggest that such criticisms are rooted in more perennial issues concerning the place of tradition, culture, and authority in society, and it is not likely that these issues will subside. However, it is also unlikely that there will be a return to a state of affairs before the inception of hypermedia. Even the most strident critics of “Web 2.0” technologies embrace certain aspects of them.

The lifeworld of hypermedia does not necessarily oppose traditional sources of expertise to the extent that the descendants of the fiercely anti-authoritarian counterculture may suggest, though. Advocates of Web 2.0 technologies often appeal to the “wisdom of crowds”, alluding to the work of James Surowiecki (2005). Surowiecki offers the view that, under certain conditions, the aggregation of the choices of independent individuals results in a better decision than one made by a single expert. He is mainly concerned with economic decisions, offering his theory as a defence of free markets. Yet this theory also suggests a general epistemology, one which would contend that the aggregation of the beliefs of many independent individuals will generally be closer to the truth than the view of a single expert. In this sense, it is an epistemology modelled on the cybernetic view of self-regulating systems. If it is correct, knowledge would be the result of a cybernetic network of individuals rather than a hierarchical system in which knowledge is created by experts and filtered down to others.

The main problem with the “wisdom of crowds” epistemology as it stands is that it does not explain the development of knowledge in the sciences and the humanities. Knowledge of this kind doubtless requires collaboration, but in any domain of inquiry this collaboration still requires the individual mastery of methodologies and bodies of knowledge. It is not the result of mere negotiation among people with radically disparate perspectives. These methodologies and bodies of knowledge may change, of course, but a study of the history of sciences and humanities shows that this generally does not occur through the efforts of those who are generally ignorant of those methodologies and bodies of knowledge sharing their opinions and arriving at a consensus.

As a rule, individuals do not take the position of global skeptics, doubting everything that is not self-evident or that does not follow necessarily from what is self-evident. Even if people would like to think that they are skeptics of this sort, to offer reasons for being skeptical about any belief they will need to draw upon a host of other beliefs that they accept as true, and to do so they will tend to rely on sources of information that they consider authoritative (Wittgenstein, 1969). Examples of the “wisdom of crowds” will also be ones in which individuals each draw upon what they consider to be established knowledge, or at least established methods for obtaining knowledge. Consequently, the wisdom of crowds is parasitic upon other forms of wisdom.

Hypermedia technologies and the practices and lifeworld to which they belong do not necessarily commit us to the crude epistemology based on the “wisdom of crowds”. The culture of collaboration among scientists that first characterized the development of these technologies did not preclude the importance of individual expertise. Nor did it oppose all notions of hierarchy. For example, Engelbart (1962) imagined the H-LAM/T system as one in which there are hierarchies of processes, with higher executive processes governing lower ones.

The lifeworlds and practices associated with hypermedia will evidently continue to pose a challenge to traditional sources of knowledge. Educational institutions have remained somewhat unaffected by the hardships faced by the music industry and newspapers due to their connection with other institutions and practices such as accreditation. If this phenomenological study is correct, however, it is difficult to believe that they will remain unaffected as these technologies take deeper roots in our lifeworld and our cultural practices. There will continue to be a need for expertise, though, and the challenge will be to develop methods for recognizing expertise, both in the sense of providing standards for accrediting experts and in the sense of providing remuneration for expertise. As this concerns the structure of lifeworlds and practices themselves, it will require a further examination of those lifeworlds and practices and an investigation of ideas and values surrounding the nature of authority and of expertise.

References

Bloom, A. (1987). The closing of the American mind. New York: Simon & Schuster.

Bolter, J. D. (2001). Writing space: Computers, hypertext, and the remediation of print (2nd ed.). New Jersey: Lawrence Erlbaum Associates.

Bush, V. (1945). As we may think. Atlantic Monthly. Retrieved from http://www.theatlantic.com/doc/194507/bush

Chandler, D. (2002). Technological or media determinism. Retrieved from http://www.aber.ac.uk/media/Documents/tecdet/tecdet.html

Engelbart, D. (1962). Augmenting human intellect: A conceptual framework. Menlo Park: Stanford Research Institute.

Heidegger, M. (1993). Basic writings. (D.F. Krell, Ed.). San Francisco: Harper Collins.

—–. (1962). Being and time. (J. Macquarrie & E. Robinson, Trans.). San Francisco: Harper Collins.

Keen, A. (2007). The cult of the amateur: How today’s internet is killing our culture. New York: Doubleday.

Lasch, C. (1979). The culture of narcissism: American life in an age of diminishing expectations. New York: W.W. Norton & Company.

McLuhan, M. (1962). The Gutenberg galaxy. Toronto: University of Toronto Press.

Postman, N. (1993). Technopoly: The surrender of culture to technology. New York: Vintage.

Surowiecki, J. (2005). The wisdom of crowds. Toronto: Anchor.

Turner, F. (2006). From counterculture to cyberculture: Stewart Brand, the Whole Earth Network, and the rise of digital utopianism. Chicago: University of Chicago Press.

—–. (2005). Where the counterculture met the new economy: The WELL and the origins of virtual community. Technology and Culture, 46(3), 485–512.

Wittgenstein, L. (1969). On certainty. New York: Harper.

November 29, 2009 No Comments

Electronic books: Not yet the remediation of print

The cost of digital alternatives has been a factor in their adoption. E-books read using a home computer are cost effective since few people in developed countries do not already own a PC capable of running the required software. However, the initial cost of purchasing a dedicated e-book reader, particularly for fiction, is still prohibitive. There are also lingering issues of portability, readability and battery life, though these are certain to be addressed over time. For non-fiction materials, particularly encyclopedias and reference works, however, portability works to the advantage of e-books and uptake has been faster and more enthusiastic. High school students are content to use electronic versions of reference works that are, in print form, typically larger, heavier, and available for shorter loan periods than other types of materials. And, in addition to 24/7 availability online, electronic reference books offer the advantages inherent in most digitized material: “Specifically, electronic documents are usually searchable, modifiable, and ‘enhanceable.’ ” (Anderson-Inman & Horney, 1997, p. 487). At the post-secondary level, where students purchase their books, electronic texts are popular with a majority of students for many of the same reasons (Hawkins, 2002, p. 45). “As with any remediation, however, the eBook must promise something more than the form it remediates: it must offer what can be construed as a more immediate, complete, or authentic experience for the reader” (Bolter, 2001, p. 80). Again, to date, this is most true for reference works and most notable for encyclopedias.

Fanfiction.Net (www.fanfiction.net Nov. 11, 2009) employs attributes of Web 2.0 popular with teenage readers. Fanfiction, as the name suggests, is a site that allows users to write chapters and scenes based on their favourite works (novels, plays, movies and television shows), and publish them to thousands of readers. Readers can browse these user creations by category and read them online as long as they have a computer with an Internet connection or access to one in a school or public library. The fan compositions reflect the interests and preoccupations of the time, and reach a wide audience through Internet publication facilitated by categorization, searchability and interactivity (ratings and reviews). Forums and communities allow readers and writers to discuss their works, the shows and books on which they are based and a wide range of related topics. English, literature and creative writing teachers would do well to co-opt the enthusiasm for this type of interaction with popular culture to encourage students to write for a much wider audience than that available in the classroom or the school. Interestingly, while Fanfiction incorporates many Web 2.0 affordances, it is also still linked strongly to the metaphor of the codex. Hawkins (2002) sums up the current situation well: “E-books will survive, but not in the consumer market—at least not until reading devices become much cheaper and much better in quality …. The e-book revolution has therefore become more of an evolution.” (p.48).

References

Anderson-Inman, L. & Horney, M. (1997). Electronic books for secondary students. Journal of Adolescent & Adult Literacy, 40 (6), 486-491.

Bolter, J.D. (2001). Writing Space: Computers, Hypertext, and the Remediation of Print. Mahwah, NJ: Lawrence Erlbaum.

Hawkins, D. T. (2002). Electronic Books: Reports of their death have been exaggerated. Online, 26(4), 42.

November 17, 2009 2 Comments

From tangible to electronic

Commentary #3 – In response to Bolter, Chapter 5 The Electronic Book from Writing Space

Much thought continues to go into the shift from the book to the electronic book, that is, an electronic entity that replaces the need for a tangible book. Bolter (2001), in Chapter 5 – The Electronic Book of his book Writing Space, explores the differences, similarities, practicalities and otherwise significant characteristics of both the book and the emerging electronic versions of it. This chapter begs the reader to contemplate the affordances of the electronic book and think critically about how the nature of the book will continue to evolve. Beyond unpacking the implications of the electronic novel, Bolter discusses the electronic book in general, and his discussion warrants a further look at the electronic version of textbooks and books used for research purposes. Interestingly, I find it uncomfortable to use the word “book” for the purpose of this commentary when referring to an electronic book. The word “book” itself denotes the sense of containment afforded by a cover, a back and a spine. The open-endedness of technology means that “book” is not the appropriate term; “electronic information” or “electronic resource” would be more appropriate when referring to electronic versions of the tangible book.

There is no question that the book in its tangible form represents a sense of permanency in comparison to its digital counterpart. We are re-envisioning the way we look at print resources and at their technological counterparts, and we have now come to have expectations of print as a result of what is available via technology. Bolter notes, “as we refashion the book through digital technology, we are diminishing the sense of closure that belonged to the codex and to print” (2001, p. 79). The very nature of technology requires that information is constantly evolving and there is a sense when visiting sites online that upon a return visit, changes will have been made. Working in special education, we refer to Individual Education Plans (IEPs) as “living documents”, that is, documents that don’t remain fixed but rather change and evolve as necessary. This parallel works well with Bolter’s discussion but raises the question of how evolving and living information can be appropriately organized. This is where hypertext becomes the defining technological feature that allows the information itself to dictate the nature of organization. Bolter says “its organization, the principles by which it controls other texts, and the choice of organizing principles depends on both the contemporary construction of knowledge and the contemporary technology of writing” (2001, p.84). The contemporary technology that we are currently using to further the precision by which we organize is hypertext, and that hypertext is creating parallels and links between information in a way that the tangible book simply cannot. A common challenge presented by the tangible book is the difficulty of collecting, in one location, enough sources to properly conduct research.

Whereas pre-technological forms of organization only allowed for a piece of information to be housed in one category, electronic affordances remove the issue of quantity. As a university student, I was often frustrated by the fact that the perfect book to help support my thesis would be on loan, and I would be forced to wait or settle for different, and sometimes seemingly lesser, information. By storing resources digitally and organizing them appropriately, I would argue that the nature of research would actually improve because of the greater access afforded by electronic information. Bolter’s Chapter 5 leads me to believe that the ideal situation would be to have access to a giant online encyclopedia that incorporates links to all related books on a subject (scanned in and searchable through the Google Books Project naturally) and all related sites through the use of hyperlinks. While Wikipedia exists as a popular encyclopedia, the openness it allows in editing articles does not make the Wiki conducive to facilitating electronic books.

Earlier in Writing Space, Bolter argues “in graphic form and function, the newspaper is coming to resemble a computer screen, as the combination of text, images, and icons turns the newspaper into a static snapshot of a World Wide Web page” (2001, p.51). While books may not be able to resemble a computer screen as easily as a newspaper, there is certainly a need for innovation in the presentation of books given the technological culture we now live in. While Kindle and other systems have continued the evolution of how a story is told, there needs to be a system by which informational texts can be made electronic and further improve the nature of how we come to know about a subject. Already, electronic textbooks contain links and virtual activities that have enhanced the learning experience. Bolter lays the framework for analyzing the nature of improvements that moving to the electronic book will afford and it is clear that electronic books will lead to greater access and therefore greater understanding of information now contained outside of the container of a tangible book.

References:

Bolter, Jay David. (2001). Writing space: Computers, hypertext, and the remediation of print [2nd edition]. Mahwah, NJ: Lawrence Erlbaum.

November 15, 2009 1 Comment

Images Before Computers

“My sense is that this is essentially a visual culture, wired for sound – but one where the linguistic element… is slack and flabby, and not to be made interesting without ingenuity, daring, and keen motivation” (Bolter, p. 47). Bolter quotes Jameson in The Breakout of the Visual for the purpose of illustrating how “very different theorists agree that our cultural moment – what we are calling the late age of print – is visual rather than linguistic” (Bolter, p. 48). One need only look around to see how prevalent images are in our everyday life, especially in outdoor advertising on billboards, buses and storefronts. The space is limited; therefore the images have to be much more compelling without actually using a lot of words.

Both Kress and Bolter assert that the use of image over print is a relatively new phenomenon which has happened as a result of computer use and hypertext. If we look at the history of advertising, we can see that the shift was occurring and becoming culturally entrenched before the wide use of computers. Bolter asserts that “in traditional print technology, images were contained by the verbal text.” (p.48) He is absolutely right when referring to books and magazine articles but when looking at printed ads, we can see that images play a more primal role.

Since we live in a commercially driven capitalist (market) society which is highly dependent on the sale of unnecessary items, much capital and research has gone into how to sell every product imaginable. It may have become a cliché, but only because it is true – sex sells. Here is a very interesting web site that highlights some of the more ludicrous examples. (http://inventorspot.com/articles/ads_prove_sex_sells_5576)

The United States and Canada are made up of many people, representing various diverse cultures and languages. Images are pretty much universal, although we do have to be careful as some may not be as universal as others. “The main point is that the relationship between word and image is becoming increasingly unstable, and this instability is especially apparent in popular American magazines, newspapers, and various forms of graphic advertising.” (p.49) I would assert that the relationship was already unstable when computers became prevalent. Computers allowed people the forum of discussion and quick access to the images which were previously viewed in isolation. There is no doubt that hypertext allows a further foray into the world of image and has freed the image from the binding of the text. Kress points out the obvious and is not always correct, as when he states that “[the] chapters are numbered, and the assumption is that there is an apparent building from chapter to chapter: [they] are not to be read out of order. [at] the level of chapters, order is fixed.” (Kress, p.3) It is a mistake to limit our study of the remediation of print by simply looking at text in books. If we expand our focus, as we must to properly discuss the subject, and include magazines and printed ads, evidence clearly points to the fact that the image was becoming more dominant before the prevalent use of computers. Like the authors of books, those who wrote for magazines also knew “about [their] audience and … subject matter” (Kress p.3). Unlike books, where the order is very rigid, a magazine can be read in any order you like.

Bolter acknowledges the influence of magazines and advertising on remediating text and images by stating that in Life magazine and People magazine “the image dominates the text, because the image is regarded as more immediate, closer to the reality presented.”

Bolter’s use of the shaving picture from the USA Today is an excellent example of images becoming central in print. However, I think he is being generous when he states that “designers no longer trusted the arbitrary symbolic structure of the graph to sustain its meaning … .” (Bolter, p.53) I see it more as pandering to the lowest common denominator. What the designers do not trust is the public’s ability to read a graph, rather than the graph’s ability to “sustain its meaning.” (Bolter, p. 53) It seems that the need to dumb text down is a comment on the writer’s lack of faith in the public’s ability to interpret not only text but also images. Images are becoming more and more basic and try to appeal to our primal senses and needs – for instance, using sex as a vehicle to increase sales.

The existence of the different entry points speaks of a sense of insecurity about the visitors. This could also be described as a fragmentation of the audience—who are now no longer just readers but visitors, a different action being implied in the change of name, as Kress points out.

Kress succinctly addresses the power of the image in the example of Georgia’s drawing of her family. We can clearly see the differences and interpret them the way the creator of the drawing intended. The placement of the little girl in the drawing tells us about how she views herself in terms of her place in the family. There are no words and none are needed for the image really is worth a thousand words.

Perhaps it is fitting that, in this fast-paced world we live in, we are moving away from the art of writing, which takes time both to produce and to consume, to the image, which takes time to produce but is designed to be consumed very quickly. However, to tie this change directly to the rise in the use of computers is to blind oneself to the rich legacy of printed images in advertising that came before.

Bolter, J. D. (2001). Writing space: Computers, hypertext, and the remediation of print [2nd edition]. Mahwah, NJ: Lawrence Erlbaum.

Kress, G. (2005). Gains and losses: New forms of texts, knowledge, and learning. Computers and Composition, 22(1), 5-22.

Inventor Spot. (2009). 15 Ads That Prove Sex Sells… Best? Retrieved 12 November, 2009, from http://inventorspot.com/articles/ads_prove_sex_sells_5576

November 15, 2009 1 Comment

RipMixFeed using del.icio.us

For the RipMixFeed activity I collected a set of resources using the social bookmarking tool del.icio.us. Many of us have already used this application in other courses to create a class repository of resources or to keep track of links relevant to our research projects. What I like about this tool is that the user can collect all of their favourite links, annotate them and then easily search them according to the tagged words that they created. This truly goes beyond the limitations of web browser links.

For this activity I focused on finding resources specifically related to digital and visual literacy and multiliteracies. To do this I conducted web searches as well as searches of other del.icio.us users’ links. As there are so many resources – too many for me to adequately peruse – I have subscribed to the tag ‘digitalliteracy’ in del.icio.us so I can connect with others tagging related information. You can find my del.icio.us page at: http://delicious.com/nattyg

Use the tags ‘Module4’ and ‘ETEC540’ to find the selected links or just search using ETEC540 to find all of my links related to this course.

A couple of resources that I want to highlight are:

- Roland Barthes: Understanding Text (Learning Object)

Essentially this is a self-directed learning module on Roland Barthes’s ideas on semiotics. The section on Readerly and Writerly Texts is particularly relevant to our discussions on printed and electronic texts.

- Howard Rheingold on Digital Literacies

Rheingold states that a lot of people are not aware of what digital literacy is. He briefly discusses five different literacies needed today. Many of these skills are not taught in schools, so he poses the question: how do we teach these skills?

- New Literacy: Document Design & Visual Literacy for the Digital Age Videos

University of Maryland University College faculty member David Taylor created a five-part video series on digital literacy. For convenience’s sake, here is Part II, where he discusses the shift to the ‘new literacy’. Toward the end of the video, Taylor (2008) makes an interesting statement that “today’s literacy means being capable of producing fewer words, not more”. This made me think of Bolter’s (2001) notion of the “breakout of the visual” and the shift from textual to visual ways of knowing.

Alexander (2006) suggests that social bookmarking can work to support “collaborative information discovery” (p. 36). I have no people in my Network as of yet. I think it would be valuable to connect with some of my MET colleagues, so if you would like to share del.icio.us links, let’s connect! My username is nattyg.

References

Alexander, B. (2006). Web 2.0: A new wave for teaching and learning? Educause Review, Mar/Apr, 33-44.

Bolter, J.D. (2001). Writing space: Computers, hypertext and the remediation of print. London: Lawrence Erlbaum Associates, Publishers.

Taylor, D. (2008). The new literacy: document design and visual literacy for the digital age: Part II. Retrieved November 13, 2009, from https://www.youtube.com/watch?v=RmEoRislkFc

November 14, 2009 2 Comments

Speech Recognition: Will it change the way we write?

At the beginning of his book, Bolter (2001, p.xiii) states, “At present, however, it seems to me that the computer is not leading to a new kind of orality, but rather to an increased emphasis on visual communication”. While it is true that we are seeing an increase in visual modes of representation, we are also seeing an increase in the availability and usefulness of speech recognition technologies. Perhaps the “pendulum” is also swinging back towards a new kind of orality (Bolter, 2001, p.xiii).

Speech recognition, also known as voice recognition, converts speech to text and allows users to verbally direct their computer to perform specific commands. With the use of a microphone (either an external one or one internally built into the computer) words dictated are reproduced into a word processor document. Speech recognition allows the user to verbally specify punctuation, spell out acronyms, move the cursor, format texts, change fonts, save files (and more), all without touching the keyboard. Errors can be corrected by speaking the name of the word which is incorrect and then saying “correct that”. This will produce a list of words that closely match and one simply needs to speak aloud the number of the line that the correct word is on, followed by “click ok” and the word is corrected.

Speech recognition technologies are extremely beneficial for people with learning disabilities and those who are physically disabled. A relative of mine, who was paralyzed from the neck down in a motorcycle accident, is able to dictate and send emails and update his Facebook page by using speech recognition. This technology could also help young children learn to read and write by allowing them to see their spoken words turn into written words on a computer screen. Cavanagh (2009) found that “Students who use speech recognition are writing more, writing independently, spelling correctly, using longer words, using more complex written language and writing thoughts that have never been written before”. Another advantage, for people of all ages, is the ease of transcribing conversations, interviews, notes and other information into a word document, enabling easy access to all sections of the material.

With the use of speech recognition, we will no longer be restricted by the speed at which we can write or type, but by the speed at which we can produce and form ideas and thoughts within our minds. Speech recognition would allow us to reduce distractions and keep our minds on what we are saying. This would be like a “funnel” which according to Ronald Kellogg (1989) is, “an aid that channels the writer’s attention into only one or two processes”. By removing the desire to edit while speaking, speech recognition allows for the uninterrupted flow of ideas into words.

Clearly there are some valuable advantages to the use of speech recognition technology. However, is it simply another method of getting our thoughts into words on a document, or does it alter the way we write? McLuhan’s (1994) expression, “The medium is the message”, seems to say that, as dictation is a different medium from writing, each would create a different final product.

Gould, Conti, and Hovanyecz (1983) found that participants performed at least as well when dictating to the listening typewriter as they did when writing. John Gould’s (1978) experiment found that after considerable experience with dictation, participants were 20-65% faster at dictating than at writing compositions of similar quality.

As stated by Willard (1997), “Speech and writing are fundamentally different; people seldom speak as they write, or write as they speak (unless they are preparing a written copy of a speech)”. When dictating and using speech recognition technologies, one might have the tendency to speak more formally, in a style more ‘suitable’ for writing. If the typically more informal style of conversation is used instead, this would change the style of writing produced. However, speech recognition is generally used to get main ideas and thoughts onto a word document, with most users then editing and re-writing sections of their original draft.

Dictation and writing are different but effective methods of getting words on ‘paper’. As Gould (1978) states, “Composition is still the fundamental skill necessary for quality writing, and method of composition is of secondary importance”. Each individual’s writing style is different; therefore, it will be up to the individual who uses speech recognition to decide if and how it changes and defines their own personal style of writing.

References:

Bolter, J. D. (2001). Writing space: Computers, hypertext, and the remediation of print [2nd edition]. Mahwah, NJ: Lawrence Erlbaum.

Cavanagh, C.A. (2009). Speech Recognition Trial Protocol. Closing the Gap, 26(5), 8-11. Retrieved from:

Gould, J. D. (1978). How experts dictate. Journal of Experimental Psychology: Human Perception and Performance. 4(4), 648-661.

Gould, J., Conti, J. & Hovanyecz, T. (1983). Composing Letters with a Simulated Listening Typewriter. Communications of the ACM, 26, 295-308.

Kellogg, R. T. (1989). Idea Processors: Computer Aids for Planning and Composing Text. Computer Writing Environments: Theory, Research, and Design. Ed. Bruce Britton and Shawn Glynn. Hillsdale, New Jersey: Lawrence Erlbaum Associates. 57-92.

McLuhan, M. (1994). Understanding media: The extensions of man. Cambridge, MA: MIT Press.

Willard, E. (1997). Technical Speaking? Automatic Speech Recognition and Technical Writing. Cal Poly State University. Retrieved from: http://saoki.site0.com/poly/stc/essays/ewillard.html

November 3, 2009 4 Comments

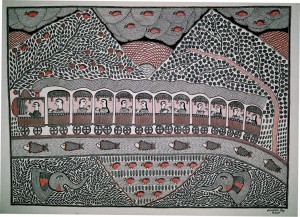

Mithila Art as a Communication Technology

Long before there were computers in most of our homes, there was Mithila Art in the homes of what is now India and Nepal. Originally, this folk art form mainly consisted of lively murals painted on the walls of homes in rural villages. But it was much more than simple art for art’s sake. “Mithila painting is part decoration, part social commentary, recording the lives of rural women in a society where reading and writing are reserved for high-caste men” (Armington, Bindloss & Mayhew, 2006, p. 315). This was art that served as a communication technology, giving a voice to powerless rural women.

Historical and Cultural Context

This art form acquired its name from the kingdom of Mithila, where it originated around the seventh century A.D. At that time, the region was a vast plain located primarily in what is now eastern India as well as in southern Nepal. However, the cultural center and capital of the region was in what is now the city of Janakpur, Nepal, only 20 kilometers from the Indian border. Janakpur is of course the home of Janakpur painting, while the town of Madhubani, India, is home to paintings of the same name. Mithila art consists of both kinds of paintings, of which Madhubani paintings are the more common.

It is said that Mithila art was born when King Janak commissioned artists to create paintings at the time of the marriage of his daughter, Sita, to the god Lord Ram. This might have to do with the fact that most Madhubani paintings are created during festivals celebrating marriages and births, religious and social events and ceremonies of the Maithil community. Others say that, “Its original inspiration emerged out of the local women’s craving for religiousness and an intense desire to be one with god” (Janakpur Women’s Development Center, n.d.). Exactly how it began is not clear, but what it became after being passed down through many generations surely is.

“Mithila is a wonderful land where art and scholarship, laukika and Vedic traditions flourished together in complete harmony between the two” (Mishra, 2009, 4). This harmony was uncommon during this time in many other regions in southern Asia as well as the rest of the world. The general attitude toward artists in this region was one of utmost respect, and they were even compared with gods. That could be a major reason why women in ancient Indian society, who were traditionally regarded as much less significant than men, adopted Mithila art as well as other art forms not only as a communication technology but also as a means of empowerment.

“Picture writing is perhaps constructed culturally (even today) as closer to the reader, because it does not depend upon the intermediary of spoken language and seems to reproduce places and events directly” (Bolter, 2001, p. 59). The murals were originally painted during important community events as a kind of subjective snapshot as well as social commentary. This was a positive way for rural women to have a voice and to be heard.

Implications for Literacy and Education

In a communicative context, ‘literacy’ is commonly defined as “the ability to read and write” where to ‘write’ is defined as to “mark (letters, words, or other symbols) on a surface, with a pen, pencil, or similar implement” (Oxford University Press, 2009). So although most Mithila artists were not literate in phonetic writing, they were exceptionally literate in picture writing. As with oral communication, this type of literacy served to bring people together and strengthen their communities. “As we look back through thousands of years of phonetic literacy, the appeal of traditional picture writing is its promise of immediacy. By the standard of phonetic writing, however, picture writing lacks narrative power” (Bolter, 2001, p. 59). The “narrative power” to which Bolter refers is the ability of phonetic writing to convey detailed information from a first person perspective. Unfortunately, this ability also tends to distance those in communication rather than bring them together, as picture writing does.

Bolter goes on to write that, “Sometimes, particularly when the picture text is a narrative, the elements seem to aim for the specificity of language. Sometimes, these same elements move back into a world of pure form and become shapes that we admire for their visual economy” (2001, p. 63). This explains the duality of this art form as both a communication technology and an aesthetic art form. Another perspective on visual communication technologies is that, “Display is, in respect to its prominence and significance and ubiquity, the analogue of narrative” (Kress, 2005, p. 14). So while Mithila paintings perhaps lacked the ability to convey a first person narrative, they narrowed the gap between the composer and her audience in a beautiful visual mode of communication.

For the Maithil artists, the ability to express their desires, dreams, expectations, hopes and aspirations to their community in (picture) writing through their painting was most likely much more valuable than communicating detailed information to outsiders by means of phonetic writing. “Unlike words, depictions are full of meaning: they are always specific. So on the one hand there is a finite stock of words – vague, general, nearly empty of meaning; on the other hand there is an infinitely large potential of depictions – precise, specific, and full of meaning” (Kress, 2005, pp. 15-16). The meaning they conveyed through their art was unmistakable and accessible to all. In this case, picture writing literacy did not lead to phonetic or alphabetic writing literacy. It did, however, require education.

As all writing is communication technology, Mithila art required education to master the particular tools, materials and techniques of this unique style of picture writing. Most of these artists were not formally educated and were illiterate in the ways of phonetic reading and writing. But they did have to learn about the range of natural hues that could be derived from preparations and combinations of clay, bark, flowers and berries as well as how to fashion brushes from bamboo twigs and small pieces of cloth (Mishra, 2009).

Conclusion

Although Mithila art did not directly lead ancient India to a conventional sense of literacy nor to formal education of the masses, it did give a voice to the voiceless. As a communication technology, it provided something for those artists that was and remains a critical element of their society: a heightened consciousness. As Ong writes, “Technologies are not mere exterior aids but also interior transformations of consciousness, and never more than when they affect the word. Such transformations can be uplifting. Writing heightens consciousness” (2002, p. 81).

Mithila art still exists today, but unfortunately it has been commercialized with the introduction of tourism. Much of what this art form and communication technology was and did for these people has been lost. Most pieces are painted on paper, and many are made-to-order scenes that have nothing to do with Maithil culture, although selling their artwork has proved an increasing source of income for the artists and has in turn improved their quality of life. With the support and guidance of development organizations, groups are now promoting the consumption of Vitamin A, voting, safe sex, and saying “no” to drugs to their communities (Janakpur Women’s Development Center, n.d.). So although it has changed considerably over many generations, Mithila art is still a meaningful communication technology.

References

Armington, S., Bindloss, J., & Mayhew, B. (2006). Lonely Planet: Nepal. Oakland, CA: Lonely Planet.

Bolter, J. D. (2001). Writing Space: Computers, Hypertext, and the Remediation of Print. Mahwah, NJ: Lawrence Erlbaum Associates, Inc.

Janakpur Women’s Development Center. (n.d.). Retrieved October 3, 2009, from http://web.mac.com/nadjagrimm/iWeb/JWDC/Welcome.html

Kress, G. (2005). Gains and losses: New forms of text, knowledge, and learning. Computers and Composition, 22, 5-22. Retrieved from http://www.sciencedirect.com/science

Mishra, K. K. (2009). Mithila Paintings: Past, Present and Future. Retrieved October 4, 2009, from Indira Gandhi National Centre for the Arts Web site: http://ignca.nic.in/

Mithila Art – Madhubani Painting and Beyond. (n.d.). Retrieved October 3, 2009, from http://mithilaart.com/default.aspx

Ong, W. J. (2002). Orality and Literacy. New York: Routledge.

Oxford University Press. (2009). Ask Oxford. Retrieved October 10, 2009, from http://www.askoxford.com/

November 2, 2009 1 Comment

William Blake and the Remediation of Print

One might be inclined to view William Blake’s illuminated books as throwbacks to mediaeval illuminated manuscripts. Yet they should rather be understood as “remediating” older media. According to Bolter (2001, p. 23), remediation occurs when a new medium pays homage to an older medium, borrowing and imitating features of it, and yet also stands in opposition to it, attempting to improve on it. In the case of Blake’s illuminated books, one of the older media being remediated was the mediaeval illuminated manuscript, but another medium being remediated was the printed book, which in Blake’s time had already been in use for three centuries.

Blake adopted the way in which the richly illustrated texts of mediaeval illuminated manuscripts combined the iconic and the symbolic so that the former illumined the meaning of the latter, the images revealing the spiritual significance of the scripture. Blake also seized upon an aspect of illuminated manuscripts which would later impress John Ruskin as well (Keep, McLaughlin, & Parmar, 1993-2000): the way in which they served as vehicles for self-expression. The designs of manuscripts such as the Book of Kells and the Book of Lindisfarne, for instance, reflected the native artistic styles of Ireland and Northumbria and often depicted the native flora and fauna of those lands as well. Blake also adopted some of the styles and idioms of illustration found in mediaeval illuminated manuscripts, producing images in some cases quite similar to ones found in mediaeval scriptures and bestiaries (Blunt, 1943, p. 199). It seems that he also embraced the idea, embodied in the creation of illuminated manuscripts, that the written word can be something sacred and powerful and that it is therefore something to be adorned with gold and lively colours.

Blake’s illuminated books broke with the medium of mediaeval manuscripts mainly by virtue of that which they adopted from the medium of the printed book. Blake produced his illuminated books first by making copper plates engraved with images and text, deepening these engravings with the help of corrosive chemicals. He then used inks to form impressions of the plates on sheets of paper, often colouring the impressed images further with watercolour paints (Blake, 1967, pp. 11-12). His use of the copper plates and inks bore similarities to the use of movable type and ink to create printed books. For many years it was believed that, despite this similarity, Blake developed his illuminated books partly as a reaction against the mass production of books, hearkening back to the methods of mediaeval craftsmen – specifically the artists who produced illuminated manuscripts – who created unique items rather than mass-produced articles. Consequently, it was believed that after he produced the copper plates for the illuminated books he created only individual books on commission. This belief, first championed by 19th-century writers who claimed William Blake as a predecessor (Symmons, 1995), has recently been overturned, however, by the work of Joseph Viscomi. As a scholar and printer who attempted to physically reproduce the methods that Blake employed to create his illuminated books, Viscomi concluded that Blake mass-produced these books in small editions of about ten or more books each (Adams, 1995, p. 444).

The primary way in which the illuminated book was meant to improve on the printed book did not lie in the avoidance of mass production, but rather in the relation between the image and the word. In printed books, engraved images could be included with the text, but as the text had to be formed with movable type the image had to be included as something separate and additional (Bolter, 2001, p. 48). In Blake’s illuminated books, in contrast, the written word belonged to the whole image first engraved on the copper plate and then transferred to paper. It participated in the imaginative power of the perceived image, rather than just retaining a purely conceptual meaning. As with the text of mediaeval illuminated manuscripts, the words in Blake’s illuminated books often merge the iconic and the symbolic (Bigwood, 1991). For example, in plate 22 of Blake’s The Marriage of Heaven and Hell, the description of the devil’s speech trails off into a tangle of diabolical thorns. Furthermore, the words are produced in the same colours used in the images to which they belong, and partake in their significance—light watercolours being used in the first edition of the joyous Songs of Innocence and dark reticulated inks being used in the gloomier Songs of Experience (Fuller, 2003, p. 263). As John Ruskin later observed, this ability to use colour in the text of illuminated books made it a form of writing that uniquely expressed its creator’s imagination (Ruskin, 1888, p. 99).