As with all other technologies, hypermedia technologies are inseparable from what is referred to in phenomenology as “lifeworlds”. The concept of a lifeworld is in part a development of an analysis of existence put forth by Martin Heidegger. Heidegger explains that our everyday experience is one in which we are concerned with the future and in which we encounter objects as parts of an interconnected complex of equipment related to our projects (Heidegger, 1962, p. 91-122). As such, we invariably encounter specific technologies only within a complex of equipment. Giving the example of a bridge, Heidegger notes, “It does not just connect banks that are already there. The banks emerge as banks only as the bridge crosses the stream” (Heidegger, 1993, p. 354). As a consequence of this connection between technologies and lifeworlds, new technologies bring about ecological changes to the lifeworlds, language, and cultural practices with which they are connected (Postman, 1993, p. 18). Hypermedia technologies are no exception.

To examine the kinds of changes brought about by hypermedia technologies it is important to examine the history not only of those technologies themselves but also of the lifeworlds in which they developed. Such a study will reveal that the development of hypermedia technologies involved an unlikely confluence of two subcultures. One of these subcultures belonged to the United States military-industrial-academic complex during World War II and the Cold War, and the other was part of the American counterculture movement of the 1960s.

Many developments in hypermedia can trace their origins back to the work of Norbert Wiener. During World War II, Wiener conducted research for the US military concerning how to aim anti-aircraft guns. The problem was that modern planes moved so fast that it was necessary for anti-aircraft gunners to aim their guns not at where the plane was when they fired the gun but where it would be some time after they fired. Where they needed to aim depended on the speed and course of the plane. In the course of his research into this problem, Wiener decided to treat the gunners and the gun as a single system. This led to his development of a multidisciplinary approach that he called “cybernetics”, which studied self-regulating systems and used the operations of computers as a model for these systems (Turner, 2006, p. 20-21).

This approach was first applied to the development of hypermedia in an article written by one of Norbert Wiener’s former colleagues, Vannevar Bush. Bush had been responsible for instigating and running the National Defense Research Committee (which later became the Office of Scientific Research and Development), an organization responsible for government funding of military research by private contractors. Following his experiences in military research, Bush wrote an article in the Atlantic Monthly addressing the question of how scientists would be able to cope with growing specialization and how they would collate an overwhelming amount of research (Bush, 1945). Bush imagined a device, which he later called the “Memex”, in which information such as books, records, and communications would be stored on microfilm. This information would be capable of being projected on screens, and the person who used the Memex would be able to create a complex system of “trails” connecting different parts of the stored information. By connecting documents into a non-hierarchical system of information, the Memex would to some extent embody the principles of cybernetics first imagined by Wiener.

Inspired by Bush’s idea of the Memex, researcher Douglas Engelbart believed that such a device could be used to augment the use of “symbolic structures” and thereby accurately represent and manipulate “conceptual structures” (Engelbart, 1962). This led him and his team at the Augmentation Research Center (ARC) to develop the “On-Line System” (NLS), an ancestor of the personal computer which included a screen, QWERTY keyboard, and a mouse. With this system, users could manipulate text and connect elements of text with hyperlinks. While Engelbart envisioned this system as augmenting the intellect of the individual, he conceived of the individual as part of a system, which he referred to as an H-LAM/T system (a trained human with language, artefacts, and methodology) (ibid., p. 11). Drawing upon the ideas of cybernetics, Engelbart saw the NLS itself as a self-regulatory system in which engineers collaborated and, as a consequence, improved the system, a process he called “bootstrapping” (Turner, 2006, p. 108).

The military-industrial-academic complex’s cybernetic research culture also led to the idea of an interconnected network of computers, a move that would be key in the development of the internet and hypermedia. First formulated by J.C.R. Licklider, this idea was later executed by Bob Taylor with the creation of ARPANET (named after the defence department’s Advanced Research Projects Agency). As an extension of systems such as the NLS, such a system was a self-regulating network for collaboration also inspired by the study of cybernetics.

The late 1960s to the early 1980s saw hypermedia’s development transformed from a project within the US military-industrial-academic complex to a vision animating the American counterculture movement. This may seem remarkable for several reasons. Movements related to the budding counterculture in the early 1960s generally adhered to a view that developments in technology, particularly in computer technology, had a dehumanizing effect and threatened the authentic life of the individual. Such movements were also hostile to the US military-industrial-academic complex that had developed computer technologies, generally opposing American foreign policy and especially American military involvement in Vietnam. Computer technologies were seen as part of the power structure of this complex and were again seen as part of an oppressive dehumanizing force (Turner, 2006, p. 28-29).

This negative view of computer technologies more or less continued to hold in the New Left movements largely centred on the East Coast of the United States. However, a contrasting view began to grow in the counterculture movement developing primarily on the West Coast. Unlike the New Left movement, the counterculture became disaffected with traditional methods of social change, such as staging protests and organizing unions. It was thought that these methods still belonged to the traditional systems of power and, if anything, compounded the problems caused by those systems. To effect real change, it was believed, a shift in consciousness was necessary (Turner, 2006, p. 35-36).

Rather than seeing technologies as necessarily dehumanizing, some in the counterculture took the view that technology would be part of the means by which people liberated themselves from stultifying traditions. One major influence on this view was Marshall McLuhan, who argued that electronic media would become an extension of the human nervous system and would result in a new form of tribal social organization that he called the “global village” (McLuhan, 1962). Another influence, perhaps even stronger, was Buckminster Fuller, who took the cybernetic view of the world as an information system and coupled it with the belief that technology could be used by designers to live a life of authentic self-efficiency (Turner, 2006, p. 55-58).

In the late 1960s, many in the counterculture movement sought to effect the change in consciousness and social organization that they wished to see by forming communes (Turner, 2006, p. 32). These communes would embody the view that it was not through political protest but through the expansion of consciousness and the use of technologies (such as Buckminster Fuller’s geodesic domes) that a true revolution would be brought about. To supply members of these communes and other wayfarers in the counterculture with the tools they needed to make these changes, Stewart Brand developed the Whole Earth Catalog (WEC). The WEC provided lists of books, mechanical devices, and outdoor gear that were available through mail order for low prices. Subscribers were also encouraged to provide information on other items that would be listed in subsequent editions. The WEC was not a commercial catalogue in that it wasn’t possible to order items from the catalogue itself. It was rather a publication that listed various sources of information and technology from a variety of contributors. As Fred Turner argues (2006, p. 72-73), it was seen as a forum by means of which people from different communities could collaborate.

Like many others in the counterculture movement, Stewart Brand immersed himself in cybernetics literature. Inspired by the connection he saw between cybernetics and the philosophy of Buckminster Fuller, Brand used the WEC to broker connections between ARC and the then flourishing counterculture (Turner, 2006, p. 109-10). In 1985, Stewart Brand and former commune member Larry Brilliant took the further step of uniting the two cultures and placed the WEC online in one of the first virtual communities, the Whole Earth ‘Lectronic Link or “WELL”. The WELL included bulletin board forums, email, and web pages and grew from a source of tools for counterculture communes into a forum for discussion and collaboration of any kind. The design of the WELL was based on communal principles and cybernetic theory. It was intended to be a self-regulating, non-hierarchical system for collaboration. As Turner notes (2005), “Like the Catalog, the WELL became a forum within which geographically dispersed individuals could build a sense of nonhierarchical, collaborative community around their interactions” (p. 491).

This confluence of military-industrial-academic complex technologies and the countercultural communities who put those technologies to use would form the roots of other hypermedia technologies. The ferment of the two cultures in Silicon Valley would result in the further development of the internet—the early dependence on text being supplanted by the use of text, image, and sound, transforming hypertext into full hypermedia. The idea of a self-regulating, non-hierarchical network would moreover result in the creation of the collaborative, social-networking technologies commonly denoted as “Web 2.0”.

This brief survey of the history of hypermedia technologies has shown that the lifeworld in which these technologies developed was one first imagined in the field of cybernetics. It is a lifeworld characterized by non-hierarchical, self-regulating systems and by the project of collaborating and sharing information. First of all, it is characterized by non-hierarchical organizations of individuals. Even though these technologies first developed in the hierarchical system of the military-industrial-academic complex, they grew within a subculture of collaboration among scientists and engineers (Turner, 2006, p. 18). This subculture was not strictly regimented, and prominent figures in it, including Wiener, Bush, and Engelbart, voiced concern over the possible authoritarian abuse of these technologies (ibid., p. 23-24).

The lifeworld associated with hypermedia is also characterized by the non-hierarchical dissemination of information. Rather than belonging to traditional institutions consisting of authorities who distribute information to others directly, these technologies involve the spread of information across networks. Such information is modified by individuals within the networks through the use of hyperlinks and collaborative software such as wikis.

The structure of hypermedia itself is also arguably non-hierarchical (Bolter, 2001, p. 27-46). Hypertext, and by extension hypermedia, facilitates an organization of information that admits of many different readings. That is, it is possible for the reader to navigate links and follow what Bush called different “trails” of connected information. Printed text generally restricts reading to one trail or at least very few trails, and lends itself to the organization of information in a hierarchical pattern (volumes divided into books, which are divided into chapters, which are divided into paragraphs, et cetera).

It is clear that the advent of hypermedia has been accompanied by changes in hierarchical organizations in lifeworlds and practices. One obvious example would be the damage that has been sustained by newspapers and the music industry. The phenomenological view of technologies as connected to lifeworlds and practices would provide a more sophisticated view of this change than the technological determinist view that hypermedia itself has brought about changes in society and the instrumentalist view that the technologies are value neutral and that these changes have been brought about by choice alone (Chandler, 2002). It would rather suggest that hypermedia is connected to practices that largely preclude both the hierarchical dissemination of information and the institutions that are involved in such dissemination. As such, they cannot but threaten institutions such as the music industry and newspapers. As Postman (1993) observes, “When an old technology is assaulted by a new one, institutions are threatened” (p. 18).

Critics of hypermedia technologies, such as Andrew Keen (2007), have generally focussed on this threat to institutions, arguing that such a threat undermines traditions of rational inquiry and the production of quality media. To some degree such criticisms are an extension of a traditional critique of modernity made by authors such as Allan Bloom (1987) and Christopher Lasch (1979). This would suggest that such criticisms are rooted in more perennial issues concerning the place of tradition, culture, and authority in society, and it is not likely that these issues will subside. However, it is also unlikely that there will be a return to a state of affairs before the inception of hypermedia. Even the most strident critics of “Web 2.0” technologies embrace certain aspects of them.

The lifeworld of hypermedia does not necessarily oppose traditional sources of expertise to the extent that the descendants of the fiercely anti-authoritarian counterculture may suggest, though. Advocates of Web 2.0 technologies often appeal to the “wisdom of crowds”, alluding to the work of James Surowiecki (2005). Surowiecki offers the view that, under certain conditions, the aggregation of the choices of independent individuals results in a better decision than one made by a single expert. He is mainly concerned with economic decisions, offering his theory as a defence of free markets. Yet this theory also suggests a general epistemology, one which would contend that the aggregation of the beliefs of many independent individuals will generally be closer to the truth than the view of a single expert. In this sense, it is an epistemology modelled on the cybernetic view of self-regulating systems. If it is correct, knowledge would be the result of a cybernetic network of individuals rather than a hierarchical system in which knowledge is created by experts and filtered down to others.

The main problem with the “wisdom of crowds” epistemology as it stands is that it does not explain the development of knowledge in the sciences and the humanities. Knowledge of this kind doubtless requires collaboration, but in any domain of inquiry this collaboration still requires the individual mastery of methodologies and bodies of knowledge. It is not the result of mere negotiation among people with radically disparate perspectives. These methodologies and bodies of knowledge may change, of course, but a study of the history of sciences and humanities shows that this generally does not occur through the efforts of those who are generally ignorant of those methodologies and bodies of knowledge sharing their opinions and arriving at a consensus.

As a rule, individuals do not take the position of global skeptics, doubting everything that is not self-evident or that does not follow necessarily from what is self-evident. Even if people would like to think that they are skeptics of this sort, to offer reasons for being skeptical about any belief they will need to draw upon a host of other beliefs that they accept as true, and to do so they will tend to rely on sources of information that they consider authoritative (Wittgenstein, 1969). Examples of the “wisdom of crowds” will also be ones in which individuals each draw upon what they consider to be established knowledge, or at least established methods for obtaining knowledge. Consequently, the wisdom of crowds is parasitic upon other forms of wisdom.

Hypermedia technologies and the practices and lifeworld to which they belong do not necessarily commit us to the crude epistemology based on the “wisdom of crowds”. The culture of collaboration among scientists that first characterized the development of these technologies did not preclude the importance of individual expertise. Nor did it oppose all notions of hierarchy. For example, Engelbart (1962) imagined the H-LAM/T system as one in which there are hierarchies of processes, with higher executive processes governing lower ones.

The lifeworlds and practices associated with hypermedia will evidently continue to pose a challenge to traditional sources of knowledge. Educational institutions have remained somewhat unaffected by the hardships faced by the music industry and newspapers due to their connection with other institutions and practices such as accreditation. If this phenomenological study is correct, however, it is difficult to believe that they will remain unaffected as these technologies take deeper roots in our lifeworld and our cultural practices. There will continue to be a need for expertise, though, and the challenge will be to develop methods for recognizing expertise, both in the sense of providing standards for accrediting experts and in the sense of providing remuneration for expertise. As this concerns the structure of lifeworlds and practices themselves, it will require a further examination of those lifeworlds and practices and an investigation of ideas and values surrounding the nature of authority and of expertise.

References

Bloom, A. (1987). The closing of the American mind. New York: Simon & Schuster.

Bolter, J. D. (2001). Writing space: Computers, hypertext, and the remediation of print (2nd ed.). New Jersey: Lawrence Erlbaum Associates.

Bush, V. (1945). As we may think. Atlantic Monthly. Retrieved from http://www.theatlantic.com/doc/194507/bush

Chandler, D. (2002). Technological or media determinism. Retrieved from http://www.aber.ac.uk/media/Documents/tecdet/tecdet.html

Engelbart, D. (1962). Augmenting human intellect: A conceptual framework. Menlo Park: Stanford Research Institute.

Heidegger, M. (1993). Basic writings. (D.F. Krell, Ed.). San Francisco: Harper Collins.

Heidegger, M. (1962). Being and time. (J. Macquarrie & E. Robinson, Trans.). San Francisco: Harper Collins.

Keen, A. (2007). The cult of the amateur: How today’s internet is killing our culture. New York: Doubleday.

Lasch, C. (1979). The culture of narcissism: American life in an age of diminishing expectations. New York: W.W. Norton & Company.

McLuhan, M. (1962). The Gutenberg galaxy. Toronto: University of Toronto Press.

Postman, N. (1993). Technopoly: The surrender of culture to technology. New York: Vintage.

Surowiecki, J. (2005). The wisdom of crowds. Toronto: Anchor.

Turner, F. (2006). From counterculture to cyberculture: Stewart Brand, the Whole Earth Network, and the rise of digital utopianism. Chicago: University of Chicago Press.

Turner, F. (2005). Where the counterculture met the new economy: The WELL and the origins of virtual community. Technology and Culture, 46(3), 485–512.

Wittgenstein, L. (1969). On certainty. New York: Harper.

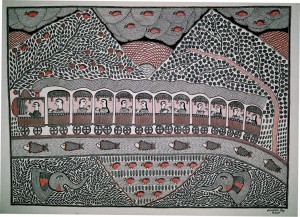

Long before there were computers in most of our homes, there was Mithila Art in homes of what is now India and Nepal. Originally, this folk art form mainly consisted of lively murals painted on the walls of homes in rural villages. But it was much more than simple art for art’s sake. “Mithila painting is part decoration, part social commentary, recording the lives of rural women in a society where reading and writing are reserved for high-caste men” (Arminton, Bindloss & Mayhew, 2006, p. 315). This was art that gave a voice to powerless rural women as a communication technology.
